Text-Guided Diffusion Models for Robust Image Manipulation: An Overview of DiffusionCLIP

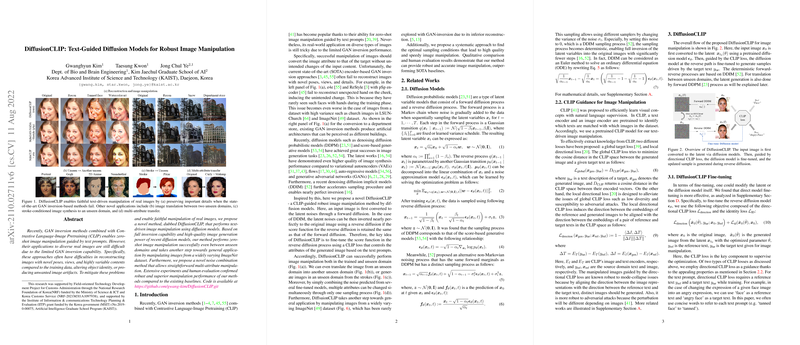

The paper "DiffusionCLIP: Text-Guided Diffusion Models for Robust Image Manipulation" addresses limitations of existing image manipulation methods based on Generative Adversarial Networks (GANs) and introduces DiffusionCLIP, an approach built on diffusion models. It uses Contrastive Language–Image Pre-training (CLIP) to guide zero-shot image manipulation from text prompts.

Key Contributions

DiffusionCLIP builds upon diffusion models, such as Denoising Diffusion Probabilistic Models (DDPM) and Denoising Diffusion Implicit Models (DDIM), which have emerged as powerful tools in image generation. Two properties make them attractive here: an image can be inverted to a latent and reconstructed almost exactly, and synthesis quality is high. DiffusionCLIP extends this potential by fine-tuning diffusion models with a CLIP-guided loss to perform text-driven manipulation of images, even in unseen domains.
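The inversion property can be illustrated with a minimal NumPy sketch of deterministic DDIM updates. Everything in it is an assumption made for illustration rather than the paper's code: the linear beta schedule, the function names (`make_alpha_bar`, `ddim_step`, `ddim_invert`, `ddim_reconstruct`), and the toy `eps_model`, which stands in for a trained noise-prediction network.

```python
import numpy as np


def make_alpha_bar(num_steps=50, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal level per step for an assumed linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    # Prepend 1.0 so that index 0 corresponds to the clean image.
    return np.concatenate([[1.0], np.cumprod(1.0 - betas)])


def ddim_step(x, eps, a_from, a_to):
    """Deterministic DDIM update between two noise levels (no random term)."""
    # Estimate the clean image implied by the current sample and noise guess.
    x0_pred = (x - np.sqrt(1.0 - a_from) * eps) / np.sqrt(a_from)
    # Re-noise that estimate to the target level using the same noise guess.
    return np.sqrt(a_to) * x0_pred + np.sqrt(1.0 - a_to) * eps


def ddim_invert(x0, eps_model, alpha_bar):
    """Forward pass: map a clean image to its latent, one level at a time."""
    x = x0
    for i in range(len(alpha_bar) - 1):
        x = ddim_step(x, eps_model(x, i), alpha_bar[i], alpha_bar[i + 1])
    return x


def ddim_reconstruct(x_latent, eps_model, alpha_bar):
    """Reverse pass: map a latent back to an image, one level at a time."""
    x = x_latent
    for i in range(len(alpha_bar) - 1, 0, -1):
        x = ddim_step(x, eps_model(x, i), alpha_bar[i], alpha_bar[i - 1])
    return x


# Toy demonstration: a hypothetical predictor that always returns the same noise.
rng = np.random.default_rng(0)
image = rng.uniform(-1.0, 1.0, size=(8, 8))
fixed_noise = rng.standard_normal((8, 8))
toy_eps_model = lambda x, i: fixed_noise

alpha_bar = make_alpha_bar()
latent = ddim_invert(image, toy_eps_model, alpha_bar)
restored = ddim_reconstruct(latent, toy_eps_model, alpha_bar)
print("max reconstruction error:", np.abs(restored - image).max())
```

With the constant toy predictor the round trip is exact up to floating-point error. A real network's prediction depends on the current sample, so the round trip is only approximate and generally improves as the number of steps grows.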

Methodological Insights

- Inversion Capability: The diffusion models used in DiffusionCLIP allow nearly perfect inversion, which avoids the reconstruction errors that limit GAN inversion methods. This ensures faithful image reconstruction and manipulation without unwanted artifacts.

- CLIP Guidance: The method uses a directional CLIP loss to control image attributes according to the provided text prompts. This loss aligns the change between the source and manipulated images with the change between the source and target text descriptions, which gives more robust results than existing global CLIP losses.

- Accelerated Processing: By using DDIM for the forward process and tuning hyperparameter settings, DiffusionCLIP shortens both forward and reverse sampling, which improves its practical applicability.

- Novel Applications: The paper demonstrates several applications of DiffusionCLIP, including manipulation in unseen domains and multi-attribute adjustments. The multi-attribute edits combine the noise predicted by multiple fine-tuned models, so several image attributes change simultaneously.
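Two of the ideas above, the directional CLIP loss and noise combination, can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions, not the authors' implementation: the embeddings are passed in as plain vectors (in practice they would come from CLIP's image and text encoders), the function names `directional_clip_loss` and `combine_noise` are hypothetical, and normalizing the combination weights to sum to 1 is a choice made for this example.

```python
import numpy as np


def directional_clip_loss(img_ref, img_gen, txt_ref, txt_tar, eps=1e-8):
    """1 - cosine similarity between the image shift and the text shift.

    All four arguments are embedding vectors of the same dimension.
    """
    delta_img = np.asarray(img_gen, dtype=float) - np.asarray(img_ref, dtype=float)
    delta_txt = np.asarray(txt_tar, dtype=float) - np.asarray(txt_ref, dtype=float)
    # eps guards against division by zero when either shift has zero length.
    denom = np.linalg.norm(delta_img) * np.linalg.norm(delta_txt) + eps
    return 1.0 - float(np.dot(delta_img, delta_txt) / denom)


def combine_noise(noise_predictions, weights):
    """Weighted average of noise predictions from several fine-tuned models."""
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()                          # assumption: weights sum to 1
    stacked = np.stack(noise_predictions)    # shape: (num_models, ...)
    return np.tensordot(w, stacked, axes=1)  # contract over the model axis


# Toy embeddings: the text moves along (-1, +1, 0); so does the edited image.
txt_ref, txt_tar = np.array([1.0, 0.0, 0.0]), np.array([0.0, 1.0, 0.0])
img_ref = np.array([0.5, 0.5, 0.0])
img_gen = img_ref + 0.3 * (txt_tar - txt_ref)
print("aligned edit loss:", directional_clip_loss(img_ref, img_gen, txt_ref, txt_tar))

# Blend the noise predicted by two hypothetical fine-tuned models equally.
eps_a, eps_b = np.zeros((2, 2)), np.ones((2, 2))
print(combine_noise([eps_a, eps_b], weights=[0.5, 0.5]))
```

The loss is near 0 when the image embedding moves in the same direction as the text embedding and grows toward 2 as the two directions oppose each other. The blended noise would replace a single model's prediction inside each reverse sampling step.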

Experimental Results

The paper provides an extensive evaluation of DiffusionCLIP against state-of-the-art methods such as StyleCLIP and StyleGAN-NADA. Notably:

- Reconstruction Quality: Quantitative analysis shows superior performance in image reconstruction metrics such as MAE, LPIPS, and SSIM.

- Manipulation Accuracy: Human evaluations indicate a stronger preference for DiffusionCLIP on both in-domain and out-of-domain manipulation tasks.

- Diverse Domain Application: The method’s ability to manipulate high-resolution images from diverse datasets (e.g., ImageNet, LSUN) marks a significant advancement over traditional GAN-based techniques.
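Of the reconstruction metrics listed above, MAE is simple enough to sketch directly; LPIPS relies on a pretrained network and SSIM on windowed image statistics, so both are left out here. The function name `mean_absolute_error` and the toy pixel values are assumptions for illustration only.

```python
import numpy as np


def mean_absolute_error(original, reconstructed):
    """Average absolute per-pixel difference between two same-shaped images."""
    original = np.asarray(original, dtype=float)
    reconstructed = np.asarray(reconstructed, dtype=float)
    if original.shape != reconstructed.shape:
        raise ValueError("images must have the same shape")
    return float(np.mean(np.abs(original - reconstructed)))


# Toy 2x2 "images"; lower values mean a more faithful reconstruction.
original = np.array([[0.0, 0.5], [1.0, 0.25]])
reconstructed = np.array([[0.0, 0.5], [1.0, 0.75]])
print("MAE:", mean_absolute_error(original, reconstructed))
```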

Practical and Theoretical Implications

Practically, DiffusionCLIP’s robustness in text-driven manipulation offers significant improvements in fields requiring precise image modifications, such as graphic design and content generation. Theoretically, the integration of diffusion models with CLIP represents a pivotal shift towards more reliable and context-aware generative models.

The paper suggests future directions for enhancing the accessibility and control of text-driven image manipulations. Further exploration could involve more nuanced integration of diffusion processes with varied forms of natural language understanding to refine manipulation accuracy.

This work sets a foundation for ongoing research into leveraging diffusion models for complex image manipulation tasks, with potential expansions into video or 3D content generation. The insights from DiffusionCLIP underscore a promising trajectory in AI-driven creative tools.