A Survey on Automated Fact-Checking

The paper "A Survey on Automated Fact-Checking," authored by Zhijiang Guo, Michael Schlichtkrull, and Andreas Vlachos, provides a comprehensive evaluation of the current approaches and developments in the domain of automated fact-checking. It delineates a framework that encapsulates the key components necessary for automating this task, which is increasingly crucial in the modern information environment characterized by rapid information dissemination, often leading to widespread misinformation.

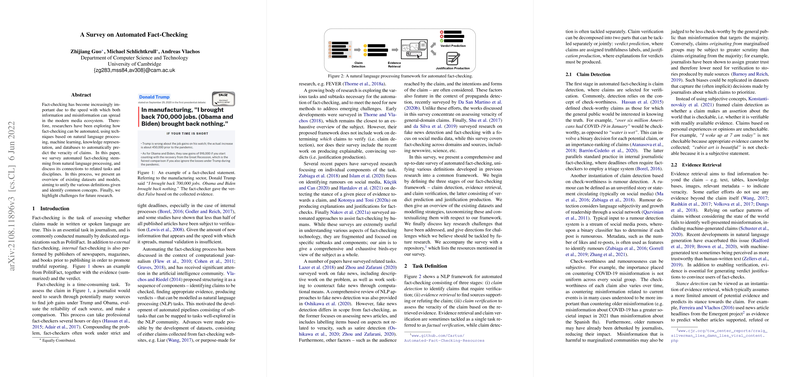

Overview of Automated Fact-Checking Framework

The authors propose a structured framework dividing automated fact-checking into three principal stages: claim detection, evidence retrieval, and claim verification. Each stage is composed of distinct tasks aimed at identifying claims, gathering supporting or refuting evidence, and finally, assessing the veracity of the claim based solely on collected evidence.

- Claim Detection: This stage involves identifying claims that require verification, which often relies on criteria such as check-worthiness. This stage typically employs classification algorithms to evaluate claims based on specific attributes like content types and contextual relevance.

- Evidence Retrieval: Following claim detection, evidence retrieval seeks to locate pertinent information from trusted sources or databases to either corroborate or contradict the claim. The process often employs information retrieval techniques or dense retrievers to identify and rank potentially relevant sources of evidence.

- Claim Verification: This final stage involves assessing the truthfulness of the claim based on the previously retrieved evidence. Fact-checking systems may adopt models analogous to Recognizing Textual Entailment (RTE) to deduce whether the evidence supports or refutes the claim.

In addition to these, the paper underlines an emergent task: justification production. This involves generating explanations that communicate how the verdict on a claim was reached, thereby enhancing the transparency and user trust in the fact-checking systems.

Datasets and Modelling Approaches

The paper provides an extensive survey of existing datasets, highlighting those that cater to different stages of the fact-checking process. It discusses datasets with both natural and artificial claims, evidencing the diversity in input types and the corresponding challenges in retrieving relevant evidence.

The authors extensively scrutinized various modeling strategies adopted across the claim detection, evidence retrieval, and verification stages, ranging from simple feature-based classifiers to complex neural network architectures. They notably discuss the utility of pipeline approaches versus end-to-end learning systems, alongside recent advancements in leveraging transformer models for more accurate retrieval and verification tasks.

Challenges and Future Directions

The paper identifies several open challenges that continue to impede progress in automated fact-checking:

- Labeling Complexity: The nuanced nature of claim veracity labels creates data annotation challenges. Automating the extraction of multi-faceted labels remains an intricate task due to the inherent complexities in defining truth.

- Source and Subjectivity: Trustworthiness of sources, often viewed as subjective depending on the social or political context, poses significant challenges for evidence retrieval. Developing systems that account for variance in source reliability is crucial for improving factual accuracy.

- Bias and Dataset Artifacts: The paper acknowledges biases inherent in datasets, often derived from synthetic data creation methods. These biases necessitate innovative strategies to create bias-mitigated models.

- Faithfulness and Multimodal Verification: Faithful representation of model decision-making processes in justification production is pivotal yet currently under-researched. Additionally, extending fact-checking capabilities to multimodal inputs like images and videos remains a daunting task.

The authors emphasize that future studies should focus on these challenges, proposing the development of sophisticated models capable of handling multiple languages, accommodating subjective source reliability, and incorporating multimodal data.

Conclusion

This survey paper significantly contributes to the field by structuring the complex processes involved in automated fact-checking into a coherent framework and by mapping out the current landscape of research developments. It serves as a vital resource for researchers seeking to understand the intricacies involved in this niche domain, addressing both the methodological advancements and the pressing challenges that lie ahead. As misinformation continues to proliferate across digital platforms, the research and development of robust automated fact-checking systems remain an essential endeavor in promoting accurate public discourse.