Evaluation of Automatic Metrics for Machine Translation

This paper presents a comprehensive evaluation of automatic metrics for machine translation (MT), measuring how well they agree with human judgments. The authors observe that the field relies heavily on automatic metrics in place of slower and costlier human evaluation, which can lead to wrong conclusions about system quality and to suboptimal development directions. Using what they identify as the largest collection of human judgment data in the field (2.3 million sentence-level evaluations across 4380 systems), they analyze in detail how closely various metrics align with human assessments.

Key Findings

- Comparison with Human Judgments: The authors demonstrate that automatic metrics often approximate human judgment poorly. Common metrics are sensitive to specific factors, such as translationese, or fail to account for the severity of translation errors. Relying on them can therefore lead to misguided development decisions.

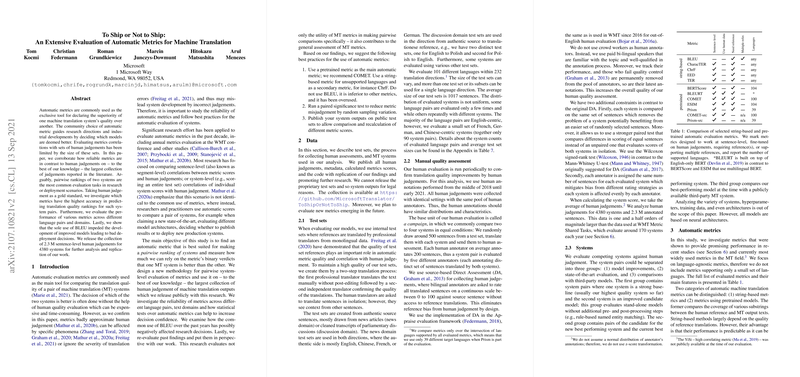

- Metric Evaluation Methodology: The paper proposes a novel methodology for pairwise system-level evaluation of metrics, focusing on their capacity to predict quality rankings. Treating human judgment as the gold standard, the authors evaluate which metrics most accurately predict how pairs of systems rank against each other.

- Limitation of BLEU: The paper argues that over-reliance on BLEU may have held back progress in MT model development. Because BLEU tracks human judgment poorly, it can lead to incorrect deployment decisions and mask real improvements in system output.

- Recommendations: The paper suggests best practices for utilizing automatic metrics:

  - Use pretrained models like COMET as primary metrics.

  - Employ string-based metrics like ChrF for unsupported languages and secondary verification.

  - Avoid BLEU given its inferior performance.

  - Conduct paired significance tests to mitigate erroneous judgments due to random variation.

  - Release system outputs on public test sets for reproducibility and further analysis.

- Metric Performance: Pretrained methods generally surpass string-based metrics in accuracy across various scenarios, including language pairs and domains. COMET emerges as the most reliable metric for pairwise comparisons, while ChrF is recommended for scenarios where more advanced metrics are infeasible.
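
The pairwise system-level evaluation described in the list above can be illustrated with a minimal sketch: for every pair of systems, check whether the metric orders the pair the same way human judgment does, and report the fraction of agreeing pairs. The function name, the dictionaries, and all scores below are hypothetical. Skipping pairs with tied human scores is an assumption of this sketch, not something the summary specifies.

```python
from itertools import combinations


def pairwise_accuracy(human, metric):
    """Fraction of system pairs where the metric and human score
    differences have the same sign (hypothetical helper)."""
    # Only compare systems that have both a human and a metric score.
    systems = sorted(set(human) & set(metric))
    agree = 0
    total = 0
    for a, b in combinations(systems, 2):
        human_delta = human[a] - human[b]
        metric_delta = metric[a] - metric[b]
        if human_delta == 0:
            # Assumption: pairs that humans could not separate are skipped.
            continue
        total += 1
        # Same sign means the metric ranks the pair like the humans do.
        if human_delta * metric_delta > 0:
            agree += 1
    if total == 0:
        raise ValueError("need at least one pair with distinct human scores")
    return agree / total


# Invented system-level scores, for illustration only.
human_scores = {"sysA": 0.30, "sysB": 0.10, "sysC": -0.20}
metric_scores = {"sysA": 0.85, "sysB": 0.80, "sysC": 0.82}

# The metric misorders sysB and sysC, so it agrees on 2 of 3 pairs.
print(pairwise_accuracy(human_scores, metric_scores))
```

A metric that only gets the overall trend right can still score poorly here, because every individual pair counts equally, including pairs of closely matched systems.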

Implications and Future Directions

The findings have practical implications that underline the need to change standard practice in MT evaluation. The inadequacy of BLEU as a standalone metric suggests that the community should move to more complex but more reliable metrics such as COMET. The transition should be deliberate, so that innovation is not held back by historical inertia or by the convenience of familiar tools.
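
One of the recommended practices, paired significance testing, can be sketched with paired bootstrap resampling, which is one common choice and not necessarily the exact test used in the paper. The sketch assumes segment-level scores for two systems on the same test set and averages them within each resample. For corpus-level metrics, the metric would instead be recomputed on every resample. All names and scores are hypothetical.

```python
import random


def paired_bootstrap(scores_a, scores_b, n_resamples=1000, seed=0):
    """Fraction of resampled test sets on which system A has a
    strictly higher mean score than system B (hypothetical helper)."""
    if len(scores_a) != len(scores_b) or not scores_a:
        raise ValueError("need two non-empty score lists of equal length")
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    n = len(scores_a)
    wins_a = 0
    for _ in range(n_resamples):
        # Draw segment indices with replacement. Using the same indices
        # for both systems is what makes the test paired.
        idx = [rng.randrange(n) for _ in range(n)]
        mean_a = sum(scores_a[i] for i in idx) / n
        mean_b = sum(scores_b[i] for i in idx) / n
        if mean_a > mean_b:
            wins_a += 1
    return wins_a / n_resamples


# Invented segment-level scores, for illustration only.
system_a = [0.71, 0.64, 0.80, 0.55, 0.69, 0.77]
system_b = [0.68, 0.66, 0.74, 0.57, 0.61, 0.75]

win_rate = paired_bootstrap(system_a, system_b)
print(f"A beats B in {win_rate:.1%} of resamples")
```

A win rate near 50% suggests the observed difference could be due to random variation in the test set, while a rate close to 100% (for example, above 95%) is commonly read as a significant improvement.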

The release of the extensive human judgment dataset provides an invaluable resource for replication studies and the development of future metrics. Such openness in data sharing fosters collaborative efforts to refine MT systems and their evaluation, which is critical for the field's long-term progression.

On a theoretical level, the research points to a broader understanding of how automatic metrics can be optimized to reflect human judgment more accurately. The effectiveness of COMET-src as a reference-free metric opens new avenues for evaluating translation outputs in resource-constrained contexts.

In conclusion, this paper provides robust evidence for re-evaluating the metrics traditionally used in MT research and proposes a clear path toward more reliable metrics, and thus more accurate assessments of machine translation systems. The continued refinement and adoption of such metrics is likely to play an important role in the field's advancement.