Overview of ChineseBERT: Chinese Pretraining Enhanced by Glyph and Pinyin Information

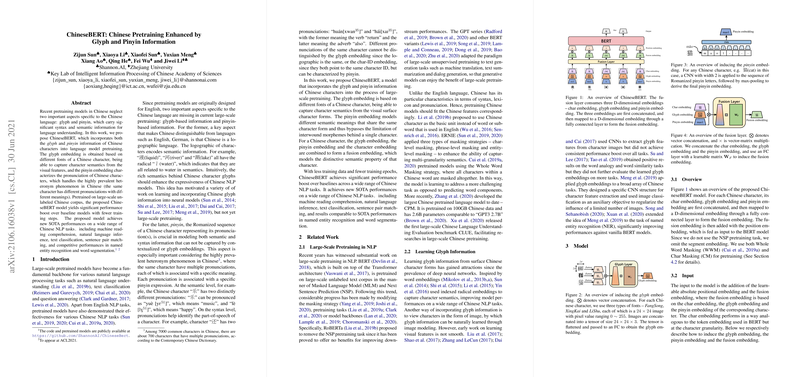

The paper presents ChineseBERT, a pretrained language model tailored to Chinese that integrates glyph and pinyin information into pretraining. The approach addresses a limitation of earlier Chinese pretraining models, which often overlook these distinctive features of Chinese characters.

Key Features of ChineseBERT

ChineseBERT incorporates two critical components unique to Chinese:

- Glyph Information: Chinese uses a logographic script in which the visual components of a character often carry semantic hints. The model captures these visual cues by building a glyph embedding for each character from renderings in multiple fonts, which helps it pick up meanings that are visible in the character's shape.

- Pinyin Information: Pinyin is the Romanized representation of how a Chinese character is pronounced. It addresses polyphonic characters, where a single character has several pronunciations, each with a different meaning. By embedding pinyin, ChineseBERT can tell these readings apart, improving the model’s grasp of both the syntactic and semantic facets of the language.
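
To make the two components above more concrete, here is a minimal, standard-library-only sketch of how a pinyin string could be turned into a fixed-length input and then fused with character and glyph embeddings. Several details are assumptions based on my reading of the paper rather than anything stated in this overview: the fixed pinyin length of 8, the `-` padding symbol, the tone written as a trailing digit, the width-2 window with max pooling, and fusion by concatenation followed by a linear map. All function names, the toy dimension `DIM`, and the random vectors are hypothetical stand-ins for learned parameters, and the window step simply sums adjacent symbol vectors instead of applying learned convolution filters.

```python
import random

PINYIN_LEN = 8  # fixed sequence length (assumed from the paper's description)
PAD = "-"       # padding symbol for short pinyin strings (assumed)
DIM = 4         # toy embedding width; real models use hundreds of dimensions


def encode_pinyin(pinyin_with_tone):
    """Pad a tone-numbered pinyin string such as 'yue4' to PINYIN_LEN symbols."""
    if len(pinyin_with_tone) > PINYIN_LEN:
        raise ValueError(f"pinyin too long: {pinyin_with_tone!r}")
    return list(pinyin_with_tone.ljust(PINYIN_LEN, PAD))


def symbol_vector(symbol):
    """Deterministic toy vector per symbol, standing in for a learned table."""
    rng = random.Random(ord(symbol))
    return [rng.uniform(-1, 1) for _ in range(DIM)]


def pinyin_embedding(symbols):
    """Sum each pair of adjacent symbols (a width-2 window), then max-pool."""
    vecs = [symbol_vector(s) for s in symbols]
    windows = [[a + b for a, b in zip(u, v)] for u, v in zip(vecs, vecs[1:])]
    return [max(column) for column in zip(*windows)]


def fuse(char_emb, glyph_emb, pinyin_emb, weights):
    """Concatenate the three embeddings and project back to DIM dimensions."""
    concatenated = char_emb + glyph_emb + pinyin_emb
    return [sum(w * x for w, x in zip(row, concatenated)) for row in weights]


rng = random.Random(0)
weights = [[rng.uniform(-1, 1) for _ in range(3 * DIM)] for _ in range(DIM)]
char_emb = [rng.uniform(-1, 1) for _ in range(DIM)]   # same character: 乐
glyph_emb = [rng.uniform(-1, 1) for _ in range(DIM)]  # same glyph: 乐

# Two readings of the polyphonic character 乐: "le4" (happy) and "yue4" (music).
fused_le = fuse(char_emb, glyph_emb, pinyin_embedding(encode_pinyin("le4")), weights)
fused_yue = fuse(char_emb, glyph_emb, pinyin_embedding(encode_pinyin("yue4")), weights)

print(encode_pinyin("le4"))
print(encode_pinyin("yue4"))
```

In this toy setup the character and glyph vectors are identical for both readings, so any difference between `fused_le` and `fused_yue` can only come from the pinyin input. That is the mechanism the pinyin bullet describes: the pronunciation gives the model a signal for separating readings that look identical on the page.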

Performance Evaluation

Adding glyph and pinyin embeddings yields significant improvements over baseline models across several Chinese NLP tasks. ChineseBERT achieves new state-of-the-art (SOTA) results on machine reading comprehension, natural language inference, text classification, and sentence pair matching. On named entity recognition and word segmentation, it achieves competitive performance.

Comparison with Existing Models

Compared with other pretraining approaches such as ERNIE, BERT-wwm, and MacBERT, ChineseBERT is reported to achieve better performance with fewer training steps. This efficiency is attributed to the additional semantic signal carried by glyph and pinyin information, which appears to act as a regularizer and lets the model converge faster even with less training data.

Implications and Future Directions

Integrating glyph and pinyin information into ChineseBERT improves task performance and also points to a promising direction for language-specific pretraining. The results suggest that features specific to a language and its writing system can materially improve natural language understanding.

Future work could extend this approach to other logographic writing systems, which may benefit cross-lingual NLP, and could investigate hybrid models that incorporate additional multimodal information to further improve semantic understanding. Training larger models and experimenting with more diverse datasets could also yield further insights and refinements.