Efficient Passage Retrieval with Hashing for Open-domain Question Answering

The paper "Efficient Passage Retrieval with Hashing for Open-domain Question Answering" by Yamada et al. addresses a critical challenge in open-domain question answering (QA): the large memory footprint of passage retrieval models. The authors propose the Binary Passage Retriever (BPR), a method that substantially reduces memory requirements while maintaining retrieval accuracy.

Overview of the Approach

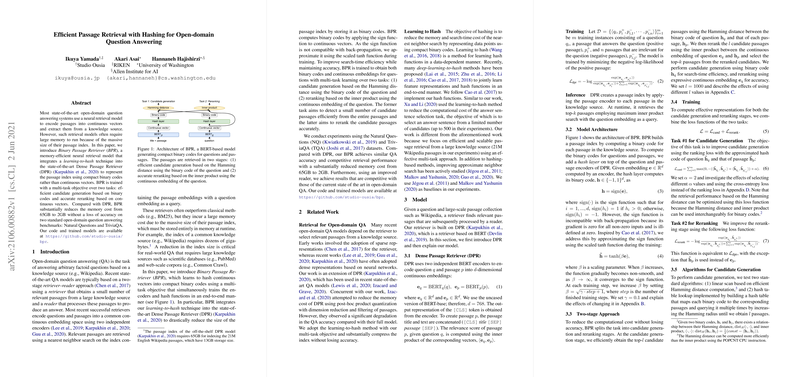

Many contemporary QA systems follow a two-stage retriever-reader framework, and Dense Passage Retriever (DPR) is a prominent choice of retriever. DPR represents each passage as a continuous vector embedding, so indexing a large knowledge source such as Wikipedia requires substantial memory.

BPR integrates a learning-to-hash technique into DPR so that the passage index stores compact binary codes instead of continuous vectors. This switch reduces the index size from 65GB to 2GB without loss of accuracy on the Natural Questions (NQ) and TriviaQA (TQA) benchmarks.
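The 65GB-to-2GB reduction can be reproduced with back-of-the-envelope arithmetic. The sketch below assumes a DPR-style Wikipedia index of roughly 21 million passages with 768-dimensional float32 embeddings, hashed into 768-bit codes; these sizes and the helper names are illustrative assumptions, not figures taken from the text above.

```python
# Back-of-the-envelope index sizes under an ASSUMED DPR-style setup.
NUM_PASSAGES = 21_000_000  # assumption: ~21M Wikipedia passages
DIM = 768                  # assumption: BERT-base embedding size (and code length in bits)

def float_index_bytes(num_passages: int, dim: int) -> int:
    """Flat index storing one float32 (4-byte) vector per passage."""
    return num_passages * dim * 4

def binary_index_bytes(num_passages: int, num_bits: int) -> int:
    """Index storing one packed binary code of num_bits bits per passage."""
    return num_passages * ((num_bits + 7) // 8)  # round up to whole bytes

dense = float_index_bytes(NUM_PASSAGES, DIM)
binary = binary_index_bytes(NUM_PASSAGES, DIM)
print(f"dense:  {dense / 1e9:.1f} GB")   # → dense:  64.5 GB
print(f"binary: {binary / 1e9:.1f} GB")  # → binary: 2.0 GB
print(f"ratio:  {dense // binary}x")     # → ratio:  32x
```

The factor of 32 comes from replacing each 32-bit float with a single bit, so it holds regardless of corpus size.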

Methodological Insights

BPR is trained with a multi-task objective that jointly optimizes two tasks: efficient candidate generation based on binary codes and accurate passage reranking based on continuous vectors. Binary codes are obtained by applying the sign function to the encoder's embeddings. Because the sign function has zero gradient almost everywhere, training replaces it with a scaled tanh function, tanh(βx), which approaches sign(x) as β grows and allows gradients to be backpropagated.
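The following sketch illustrates why the scaled tanh works as a training-time stand-in for the sign function: as β increases, tanh(βx) pushes each coordinate toward -1 or +1, while its derivative stays nonzero. The embedding values, the β values, and the tie-break at zero are arbitrary assumptions; how β is scheduled during training is not covered here.

```python
import math

def sign(x: float) -> float:
    """Hard binarization: +1 for x >= 0, else -1 (tie-break at 0 is an assumption)."""
    return 1.0 if x >= 0 else -1.0

def scaled_tanh(x: float, beta: float) -> float:
    """Differentiable surrogate tanh(beta * x) for sign(x) during training."""
    return math.tanh(beta * x)

def scaled_tanh_grad(x: float, beta: float) -> float:
    """Derivative beta * (1 - tanh(beta * x)**2); unlike sign, it is nonzero."""
    t = math.tanh(beta * x)
    return beta * (1.0 - t * t)

embedding = [0.8, -0.05, 0.3, -1.2]  # hypothetical embedding values
for beta in (1.0, 10.0, 100.0):
    # Larger beta pushes every coordinate closer to -1 or +1.
    print(beta, [round(scaled_tanh(v, beta), 3) for v in embedding])
print("sign", [sign(v) for v in embedding])
print("grad at 0.3, beta=10:", scaled_tanh_grad(0.3, 10.0))
```

At β = 100 the rounded surrogate already matches the hard sign on every coordinate, yet the gradient remains available for backpropagation at smaller β.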

The retrieval model operates in two stages:

- Candidate Generation: Compares the binary code of the question with the binary codes of all passages using Hamming distance, which is fast to compute, and keeps the closest passages as candidates.

- Reranking: Reorders the candidates by the inner product between the continuous question embedding and each candidate's binary code.
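The two stages can be sketched in plain Python as below. This is a minimal, brute-force illustration, not the authors' implementation: the helper names (`binarize`, `pack`, `hamming`, `retrieve`), the toy codes, and the linear scan are hypothetical, and a production system would use an optimized binary index instead.

```python
from typing import List, Sequence, Tuple

def binarize(vec: Sequence[float]) -> List[int]:
    """Sign function: map a continuous vector to a {-1, +1} code (0 maps to +1)."""
    return [1 if v >= 0 else -1 for v in vec]

def pack(code: Sequence[int]) -> int:
    """Pack a {-1, +1} code into an int bitmask (bit i set where code[i] == +1)."""
    mask = 0
    for i, bit in enumerate(code):
        if bit > 0:
            mask |= 1 << i
    return mask

def hamming(a: int, b: int) -> int:
    """Hamming distance between two packed codes: count of differing bits."""
    return bin(a ^ b).count("1")

def retrieve(question_emb: Sequence[float], passage_codes: List[List[int]],
             num_candidates: int, top_k: int) -> List[Tuple[int, float]]:
    """Two-stage retrieval over a brute-force list of passage codes (hypothetical helper)."""
    # Stage 1: hash the question and keep the passages closest in Hamming distance.
    q_packed = pack(binarize(question_emb))
    packed = [pack(code) for code in passage_codes]
    candidates = sorted(range(len(packed)),
                        key=lambda i: hamming(q_packed, packed[i]))[:num_candidates]
    # Stage 2: rerank candidates by the inner product between the continuous
    # question embedding and each candidate's {-1, +1} binary code.
    scored = [(i, sum(q * b for q, b in zip(question_emb, passage_codes[i])))
              for i in candidates]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Toy index of already-binarized passage codes and one question embedding.
passage_codes = [[1, 1, 1, -1], [-1, 1, -1, -1], [1, -1, -1, -1], [-1, -1, 1, 1]]
question = [0.7, 0.1, -0.9, -0.05]
print(retrieve(question, passage_codes, num_candidates=3, top_k=2))
```

In this toy run, passage 3 is filtered out in the first stage (all four bits differ), passages 0 to 2 tie at Hamming distance 1, and the continuous question embedding breaks the tie, ranking passage 2 first and passage 1 second. This is the motivation for the second stage: Hamming distance is cheap but coarse.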

Experimental Findings

BPR was evaluated on the NQ and TQA datasets. Its top-20 recall is comparable to that of the full DPR system, while its memory footprint is dramatically smaller. In addition, when paired with a stronger reader, the BPR-based system matches or exceeds the QA accuracy of much larger models while using fewer parameters.
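Top-k recall in this setting is commonly computed as the fraction of questions for which at least one of the k highest-ranked passages contains a gold answer string. The sketch below assumes that definition; the function name and toy data are hypothetical, and the simple case-insensitive substring test stands in for the more careful text normalization that standard evaluation scripts apply.

```python
from typing import Sequence

def top_k_accuracy(retrieved: Sequence[Sequence[str]],
                   answers: Sequence[Sequence[str]], k: int) -> float:
    """Fraction of questions whose top-k passages include at least one answer string.

    Matching is a simplified case-insensitive substring test (an assumption).
    """
    if not retrieved:
        return 0.0
    hits = 0
    for passages, golds in zip(retrieved, answers):
        top = [p.lower() for p in passages[:k]]
        if any(ans.lower() in p for p in top for ans in golds):
            hits += 1
    return hits / len(retrieved)

# Hypothetical ranked passages for two questions, with their gold answers.
retrieved = [
    ["Paris is the capital of France.", "Lyon is in France."],
    ["The Nile flows north.", "Mount Everest is 8,849 m tall."],
]
answers = [["Paris"], ["8,849"]]
print(top_k_accuracy(retrieved, answers, k=1))  # → 0.5
print(top_k_accuracy(retrieved, answers, k=2))  # → 1.0
```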

Compared with other approaches to efficient retrieval, such as approximate nearest neighbor search with Hierarchical Navigable Small World (HNSW) graphs or vector compression with product quantization, BPR offers a favorable trade-off between storage efficiency and query performance.

Implications and Future Directions

Beyond its practical value in making QA systems over large knowledge sources less resource-intensive to deploy, BPR opens avenues for further work on retrieval efficiency, such as integrating more advanced hashing methods and improving multi-task training for retrieval.

By providing a substantially compressed index without loss of accuracy, BPR shows that a retrieval model can meet the scalability demands of contemporary QA systems. The work also encourages further research into aligning the retriever with the reader, potentially using more sophisticated neural architectures and training regimes.