Vector Neurons: A General Framework for SO(3)-Equivariant Networks

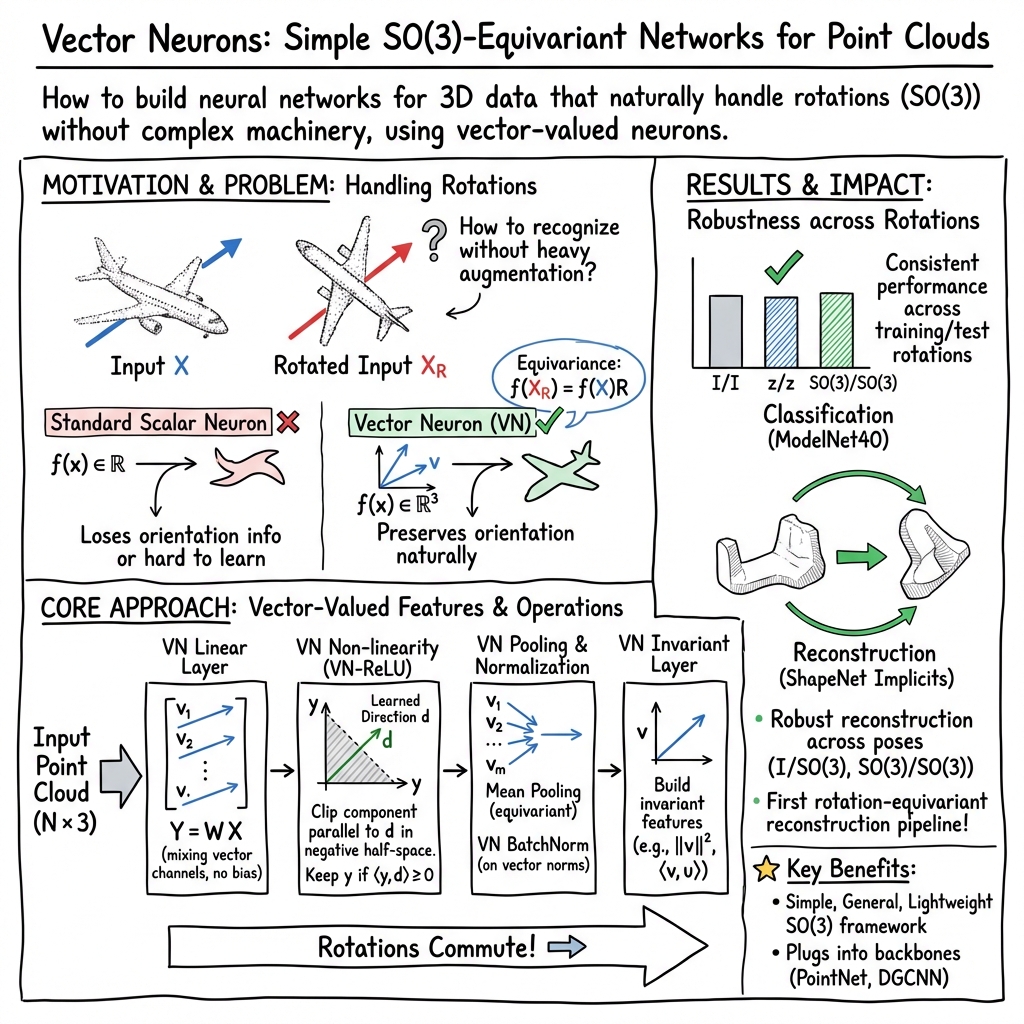

Abstract: Invariance and equivariance to the rotation group have been widely discussed in the 3D deep learning community for pointclouds. Yet most proposed methods either use complex mathematical tools that may limit their accessibility, or are tied to specific input data types and network architectures. In this paper, we introduce a general framework built on top of what we call Vector Neuron representations for creating SO(3)-equivariant neural networks for pointcloud processing. Extending neurons from 1D scalars to 3D vectors, our vector neurons enable a simple mapping of SO(3) actions to latent spaces thereby providing a framework for building equivariance in common neural operations -- including linear layers, non-linearities, pooling, and normalizations. Due to their simplicity, vector neurons are versatile and, as we demonstrate, can be incorporated into diverse network architecture backbones, allowing them to process geometry inputs in arbitrary poses. Despite its simplicity, our method performs comparably well in accuracy and generalization with other more complex and specialized state-of-the-art methods on classification and segmentation tasks. We also show for the first time a rotation equivariant reconstruction network.

Explain it Like I'm 14

What is this paper about?

This paper introduces a simple way to build 3D deep learning models that handle rotations naturally. The authors propose “Vector Neurons,” which means instead of neurons holding single numbers, they hold 3D arrows. Because arrows rotate the same way objects do, the network can understand shapes correctly no matter how they’re turned. This helps tasks like recognizing objects, labeling parts of a shape, and rebuilding 3D models to work reliably even when the input is rotated.

What questions did the researchers ask?

- How can we make 3D neural networks work well on shapes no matter how those shapes are rotated in space?

- Can we design a general set of building blocks (layers) that make common network operations “rotation-friendly” without complicated math or special tricks?

- Will this approach work across different tasks (classification, segmentation, and reconstruction) and different network types (like PointNet and DGCNN)?

- Can such a simple idea match or beat more complex methods, especially when inputs are seen at random rotations?

How did they do it? Methods and approach

Think of a neural network as a machine that passes information through layers. Normally, each neuron holds a single number (a “scalar”). This paper changes that: each neuron holds a 3D vector—like a small arrow in space. Here’s why that helps:

- Rotations in 3D (called SO(3)) spin the whole space. If your neurons are arrows, they rotate in the same way the input points rotate. That makes it easier to keep track of orientation correctly.
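The difference between a quantity that rotates with the input (equivariant) and one that ignores rotation (invariant) can be checked numerically. The snippet below is a minimal illustration, not code from the paper; the helper names `centroid` and `mean_length` and the random point cloud are assumptions made for this example.

```python
import numpy as np

theta = np.pi / 3
# Rotation by theta about the z-axis
R = np.array([[np.cos(theta), -np.sin(theta), 0.0],
              [np.sin(theta),  np.cos(theta), 0.0],
              [0.0,            0.0,           1.0]])

rng = np.random.default_rng(0)
points = rng.normal(size=(100, 3))   # a toy point cloud, one point per row
rotated = points @ R.T               # the same cloud after rotation

def centroid(p):
    """An arrow-valued summary of the cloud."""
    return p.mean(axis=0)

def mean_length(p):
    """A number-valued summary of the cloud."""
    return np.linalg.norm(p, axis=1).mean()

# Equivariant: rotating the input rotates the arrow in the same way.
equivariant_ok = np.allclose(centroid(rotated), R @ centroid(points))
# Invariant: rotating the input leaves the number unchanged.
invariant_ok = np.isclose(mean_length(rotated), mean_length(points))

print(equivariant_ok, invariant_ok)
```

An arrow-valued feature carries orientation along with it, while a length-based feature discards it. Vector neurons keep the first kind of feature inside the network and switch to the second kind only at the end.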

To make a full “rotation-aware” toolbox, the authors redesigned standard layers using vector neurons:

- Linear layers: These mix and combine arrows across channels. Because mixing is done the same way before and after a rotation, the layer’s output rotates exactly as the input does. That’s called “equivariance.”

- Non-linearities (like ReLU): Standard ReLU works on numbers, not arrows. So they created a vector version that uses a learned direction (another arrow) as a reference. If an input arrow points “with” that direction, it passes through; if it points “against” it, they clip the opposing part. Since the reference direction is computed from the data in a rotation-aware way, this keeps the network rotation-equivariant.

- Pooling: Pooling combines information. Mean pooling is already rotation-friendly for arrows. They also designed a vector max-pooling that chooses arrows that align best with learned directions.

- Normalization: Normalization stabilizes training. Batch normalization for arrows is done on arrow lengths (which are rotation-invariant), avoiding problems caused by mixing different poses.

- Invariant layer: For tasks like classification and segmentation, you want the final answer not to change when the input rotates. They create features from arrows that don’t change under rotation (like lengths and inner products between arrows), so the final output is rotation-invariant.
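The layers listed above can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not the authors' implementation: features are stored as arrays of shape `(C, 3)` with one arrow per row, a rotation acts as `V @ R`, the weight matrices `W`, `U`, `Wp`, and `A` are random stand-ins for learned parameters, and all function names are hypothetical. Normalization is omitted, and the invariant layer uses a single linear map to build its reference frame where a real network would likely use something richer.

```python
import numpy as np

def random_rotation(rng):
    """Random 3x3 rotation matrix (orthogonal, determinant +1)."""
    q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]  # flip one column to turn a reflection into a rotation
    return q

def vn_linear(V, W):
    """Mix arrows across channels. V: (C, 3), W: (C_out, C) -> (C_out, 3)."""
    return W @ V

def vn_relu(V, W, U, eps=1e-8):
    """Keep arrows that agree with a data-dependent direction; clip the rest."""
    Q = W @ V                                    # candidate output arrows
    K = U @ V                                    # reference directions, built from the data
    K_unit = K / (np.linalg.norm(K, axis=1, keepdims=True) + eps)
    along = np.sum(Q * K_unit, axis=1, keepdims=True)  # signed component along K
    # If the arrow points against K, remove the opposing component.
    return np.where(along >= 0, Q, Q - along * K_unit)

def vn_mean_pool(P):
    """Average arrows over N points. P: (N, C, 3) -> (C, 3)."""
    return P.mean(axis=0)

def vn_max_pool(P, Wp):
    """Per channel, pick the point whose arrow best aligns with its direction."""
    K = np.einsum("dc,ncx->ndx", Wp, P)   # direction per point and channel
    score = np.sum(K * P, axis=-1)        # (N, C) alignment, unchanged by rotation
    best = np.argmax(score, axis=0)       # winning point index for each channel
    return P[best, np.arange(P.shape[1])]

def vn_invariant(V, A):
    """Express arrows in a frame that rotates with them. A: (3, C)."""
    T = A @ V          # (3, 3) frame built from the data, so it is equivariant
    return V @ T.T     # inner products with the frame: rotation cancels out

# Numerical check on random data (sizes chosen arbitrarily for the demo).
rng = np.random.default_rng(0)
C, C_out, N = 4, 5, 6
V = rng.normal(size=(C, 3))
P = rng.normal(size=(N, C, 3))
W, U = rng.normal(size=(C_out, C)), rng.normal(size=(C_out, C))
Wp, A = rng.normal(size=(C, C)), rng.normal(size=(3, C))
R = random_rotation(rng)

checks = {
    "linear is equivariant": np.allclose(vn_linear(V @ R, W), vn_linear(V, W) @ R),
    "relu is equivariant": np.allclose(vn_relu(V @ R, W, U), vn_relu(V, W, U) @ R),
    "mean pool is equivariant": np.allclose(vn_mean_pool(P @ R), vn_mean_pool(P) @ R),
    "max pool is equivariant": np.allclose(vn_max_pool(P @ R, Wp), vn_max_pool(P, Wp) @ R),
    "invariant layer is invariant": np.allclose(vn_invariant(V @ R, A), vn_invariant(V, A)),
}
print(checks)
```

The pattern is the same in every layer: weights only ever mix channels (they multiply from the left), while rotations act on the three spatial coordinates (from the right), so the two operations never interfere. Decisions such as "clip or keep" and "which point wins" depend only on inner products, which rotation leaves unchanged.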

They plugged these vector neuron layers into two popular 3D point cloud networks:

- VN-PointNet: A vector-neuron version of PointNet.

- VN-DGCNN: A vector-neuron version of DGCNN (which uses local neighborhoods and edge features).

They tested on:

- Classification (ModelNet40): Predict the object category.

- Part segmentation (ShapeNet Part): Label each point with the part it belongs to.

- 3D reconstruction (ShapeNet): Rebuild the shape as an implicit function from a sparse, noisy point cloud.

What did they find and why it matters?

Here are the key results, explained simply:

- The vector neuron networks performed strongly when the test shapes were rotated in ways the network hadn’t seen during training. This shows true rotation robustness without relying on heavy data augmentation.

- When tested under random 3D rotations, VN-DGCNN achieved the top accuracy in classification and segmentation among methods that use only point coordinates.

- They built, for the first time, a rotation-equivariant reconstruction network: the encoder is rotation-equivariant, and the decoder produces rotation-invariant outputs. This makes reconstruction consistent across poses.

- Their approach is simple and lightweight. It often uses fewer parameters than standard networks and avoids complex math that can be hard to implement.

- In cases where shapes are perfectly aligned (no rotations), some traditional methods can be slightly more accurate in reconstruction. But the VN approach shines when inputs appear at random rotations.
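The reconstruction result above depends on a decoder whose answer for a query point does not change when the query point and the latent arrows are rotated together. The sketch below shows one way to obtain such rotation-invariant inputs for a decoder. It is an illustration only and not necessarily the authors' decoder: the function name `invariant_query_features` is hypothetical, and the pairwise inner products of the latent arrows are used here as a simple stand-in for a learned invariant layer.

```python
import numpy as np

def invariant_query_features(x, Z):
    """Rotation-invariant description of a query point x relative to a latent code Z.

    x: (3,) query point; Z: (C, 3) latent arrows from an equivariant encoder.
    """
    dots = Z @ x                    # (C,) inner products between x and each latent arrow
    sq_norm = np.array([x @ x])     # squared distance of x from the origin
    gram = (Z @ Z.T).ravel()        # inner products among the latent arrows
    return np.concatenate([dots, sq_norm, gram])

# Rotate both the query point and the latent code, then compare features.
theta = 0.7
R = np.array([[np.cos(theta), -np.sin(theta), 0.0],
              [np.sin(theta),  np.cos(theta), 0.0],
              [0.0,            0.0,           1.0]])
rng = np.random.default_rng(1)
x = rng.normal(size=3)
Z = rng.normal(size=(8, 3))

same = np.allclose(invariant_query_features(x @ R, Z @ R),
                   invariant_query_features(x, Z))
print(same)
```

Any ordinary network applied to these features gives the same prediction for a rotated shape queried at the correspondingly rotated point, which is what makes the reconstruction consistent across poses.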

To make this easier to digest, here’s a short list summarizing the main findings:

- Works well across tasks and architectures under random rotations.

- Comparable or better than more complex rotation-specialized methods.

- First demonstration of a rotation-equivariant network for 3D reconstruction.

- Simpler, fewer parameters, and easy to plug into existing models.

What is the impact of this research?

This research suggests a practical path to build 3D models that “just work” regardless of object orientation. That matters for:

- Robotics and drones: Objects won’t always face the same way, so recognizing them reliably is crucial.

- Augmented/virtual reality: Users move devices, and virtual objects rotate—robustness is important.

- Self-driving cars and phones with depth sensors: Scanned objects appear in many poses; rotation-aware models reduce errors.

Because vector neurons are simple and general, they can be used in many network designs and possibly extended to other data types (meshes, voxels, even images) or other transformation groups (like scaling). By reducing the need for careful data alignment and heavy augmentation, this approach can make 3D learning systems more reliable and easier to build.
