Overview of "Towards Open-World Text-Guided Face Image Generation and Manipulation"

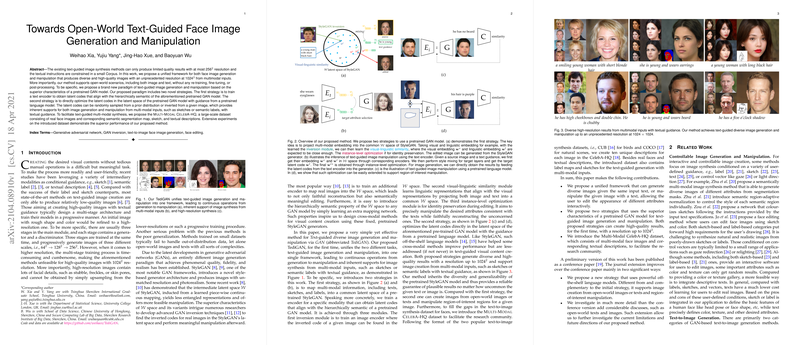

The paper presents TediGAN, a unified framework for text-guided image generation and manipulation using pretrained generative adversarial networks (GANs), specifically focusing on face images. TediGAN achieves high-resolution image synthesis and manipulation from multimodal inputs, such as text, sketches, and semantic labels, without additional training or post-processing.

Methodology

Pretrained GAN Utilization

The core of TediGAN's approach relies on the use of a pretrained StyleGAN model, leveraging its latent space for both image generation and manipulative tasks. This pretrained model provides a rich, semantically meaningful space that can be exploited by additional encoders.

Image and Text Encoding

TediGAN introduces two key strategies for aligning multimodal inputs with the pretrained GAN's latent space:

- Trained Text Encoder Approach: This strategy involves a dedicated text encoder trained to project linguistic inputs into the same space as visual inputs. The visual-linguistic similarity module ensures semantically meaningful alignment by leveraging StyleGAN's hierarchical latent structure.

- Pretrained LLMs: The second strategy incorporates pretrained LLMs like CLIP to directly optimize latent codes with guidance from text-image similarity scores.

Instance-Level Optimization

For image manipulation tasks, TediGAN utilizes an instance-level optimization module, which enhances identity preservation and precise attribute modification. This is achieved by further refinements on the inverted latent codes, guided by both pixel-level and semantic criteria.

Integration and Control Mechanisms

A style mixing mechanism is employed to facilitate diverse outputs and precise control over attributes, utilizing the layer-specific semantic knowledge of the latent space. This mechanism enables targeted editability based on textual instructions or other modalities such as sketches.

Experimental Results

The introduction of the Multi-Modal CelebA-HQ dataset provides a robust benchmark for evaluating TediGAN against state-of-the-art models, including AttnGAN, DM-GAN, and ManiGAN. Quantitative evaluations using metrics such as FID and LPIPS, alongside user studies for realism and text-image coherence, demonstrate TediGAN's superior performance in generating high-quality, consistent, and photo-realistic results.

Practical and Theoretical Implications

Practically, TediGAN proposes significant advancements for applications requiring seamless transition between generation and manipulation tasks at high resolutions and diverse conditions. Theoretically, it highlights the potential of pretrained GAN models in open-world scenarios and multifaceted input settings, showcasing possibilities for further development in cross-modal image synthesis.

Future Directions

Future research should address the limitations related to GAN's inherent biases and the high computational costs of instance-specific optimizations. Extending the framework to support broader classes and enhance real-time efficiency remains a promising area for exploration.

Overall, TediGAN presents a compelling approach to unified image synthesis and manipulation, emphasizing the synergy between pretrained models and multimodal inputs. The framework provides insightful pathways for leveraging GANs in generating and modifying images rich in detail and semantic coherence.