Evaluating the Efficacy of Rainbow Memory for Continual Learning

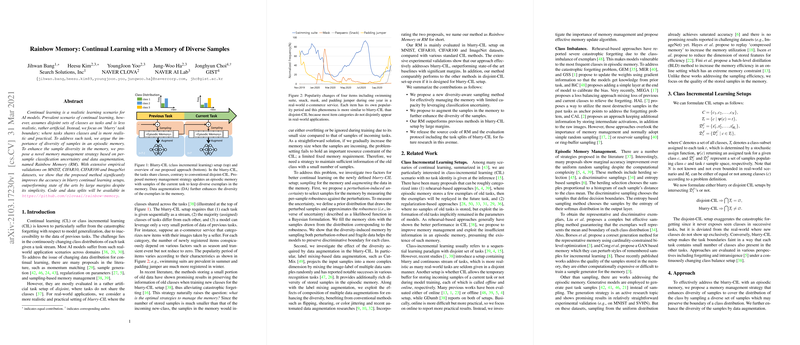

The paper "Rainbow Memory: Continual Learning with a Memory of Diverse Samples" addresses continual learning (CL) in the more realistic and complex setting where task boundaries are blurry rather than disjoint. In class-incremental learning (CIL), a widely studied CL setup, models suffer from catastrophic forgetting because they have little or no access to data from past tasks. The problem becomes harder when tasks share classes, a scenario referred to as blurry task boundaries. The authors propose Rainbow Memory (RM), which increases the diversity of the samples stored in an episodic memory by combining per-sample classification uncertainty with data augmentation.
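To make the disjoint versus blurry distinction concrete, the following is a minimal sketch of how a task stream with shared classes might be built from a labeled dataset. The helper `make_blurry_tasks`, its `minor_ratio` parameter, and the round-robin assignment are illustrative assumptions, not the paper's exact protocol.

```python
import numpy as np

def make_blurry_tasks(labels, num_tasks, minor_ratio, seed=0):
    """Split sample indices into tasks whose class sets may overlap.

    Hypothetical helper: each class has one "home" task that receives most
    of its samples, and a fraction `minor_ratio` of its samples is spread
    over the other tasks. minor_ratio=0.0 gives the disjoint setup.
    """
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    classes = np.unique(labels)
    # Assign each class a home task in round-robin order (an assumption).
    home = {c: i % num_tasks for i, c in enumerate(classes)}
    tasks = [[] for _ in range(num_tasks)]
    for c in classes:
        idx = rng.permutation(np.flatnonzero(labels == c))
        n_minor = int(round(minor_ratio * len(idx)))
        minor, major = idx[:n_minor], idx[n_minor:]
        tasks[home[c]].extend(major.tolist())
        others = [t for t in range(num_tasks) if t != home[c]]
        if not others:
            # Single-task stream: nowhere else to place the minor samples.
            tasks[home[c]].extend(minor.tolist())
            continue
        # Distribute the minor samples over the remaining tasks.
        for j, i in enumerate(minor.tolist()):
            tasks[others[j % len(others)]].append(i)
    return tasks
```

With `minor_ratio=0.0` every class appears in exactly one task, which corresponds to the disjoint setup; any positive value makes classes leak into other tasks, which is the situation the paper targets.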

Overview of Contributions

- Novel Memory Management Strategy: Rainbow Memory selects samples for the episodic memory according to per-sample classification uncertainty. The uncertainty of a sample is estimated from the variation in the model's outputs across perturbed copies of that sample, where the perturbations come from various data augmentation techniques. Samples are then chosen to span the range of uncertainty, so the memory holds both robust samples and fragile ones that lie close to class boundaries.

- Data Augmentation for Diversity Enhancement: The approach also applies data augmentation, including mixed-label augmentation such as CutMix and automated augmentation such as AutoAugment. Augmentation further increases the diversity of the samples in memory, which helps the model generalize across transitions between tasks.

- Experimental Validation Across Benchmarks: The method is evaluated on MNIST, CIFAR10, CIFAR100, and ImageNet. The empirical results show significant accuracy improvements in blurry continual learning setups over existing state-of-the-art methods, supporting RM's approach to memory management in CL scenarios.

- Robustness in Blurry and Disjoint CIL Setups: Beyond the primary focus on blurry-CIL, RM also performs well under traditional disjoint-CIL setups, suggesting its broader applicability.
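
The uncertainty-based selection described in the first contribution can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: uncertainty is approximated by how often the top-1 prediction disagrees with the most common prediction across perturbed copies, and memory slots are filled by taking evenly spaced samples along the uncertainty ranking. The function names `uncertainty_from_predictions` and `select_diverse` are hypothetical, and the predictions are assumed to have been computed elsewhere by running the model on augmented inputs.

```python
import numpy as np

def uncertainty_from_predictions(pred_labels, num_classes):
    """Estimate per-sample uncertainty from predictions on perturbed copies.

    pred_labels: (N, T) integer array; entry [i, t] is the top-1 class the
    model predicted for the t-th perturbed copy of sample i.
    Returns an (N,) array; 0.0 means every perturbed copy got the same label.
    """
    pred_labels = np.asarray(pred_labels)
    n, t = pred_labels.shape
    counts = np.zeros((n, num_classes), dtype=int)
    for c in range(num_classes):
        counts[:, c] = (pred_labels == c).sum(axis=1)
    # One minus the share of the most frequently predicted class.
    return 1.0 - counts.max(axis=1) / t

def select_diverse(uncertainty, k):
    """Pick k sample indices evenly spaced along the uncertainty ranking."""
    order = np.argsort(uncertainty, kind="stable")
    if k >= len(order):
        return order.tolist()
    # Evenly spaced positions from the most robust to the most fragile sample.
    positions = np.linspace(0, len(order) - 1, num=k).round().astype(int)
    return order[positions].tolist()

# Usage: four samples, each with four perturbed copies, three classes.
preds = [[0, 0, 0, 0], [0, 1, 0, 1], [0, 1, 2, 2], [1, 1, 1, 0]]
u = uncertainty_from_predictions(preds, num_classes=3)
memory_indices = select_diverse(u, k=2)
```

Taking evenly spaced samples rather than only the most or least certain ones is what keeps both robust and fragile samples in memory. In practice such a selection would presumably be run separately for each class so that the memory stays balanced, which this sketch leaves out.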

Implications and Future Directions

Rainbow Memory's advantage under blurry task boundaries has significant implications for real-world applications, where data streams with overlapping class distributions are common. By managing memory with a focus on diversity, such methods can better maintain performance across evolving tasks and mitigate catastrophic forgetting.

The use of data augmentation, particularly automated policies such as AutoAugment, is a compelling direction for improving continual learning systems. This work could be extended to investigate other automated augmentation methods or alternative uncertainty measures suited to more advanced neural architectures.

Future work could address resource constraints in embedded systems, where maintaining a large episodic memory is challenging. Combining RM with advances in edge computing and memory-efficient algorithms might broaden its applicability.

Conclusion

In sum, "Rainbow Memory: Continual Learning with a Memory of Diverse Samples" makes a significant contribution to continual learning research by presenting a practical and effective strategy for mitigating catastrophic forgetting in complex, realistic learning scenarios. It provides a solid foundation for further work on learning from evolving data streams.