Autonomous, Bidirectional, and Iterative Language Modeling for Scene Text Recognition

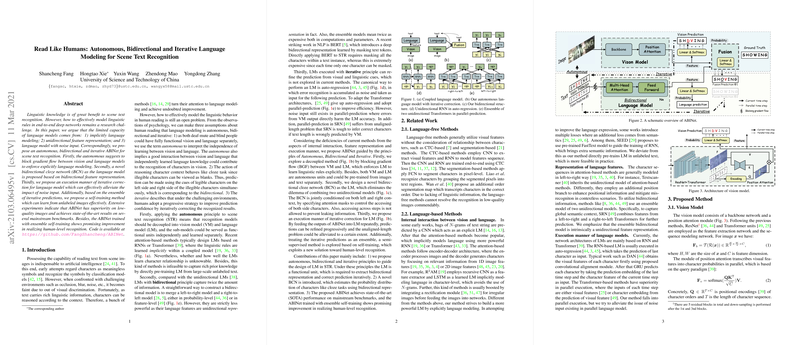

The paper "Read Like Humans: Autonomous, Bidirectional and Iterative Language Modeling for Scene Text Recognition" addresses the challenge of incorporating linguistic knowledge effectively into scene text recognition networks. Recognizing text under difficult conditions, such as occluded or low-quality images, remains an open problem in computer vision. The paper proposes ABINet, a framework that takes an autonomous, bidirectional, and iterative (ABI) approach to scene text recognition by modeling linguistic rules explicitly.

Key Contributions

- Autonomous Modeling: The authors identify the limitations of implicit language modeling and propose decoupling the vision model from the language model. By blocking gradient flow between the vision and language components, ABINet forces the language model to learn linguistic patterns explicitly. This decoupling also allows the language model to be pre-trained independently on large-scale datasets, strengthening it without bias from the vision model.

- Bidirectional Representation: A novel bidirectional cloze network (BCN) serves as the language model. Unlike unidirectional models, which see context from only one side, BCN conditions each character prediction on both the preceding and the succeeding characters in the text sequence, giving it richer context for inference.

- Iterative Correction: To limit the impact of noisy input on the language model, ABINet feeds its predictions back through the language model and refines them over several iterations, so that errors in the initial prediction carry less weight. This progressive refinement matters most when the image is visually ambiguous.

By combining these principles, ABINet mirrors the way humans read, drawing on both visual and linguistic cues to improve accuracy.
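The interplay of the three principles can be illustrated with a toy sketch. This is not ABINet's implementation: the real model uses neural networks and a learned fusion of visual and linguistic features, whereas the code below replaces the language model with a lookup over a small hypothetical lexicon and replaces fusion with a simple confidence threshold. The names `LEXICON`, `cloze_predict`, and `refine` are assumptions made for illustration only. The toy language model sees only character strings, never image features, which loosely mirrors the autonomous decoupling.

```python
from typing import List

# Hypothetical toy vocabulary; a real language model is learned, not a word list.
LEXICON = ["house", "horse", "mouse", "hotel"]


def cloze_predict(chars: str, pos: int, lexicon: List[str]) -> str:
    """Predict the character at `pos` from every OTHER position.

    The position being predicted is masked out, so the guess depends on both
    left and right context (a cloze-style, bidirectional prediction).
    """
    best_char, best_score = chars[pos], -1
    for word in lexicon:
        if len(word) != len(chars):
            continue
        # Count agreements with the context, skipping the masked position.
        score = sum(
            1
            for i, (a, b) in enumerate(zip(word, chars))
            if i != pos and a == b
        )
        if score > best_score:
            best_char, best_score = word[pos], score
    return best_char


def refine(
    vision_pred: str,
    vision_conf: List[float],
    lexicon: List[str] = LEXICON,
    iterations: int = 3,
    threshold: float = 0.5,
) -> str:
    """Iteratively correct a noisy vision prediction with the toy cloze model.

    Fusion here is an assumed stand-in: keep the vision character where the
    vision model is confident, otherwise take the language model's character.
    """
    current = vision_pred
    for _ in range(iterations):
        # The language model re-reads the current estimate, not the image.
        lm_pred = "".join(
            cloze_predict(current, i, lexicon) for i in range(len(current))
        )
        fused = "".join(
            v if c >= threshold else l
            for v, c, l in zip(vision_pred, vision_conf, lm_pred)
        )
        if fused == current:  # converged, no further corrections
            break
        current = fused
    return current
```

For example, `refine("hxuse", [0.9, 0.2, 0.9, 0.9, 0.9])` returns `"house"`: the low-confidence second character is replaced using context from both sides, and the second pass confirms that nothing else changes.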

Experimental Validation

Extensive experiments demonstrate ABINet's effectiveness across multiple datasets, including challenging benchmarks such as IC15, SVTP, and CUTE80. The model consistently achieves state-of-the-art performance and does particularly well on low-quality inputs. Notably, ABINet trained with ensemble self-training on unlabeled data shows significant further gains, highlighting the potential of semi-supervised learning.

The paper presents detailed ablation studies that isolate the contribution of each novel component, such as the bidirectional cloze network and the iterative strategy. For example, the autonomous approach outperforms models that learn language implicitly, because it allows independent and explicit linguistic learning, while the bidirectional representation captures additional context and improves recognition accuracy.

Implications and Future Directions

These performance improvements suggest that ABINet's framework could be applied to other sequential prediction tasks in which context plays a vital role. Additionally, pre-training language models on large unlabeled datasets is a promising direction for strengthening the linguistic components of computer vision systems.

Looking forward, this research may also inspire work on integrating more sophisticated language models and on exploring unsupervised learning paradigms. By leveraging large unlabeled datasets, future work could extend what is achievable in scene text recognition and open the way to new applications.

Overall, ABINet provides a robust foundation for future advancements in scene text recognition by aligning machine reading strategies closely with human capabilities.