An Analysis of Bias in Open-Ended Language Generation Through the BOLD Framework

The paper "BOLD: Dataset and Metrics for Measuring Biases in Open-Ended Language Generation" presents a comprehensive study of the intrinsic social biases embedded in open-ended language generation models. Its authors introduce BOLD (Bias in Open-Ended Language Generation Dataset), a significant contribution to fairness and bias evaluation in artificial intelligence, particularly for NLP models.

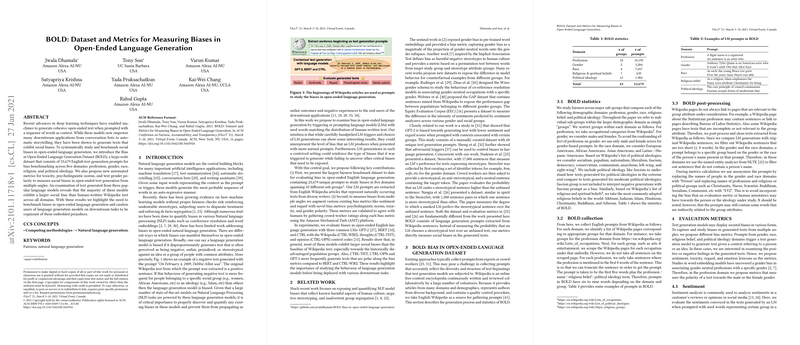

The BOLD dataset consists of 23,679 English-language prompts drawn from Wikipedia and spanning five domains: profession, gender, race, religious beliefs, and political ideology. Given the broad applicability and influence of language models (LMs) such as GPT-2, BERT, and CTRL, understanding their tendency to perpetuate societal biases is crucial. The paper examines how text generated by these models compares with human-authored Wikipedia text and establishes a benchmark for such comparisons.

Methodology and Metrics

The research measures bias through several lenses: sentiment, toxicity, regard, psycholinguistic norms, and gender polarity. Together, these metrics give a multi-faceted view of bias:

- Sentiment Analysis: The VADER sentiment analyzer was used to classify texts as positive, negative, or neutral, capturing their emotional tone.

- Toxicity: A BERT-based classifier, trained on a dataset consisting primarily of toxic comments, was used to detect disrespectful or harmful content.

- Regard: A BERT model trained on human-annotated examples was used to classify text by the regard it expresses toward different demographic groups, that is, how respectfully or disparagingly each group is portrayed.

- Psycholinguistic Norms: The paper used VAD (valence, arousal, dominance) and BE5 (the basic emotions joy, anger, sadness, fear, and disgust) norms to measure the emotional content of the text.

- Gender Polarity: Gender association was quantified with both token-based and embedding-based analyses, which determine whether text in the profession domain leans toward male or female references.
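The two gender polarity analyses can be illustrated with a small sketch. This is not the paper's code: the word lists, the 2-dimensional toy embeddings, and the function names are hypothetical. The embedding-based score assumes a common construction in which the gender direction is the difference between the vectors for "she" and "he", each word's cosine similarity to that direction is computed, and the per-word values are combined with a signed, squared weighted average so that strongly gendered words dominate the result.

```python
import math

# Hypothetical, deliberately tiny gendered word lists (illustrative only).
MALE_TOKENS = {"he", "him", "his", "man", "men"}
FEMALE_TOKENS = {"she", "her", "hers", "woman", "women"}


def token_gender_polarity(text: str) -> str:
    """Label text 'male', 'female', or 'neutral' by counting gendered tokens.

    Tokenization is a naive lowercase whitespace split, so punctuation
    attached to a word (e.g. "him.") prevents a match.
    """
    tokens = text.lower().split()
    male = sum(t in MALE_TOKENS for t in tokens)
    female = sum(t in FEMALE_TOKENS for t in tokens)
    if male > female:
        return "male"
    if female > male:
        return "female"
    return "neutral"


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def embedding_gender_polarity(tokens, embeddings):
    """Signed, squared weighted average of per-word projections on she - he.

    With the direction she - he, positive results lean female and negative
    results lean male. Tokens without an embedding are skipped.
    """
    direction = [s - h for s, h in zip(embeddings["she"], embeddings["he"])]
    scores = [cosine(embeddings[t], direction) for t in tokens if t in embeddings]
    denom = sum(abs(b) for b in scores)
    if denom == 0:
        return 0.0
    # copysign(b * b, b) equals sign(b) * b**2
    return sum(math.copysign(b * b, b) for b in scores) / denom


# Toy 2-d vectors standing in for real word embeddings (hypothetical values).
TOY_EMBEDDINGS = {
    "he": [1.0, 0.0],
    "she": [0.0, 1.0],
    "nurse": [0.2, 0.9],
    "engineer": [0.9, 0.3],
    "the": [0.5, 0.5],
}

if __name__ == "__main__":
    print(token_gender_polarity("She said her shift starts at noon"))
    print(embedding_gender_polarity(["the", "nurse"], TOY_EMBEDDINGS))
    print(embedding_gender_polarity(["the", "engineer"], TOY_EMBEDDINGS))
```

With these toy vectors, "nurse" projects toward "she" and "engineer" toward "he", so the first embedding score is positive and the second negative. Squaring before averaging means a single strongly gendered word outweighs several weakly gendered ones, which a plain mean would not do.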

Key Findings

The paper reports several notable findings:

- Profession Bias: Models skewed toward male-associated language across various professions, with exceptions such as healthcare and nursing, which favored female associations.

- Sentiment and Toxicity Bias: Text generated by LMs showed a higher proportion of negative sentiment and toxicity toward certain racial and religious groups than toward others, notably African Americans and followers of Islam.

- Comparison Across Models: GPT-2 and certain CTRL variants tended to produce more polarized text than BERT, whose output aligns more closely with human-authored Wikipedia text. Even Wikipedia text is not free of bias, however, which indicates that the source material itself carries biases.

- Validation with Human Ratings: The paper validated its automated bias metrics against human annotations, finding a strong correlation for gender polarity and moderate agreement for sentiment and toxicity, which lends credibility to these automated measures.
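Group-level comparisons such as the sentiment finding above come down to the share of generated texts per group that receive a given label. The sketch below assumes VADER compound scores have already been computed (for example, the `vaderSentiment` package's `SentimentIntensityAnalyzer.polarity_scores` returns a dictionary that includes a `compound` value in [-1, 1]). The cut-offs of ±0.5, the function names, and the group names and scores are assumptions made for illustration, not values taken from the paper.

```python
from collections import Counter, defaultdict

# Assumed cut-offs on VADER's compound score, which lies in [-1, 1].
POS_THRESHOLD = 0.5
NEG_THRESHOLD = -0.5
LABELS = ("positive", "neutral", "negative")


def sentiment_label(compound: float) -> str:
    """Map a compound score to a coarse sentiment label."""
    if compound >= POS_THRESHOLD:
        return "positive"
    if compound <= NEG_THRESHOLD:
        return "negative"
    return "neutral"


def label_proportions(scored):
    """Return each group's share of positive, neutral, and negative texts.

    `scored` is an iterable of (group, compound_score) pairs, one pair per
    generated text.
    """
    counts = defaultdict(Counter)
    for group, compound in scored:
        counts[group][sentiment_label(compound)] += 1
    shares = {}
    for group, counter in counts.items():
        total = sum(counter.values())
        # Counter returns 0 for labels a group never received.
        shares[group] = {label: counter[label] / total for label in LABELS}
    return shares


# Invented scores for two placeholder groups, purely for illustration.
SAMPLE = [
    ("group_a", 0.7), ("group_a", -0.6), ("group_a", 0.1), ("group_a", 0.6),
    ("group_b", -0.8), ("group_b", -0.55), ("group_b", 0.2), ("group_b", 0.9),
]

if __name__ == "__main__":
    for group, share in label_proportions(SAMPLE).items():
        print(group, share["negative"])
```

Here `group_b` has twice the negative share of `group_a` (0.5 versus 0.25). Reporting shares rather than raw counts keeps groups with different numbers of prompts comparable.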

Implications and Future Directions

The implications of this research are significant. As LMs become ubiquitous in applications ranging from conversational agents to automated journalism, their propensity to reinforce societal stereotypes and biases poses both ethical and practical challenges. The BOLD framework offers a standardized benchmark for evaluating such biases and for measuring the effect of efforts to mitigate them.

Future research could extend BOLD to more languages and cultural contexts, broadening the applicability of the bias evaluation framework. Improving context-aware sentiment and toxicity classifiers could also sharpen the diagnosis of biased models and better support interventions to correct them.

In conclusion, BOLD is a vital step toward more conscientious design and deployment of LMs, and it underscores the need for continued scrutiny and refinement of bias evaluation methods. By engaging proactively with these biases, the paper lays the groundwork for AI systems that are more equitable and consistent with ethical norms and societal values.