An Expert Overview of CLEAR: Contrastive Learning for Sentence Representation

The paper presents an innovative approach to sentence representation learning through contrastive learning, titled CLEAR (Contrastive LEArning for sentence Representation). The research focuses on improving sentence representation by adopting contrastive learning techniques enhanced with various augmentation strategies, specifically at the sentence level. These techniques include word and span deletion, reordering, and synonym substitution, which are explored in the context of enhancing pre-trained LLMs' ability to grasp and represent sentence semantics robustly and accurately.

Methodology and Framework

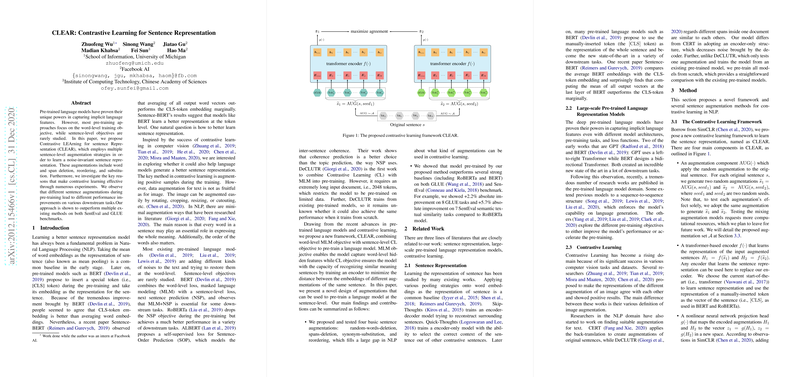

The authors propose CLEAR as a framework that integrates both word-level and sentence-level learning objectives. The framework takes inspiration from advances in contrastive learning in computer vision and examines its applicability to NLP. The main framework comprises:

- Augmentation Component: Employs random augmentations to generate two augmentations for each sentence, designed to make models robust to noise while preserving semantics.

- Encoder with Transformer Architecture: Utilizes the current state-of-the-art transformer architecture to capture nuanced sentence representations.

- Nonlinear Projection Head: Aids in mapping the output of the encoder to a representation space where contrastive learning is applied.

- Contrastive Loss Function: Optimizes the model by maximizing agreement between different augmentations of the same sentence, helping achieve sentence-level objectives.

Empirical Evaluation and Results

The proposed CLEAR framework was evaluated against established benchmarks, such as SentEval and GLUE. Noteworthy findings include:

- Performance on GLUE: Models pre-trained using the CLEAR framework showed superior performance across multiple tasks in the GLUE benchmark, achieving up to +2.2% improvement over RoBERTa on several tasks. Specifically, the models exhibited strong gains on tasks involving language inference and sentence similarity, corroborating the effectiveness of sentence-level representation.

- SentEval Benchmark: In semantic textual similarity tasks, CLEAR demonstrated substantial improvements, with an average increase of +5.7% compared to baseline RoBERTa models. This emphasizes CLEAR's contribution to enhancing semantic understanding in NLP models.

Implications and Future Work

The research highlights the impact of employing sentence-level objectives in pre-training models, suggesting practical implications for improved NLP applications, particularly in tasks that require nuanced sentence-level comprehension. Scientifically, it signifies the potential of contrastive learning in broadening the applicability and robustness of LLMs.

The findings also suggest avenues for future research. This includes fine-tuning augmentation techniques tailored for specific tasks or integrating adaptive meta-learning to dynamically adjust augmentation strategies. Further exploration of hyperparameter optimization could enhance the adaptability and performance of CLEAR across diverse datasets.

Overall, the contrastive learning framework CLEAR represents a significant advancement in sentence representation methodologies, offering a comprehensive strategy to leverage augmentation and contrastive learning for developing robust, versatile NLP models. The approach not only validates the benefit of mixing word-level and sentence-level training objectives but also sets a foundation for the next generation of NLP tasks requiring deep semantic understanding.