An Essay on SpAtten: Efficient Sparse Attention Architecture with Cascade Token and Head Pruning

The paper, "SpAtten: Efficient Sparse Attention Architecture with Cascade Token and Head Pruning," presents a domain-specific accelerator designed specifically for attention mechanisms, which are fundamental to contemporary NLP models like Transformer, BERT, and GPT-2. The work acknowledges the inefficiencies in traditional hardware platforms, such as CPUs and GPUs, when executing attention inference partially due to complex data movement and low arithmetic intensity.

Key Contributions

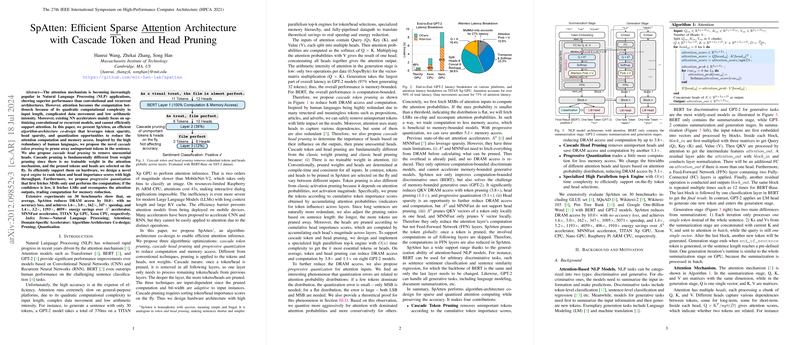

The authors introduce several algorithmic optimizations that focus on reducing computation and memory access through innovative techniques:

- Cascade Token and Head Pruning: This method leverages the inherent redundancy in human languages, pruning unimportant tokens and heads across layers. This pruning is dynamic and input-dependent, distinguishing it from traditional weight pruning as there is no trainable weight in attention mechanisms. These techniques decrease DRAM access significantly, by up to 3.8 times for tokens and 1.1 times for heads on GPT-2 models.

- Progressive Quantization: This innovation trades computation for reduced memory access by initially fetching only the most significant bits (MSBs) and utilizing lower bit representations for inputs whose attention probability distributions are dominated by a few tokens. This approach offers an additional reduction of 5.1 times in memory access.

- High Parallelism top-k Engine: To support on-the-fly token and head selection, a novel top-k engine with time complexity is designed. It efficiently ranks token and head importance scores with high throughput, translating theoretical savings to real speedup and energy reduction.

Numerical Results and Performance

The paper provides substantial numerical evidence of the efficiency gains achieved by SpAtten. Evaluations conducted on 30 benchmarks, including datasets like GLUE and SQuAD, show that SpAtten outperforms state-of-the-art accelerators such as A3 and MNNFast. This is evidenced by an average DRAM access reduction of 10 times with no degradation in accuracy. Furthermore, SpAtten achieves up to 162, 347, 1095, and 5071 times speedup over TITAN, Xeon, Nano, and Raspberry Pi ARM, respectively.

Implications and Speculations for Future Developments

Practically, SpAtten enables NLP models to be deployed efficiently on resource-constrained devices, thus expanding the applicability of these models in mobile and edge computing environments. Theoretically, the proposed co-design illustrates the potential of integrating algorithmic sparsity with hardware specialization to address the computational demands of modern NLP.

The idea of cascade pruning could inspire further research into dynamic sparsity mechanisms in other domains, such as computer vision, where structured sparsity might further aid acceleration. Additionally, the novel approach of progressive quantization could be expanded to other types of neural network architectures to optimize memory usage without sacrificing performance.

Future developments in AI may see an increased focus on creating co-designed systems tailored for specific tasks or applications. As models continue to grow in complexity and size, the need for efficient, specialized hardware accelerators like SpAtten will only become more pronounced.

Conclusion

In conclusion, SpAtten offers a robust solution to the inefficiencies faced by attention-based NLP models on conventional platforms. By introducing innovative pruning and quantization techniques, SpAtten not only closes the gap between theoretical efficiency and practical performance but also sets a precedent for future research into hardware-efficient model designs.