Document-Level Relation Extraction with Adaptive Thresholding and Localized Context Pooling

This essay reviews the contributions of the paper "Document-Level Relation Extraction with Adaptive Thresholding and Localized Context Pooling." The paper introduces two techniques for document-level relation extraction (RE), adaptive thresholding and localized context pooling, each aimed at a difficulty specific to cross-sentence relational data. Unlike sentence-level RE, document-level RE must classify relations among many entity pairs across an entire document (the multi-entity problem), and a single pair may hold several relation types at once (the multi-label problem).

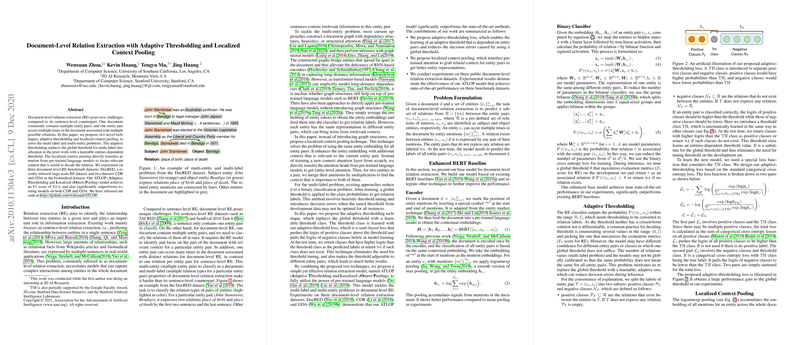

Adaptive thresholding replaces the conventional global threshold used in multi-label classification. Instead of relying on a single heuristically chosen cutoff that may not suit every entity pair, the model learns a threshold specific to each entity pair. This is operationalized through an adaptive-threshold loss function that, for each entity pair, ranks the positive relation classes above the threshold and the negative classes below it. Because the loss is rank-based, the threshold is learned together with the classifier, which is intended to reduce the errors a fixed global threshold introduces and to improve both precision and recall at inference.
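The idea can be illustrated with a minimal sketch for a single entity pair. It assumes the learnable threshold is modelled as an extra class logit (index 0 here) and that the loss is the sum of two restricted softmax terms, one over the positives plus the threshold and one over the negatives plus the threshold. The function names `adaptive_threshold_loss` and `predict` are hypothetical, and the code is an illustration of the technique rather than the authors' implementation.

```python
import math


def log_softmax_over(logits, indices, target):
    """Log-probability of `target` under a softmax restricted to `indices`."""
    m = max(logits[i] for i in indices)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(logits[i] - m) for i in indices))
    return logits[target] - log_z


def adaptive_threshold_loss(logits, positives, th=0):
    """Loss for one entity pair; logits[th] is the assumed threshold logit."""
    classes = [i for i in range(len(logits)) if i != th]
    pos = [i for i in classes if i in positives]
    neg = [i for i in classes if i not in positives]
    # Term 1: every positive relation should outrank the threshold.
    loss_pos = -sum(log_softmax_over(logits, pos + [th], r) for r in pos)
    # Term 2: the threshold should outrank every negative relation.
    loss_neg = -log_softmax_over(logits, neg + [th], th)
    return loss_pos + loss_neg


def predict(logits, th=0):
    """Return the relation classes whose logit exceeds the threshold logit."""
    return [i for i in range(len(logits)) if i != th and logits[i] > logits[th]]
```

At inference, `predict` needs no tuned cutoff: each pair is compared against its own threshold logit, and a pair whose relation logits all fall below it is assigned no relation.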

Localized context pooling addresses a different problem: entity embeddings obtained by pooling over the whole document are noisy or ambiguous, because the same embedding is used for every pair an entity takes part in. The method enhances each entity-pair embedding with context selected by the attention weights of the pre-trained transformer encoder. It multiplies the attention weights of the subject and object entities, so that tokens attended to by both receive high weight, and uses the result to pool the shared context most relevant to the relation between them. In this way it targets the multi-entity problem directly.
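The pooling step described above can be sketched as follows. This is a simplified, single-head illustration: it assumes each entity already has one attention distribution over the document's tokens, whereas a real transformer would supply one per attention head. The function name `localized_context` and the zero-vector fallback are assumptions made for the example.

```python
def localized_context(subj_attn, obj_attn, token_embeddings):
    """Pool token embeddings using the product of subject and object attention.

    subj_attn, obj_attn: attention weights over tokens, one float per token.
    token_embeddings: one embedding (list of floats) per token.
    """
    # Tokens that matter to both entities get a large joint weight.
    joint = [s * o for s, o in zip(subj_attn, obj_attn)]
    total = sum(joint)
    if total > 0:
        weights = [j / total for j in joint]  # renormalize to sum to 1
    else:
        # Assumption for this sketch: no shared context yields a zero vector.
        weights = [0.0] * len(joint)
    dim = len(token_embeddings[0])
    # Weighted sum of token embeddings, computed dimension by dimension.
    return [
        sum(w * tok[d] for w, tok in zip(weights, token_embeddings))
        for d in range(dim)
    ]


# Only the middle token is attended to by both entities,
# so the pooled context equals that token's embedding.
context = localized_context(
    [0.5, 0.5, 0.0],
    [0.0, 0.5, 0.5],
    [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
)
```

The resulting context vector would then be combined with the subject and object embeddings before classification, so each pair sees context specific to it rather than a single document-wide summary.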

Empirical evaluations on three benchmarks, DocRED, CDR, and GDA, show that the ATLOP (Adaptive Thresholding and Localized cOntext Pooling) model outperforms earlier models, including BERT-based baselines and graph-based models, by a considerable margin. The improved F1 scores on all three benchmarks indicate that the model handles both the multi-entity and multi-label challenges well.

The findings have practical value for applications that require fine-grained information extraction, such as large-scale text mining and knowledge graph construction. The demonstrated improvement suggests a shift in how document-level relation extraction is approached, particularly in handling complex interrelations within long texts.

These advances also have implications for future work on AI-driven information extraction. Variants of adaptive thresholding and context-specific attention pooling could lead to more sophisticated architectures capable of reasoning across complex, heterogeneous datasets. Refinements to the localized pooling mechanism may also help reduce model errors in relation-heavy domains.

In conclusion, the paper makes a substantial contribution to document-level RE, achieving notable improvements with two targeted techniques. Its methods open avenues for further work on contextual comprehension of text at the document level.