Overview of the Original Chinese Natural Language Inference (OCNLI) Dataset

The paper "OCNLI: Original Chinese Natural Language Inference" introduces the first large-scale, human-elicited natural language inference (NLI) dataset for Chinese. It fills a notable gap in Chinese NLI resources with a corpus built from original Chinese text. It does not translate English datasets such as SNLI or MNLI, which has been a common but flawed way of extending NLI to other languages.

Dataset Composition and Methodology

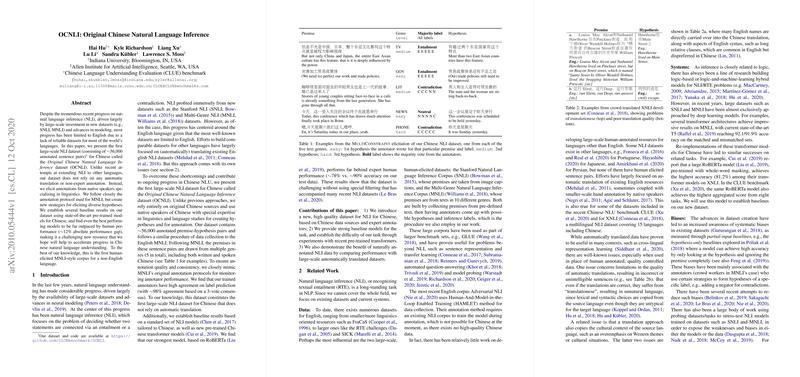

OCNLI comprises approximately 56,000 annotated premise-hypothesis pairs, each labeled as entailment, neutral, or contradiction. The premises are drawn from five genres: government documents, news, literature, TV talk shows, and telephone conversations. This multi-genre design mirrors that of MNLI and is meant to expose models to diverse linguistic challenges.
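To make the composition concrete, here is a minimal Python sketch of one record and a per-genre tally. The field names, the genre codes (`gov`, `news`, `lit`, `tv`, `phone`), and the example sentences are hypothetical illustrations, not OCNLI's actual file format. The released data should be checked for its real schema.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Label(Enum):
    ENTAILMENT = "entailment"
    NEUTRAL = "neutral"
    CONTRADICTION = "contradiction"


# Hypothetical genre codes for the five sources; OCNLI's real values may differ.
GENRES = {"gov", "news", "lit", "tv", "phone"}


@dataclass(frozen=True)
class NLIPair:
    premise: str      # drawn from one of the five genres
    hypothesis: str   # written by an annotator
    label: Label
    genre: str

    def __post_init__(self) -> None:
        # Reject genres outside the five sources described in the text.
        if self.genre not in GENRES:
            raise ValueError(f"unknown genre: {self.genre!r}")


def genre_counts(pairs: list[NLIPair]) -> Counter:
    """Count how many pairs come from each genre."""
    return Counter(p.genre for p in pairs)


if __name__ == "__main__":
    # Toy records for illustration only.
    pairs = [
        NLIPair("他今天在家办公。", "他今天没有去办公室。", Label.ENTAILMENT, "news"),
        NLIPair("他今天在家办公。", "他生病了。", Label.NEUTRAL, "news"),
        NLIPair("会议于周一举行。", "会议在周五举行。", Label.CONTRADICTION, "gov"),
    ]
    print(genre_counts(pairs))
```

Validating the genre at construction time keeps malformed records out of any per-genre analysis.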

Significantly, the hypotheses and labels are produced by native Chinese speakers with linguistic expertise, which the authors argue supports both quality and cultural relevance. This contrasts with earlier datasets built by automatic translation. Those datasets often suffer from translationese, meaning phrasing that is uncharacteristic of the target language, and from cultural biases carried over from the source language.

Novel Approaches in Data Annotation

The authors go beyond the MNLI protocol with a multi-hypothesis elicitation strategy, in which annotators write several hypotheses for each premise-label pair. The goal is more diverse and less predictable inferences than a single hypothesis per label would yield. This should reduce the biases that arise when annotators fall back on simple, formulaic sentence constructions. The authors report that data collected this way remains reliable, with high inter-annotator agreement.
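The elicitation and agreement steps can be sketched as follows. The sketch makes two hypothetical assumptions. First, annotators write three hypotheses per premise-label pair. Second, validation follows the MNLI style: each pair gets five labels, and a gold label is assigned only if at least three agree. The function names and thresholds are illustrative and are not taken from the paper.

```python
from collections import Counter
from itertools import product

LABELS = ("entailment", "neutral", "contradiction")


def elicitation_tasks(premise_ids, hypotheses_per_label=3):
    """List one writing task per (premise, label, slot).

    Under multi-hypothesis elicitation an annotator fills several slots
    for the same premise-label pair instead of writing a single hypothesis.
    """
    return list(product(premise_ids, LABELS, range(hypotheses_per_label)))


def gold_label(votes, min_agree=3):
    """Return the most common label if at least `min_agree` votes match, else None."""
    label, count = Counter(votes).most_common(1)[0]
    return label if count >= min_agree else None


def agreement_rate(all_votes, min_agree=3):
    """Fraction of pairs that receive a gold label under the agreement threshold."""
    labeled = sum(gold_label(v, min_agree) is not None for v in all_votes)
    return labeled / len(all_votes)
```

With two premises and three hypotheses per label, `elicitation_tasks` yields 2 × 3 × 3 = 18 writing tasks. Pairs without a majority label are the ones a validation pass would flag or discard.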

Baseline Establishment and Performance Analysis

The paper evaluates several NLI models on OCNLI. These include non-transformer models (CBOW, biLSTM, ESIM) and the then state-of-the-art transformer models BERT and RoBERTa. RoBERTa performs best, yet it still trails human performance by about 12 percentage points (78.2% vs. 90.3%). This gap shows that the dataset remains challenging and that Chinese language understanding models have considerable room for improvement.
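The gap follows directly from the two reported accuracies. Restated as error rates, RoBERTa makes roughly twice as many errors as humans. This is a derived view of the same two numbers, not a figure reported in the paper:

```latex
\[
\begin{aligned}
\Delta &= \mathrm{Acc}_{\text{human}} - \mathrm{Acc}_{\text{RoBERTa}} = 90.3\% - 78.2\% = 12.1 \text{ percentage points} \\
\mathrm{Err}_{\text{RoBERTa}} &= 100\% - 78.2\% = 21.8\%, \qquad \mathrm{Err}_{\text{human}} = 100\% - 90.3\% = 9.7\% \\
\frac{\mathrm{Err}_{\text{RoBERTa}}}{\mathrm{Err}_{\text{human}}} &= \frac{21.8}{9.7} \approx 2.2
\end{aligned}
\]
```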

A further comparison pits models trained on OCNLI against models trained on the Chinese portion of XNLI, which is translated from English. When evaluated on OCNLI, the OCNLI-trained models perform better. The authors take this as evidence of the dataset's quality and of the benefit of native-language, human-elicited data over translated resources.
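The comparison holds the test set fixed and varies only the training data. The following minimal sketch assumes each model's predictions on the OCNLI test set are already available as label lists. The function names and the source keys are hypothetical.

```python
def accuracy(predictions, gold):
    """Fraction of predictions that match the gold labels."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold labels differ in length")
    if not gold:
        raise ValueError("empty evaluation set")
    return sum(p == g for p, g in zip(predictions, gold)) / len(gold)


def compare_training_sources(predictions_by_source, gold):
    """Score each training source's predictions against one shared test set.

    Returns (source, accuracy) pairs sorted from best to worst.
    """
    scores = {src: accuracy(preds, gold) for src, preds in predictions_by_source.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)
```

Every source is scored against the same gold labels. Any difference in accuracy can therefore be attributed to the training data rather than to the evaluation set.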

Implications and Future Directions

OCNLI is an important step toward better Chinese natural language understanding (NLU) and more robust models. Beyond improving performance metrics, the dataset and its elicitation methodology may also inform how NLI datasets are built for other languages.

Future directions suggested in the paper include adversarial filtering and adversarial learning. These could improve both dataset quality and model robustness by addressing known problems such as hypothesis-only bias, where a model can predict the label from the hypothesis alone. OCNLI also provides a basis for work on probing sentence representations, transfer learning, and bias reduction in Chinese NLU, research that takes the linguistic and cultural specifics of Chinese into account.
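A common way to measure hypothesis-only bias is to train a classifier that never sees the premise. If its accuracy is well above the majority-class rate, the dataset contains artifacts. The sketch below uses character-unigram Naive Bayes with Laplace smoothing. This model and the function names are illustrative choices, not the paper's method. Characters serve as tokens because Chinese text is not whitespace-segmented. The toy data are hypothetical.

```python
import math
from collections import Counter, defaultdict


def train_hypothesis_only(hypotheses, labels, alpha=1.0):
    """Fit character-unigram Naive Bayes on hypotheses only; premises are never used."""
    label_counts = Counter(labels)
    char_counts = defaultdict(Counter)
    vocab = set()
    for hyp, lab in zip(hypotheses, labels):
        # Each non-space character is one token.
        chars = [c for c in hyp if not c.isspace()]
        char_counts[lab].update(chars)
        vocab.update(chars)
    return label_counts, char_counts, vocab, alpha


def predict_hypothesis_only(model, hypothesis):
    """Return the label with the highest posterior given the hypothesis alone."""
    label_counts, char_counts, vocab, alpha = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for lab, n in label_counts.items():
        # Laplace-smoothed denominator for this label's character distribution.
        denom = sum(char_counts[lab].values()) + alpha * len(vocab)
        score = math.log(n / total)  # log prior
        for c in hypothesis:
            if c in vocab:  # characters unseen in training are ignored
                score += math.log((char_counts[lab][c] + alpha) / denom)
        if score > best_score:
            best_label, best_score = lab, score
    return best_label


if __name__ == "__main__":
    # Toy data in which negation (不, 没有) co-occurs with contradiction.
    hyps = ["他不在家", "她没有来", "他在家", "她来了", "他可能在家", "她也许来了"]
    labs = ["contradiction", "contradiction", "entailment",
            "entailment", "neutral", "neutral"]
    model = train_hypothesis_only(hyps, labs)
    print(predict_hypothesis_only(model, "我不去"))  # → contradiction
```

On this toy data, the negation character 不 alone pushes an unseen hypothesis toward contradiction, and no premise is involved. This is exactly the kind of shortcut that adversarial filtering aims to remove.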

In summary, OCNLI supplies a much-needed resource for Chinese NLU and a benchmark for training and evaluating future models, supporting continued progress in AI research across diverse languages.