A Professional Overview of "CodeBLEU: a Method for Automatic Evaluation of Code Synthesis"

The paper "CodeBLEU: a Method for Automatic Evaluation of Code Synthesis" addresses the lack of an automatic evaluation metric suited to code synthesis. It proposes a metric designed to capture the syntactic and semantic properties of programming languages. The authors argue, with supporting evidence, that natural-language metrics such as BLEU fall short here because code has a stricter syntax and well-defined semantics that token overlap does not reflect. CodeBLEU augments the standard BLEU score with additional components that account for the structure and semantics of code.

Evaluation Challenges in Code Synthesis

The authors identify three main shortcomings of existing evaluation metrics when applied to code synthesis:

- N-gram Based BLEU Scores: While BLEU is effective for natural language, it fails to account for structural and logical aspects of programming languages. It emphasizes surface-level token matching, which may overlook syntactic errors or logical inaccuracies.

- Perfect Accuracy: This metric is overly strict because it demands an exact match with the reference, so it underrates outputs that are functionally equivalent but differ in minor syntactic or stylistic ways.

- Computational Accuracy: Although this assesses the functional behavior of code, it lacks general applicability across different languages and is dependent on specific runtime environments and inputs.
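The first two shortcomings can be made concrete with a small sketch. The helper names (`ngram_precision`, `exact_match`) and the three token sequences are illustrative assumptions, not taken from the paper, and the precision function is only the clipped n-gram core of BLEU, without the brevity penalty or the geometric mean over n.

```python
from collections import Counter


def ngram_precision(candidate, reference, n):
    """Clipped n-gram precision, the core quantity behind BLEU (simplified)."""
    cand = Counter(tuple(candidate[i:i + n]) for i in range(len(candidate) - n + 1))
    ref = Counter(tuple(reference[i:i + n]) for i in range(len(reference) - n + 1))
    total = sum(cand.values())
    if total == 0:
        return 0.0
    # Each candidate n-gram is credited at most as often as it occurs in the reference.
    matched = sum(min(count, ref[gram]) for gram, count in cand.items())
    return matched / total


def exact_match(candidate, reference):
    """Perfect accuracy for one sample: 1.0 only if every token is identical."""
    return float(candidate == reference)


# Hypothetical, pre-tokenized examples.
reference = "def add ( a , b ) : return a + b".split()
broken = "def add ( a , b ) : return a + +".split()    # does not parse
renamed = "def add ( x , y ) : return x + y".split()   # same behavior, new names

for name, cand in [("broken", broken), ("renamed", renamed)]:
    print(name, ngram_precision(cand, reference, 1), exact_match(cand, reference))
```

Under these assumptions the unparsable candidate gets the higher unigram precision, while the behaviorally equivalent candidate receives an exact-match score of zero.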

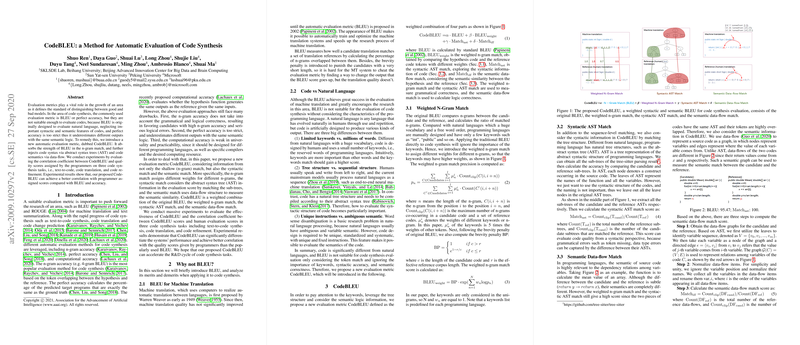

The CodeBLEU Metric

CodeBLEU enhances the traditional BLEU score by incorporating the following components:

- Weighted N-Gram Match: This feature adjusts the traditional n-gram matching with higher weights for critical programming keywords, reflecting their importance in code functionality.

- Syntactic AST Match: By comparing the abstract syntax trees (ASTs) of the candidate and reference code, CodeBLEU scores structural similarity and captures syntactic errors that n-gram matches may miss.

- Semantic Data-Flow Match: This component approximates semantic similarity by comparing how values flow between variables in the candidate and in the reference, reflecting the logical consistency of the code beyond mere token overlaps.

Empirical Validation

The authors validate the effectiveness of CodeBLEU through experiments on text-to-code synthesis, code translation, and code refinement tasks. The results show that CodeBLEU correlates more closely with human assessments than BLEU and perfect accuracy do. This suggests that it captures both syntactic and semantic correctness, offering a more robust way to evaluate code generation systems. Furthermore, the paper reports statistically significant differences among various systems’ scores, which supports CodeBLEU's reliability in telling systems apart.
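Agreement between a metric and human judgment is commonly quantified with a correlation coefficient. The sketch below computes Pearson correlation from scratch. The score lists are made-up placeholders chosen only to exercise the function. They are not results from the paper, and `metric_a` and `metric_b` are hypothetical names.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / math.sqrt(var_x * var_y)


# Placeholder data for illustration only (NOT from the paper):
# human ratings for five outputs and the scores two hypothetical metrics gave them.
human = [1, 2, 3, 4, 5]
metric_a = [0.2, 0.3, 0.5, 0.6, 0.9]
metric_b = [0.6, 0.2, 0.7, 0.3, 0.8]

print(pearson(human, metric_a), pearson(human, metric_b))
```

A metric whose scores rise and fall with the human ratings yields a coefficient near 1, which is the sense in which one metric can be said to agree with programmers better than another.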

Implications and Future Directions

The introduction of CodeBLEU has noteworthy implications for the evaluation of AI in programming tasks. A more comprehensive metric lets research and development in code synthesis be guided by scores that reflect syntactic and semantic correctness rather than surface similarity alone. This might accelerate progress in the area and help automated coding systems produce code that better matches what programmers expect.

Looking ahead, there is potential for further refinement of CodeBLEU, especially in enhancing the semantic analysis for more nuanced code structures. Additionally, exploring its applicability in diverse programming environments and languages could solidify its use as a standard evaluation tool in code synthesis. Such developments could further bridge the gap between human and machine understanding in programming contexts, fostering more sophisticated and reliable AI systems.