Overview of SemEval-2020 Task 12: Multilingual Offensive Language Identification in Social Media (OffensEval-2020)

The paper presents the results and methodology of SemEval-2020 Task 12, known as OffensEval-2020, which focused on the identification of offensive language in social media across multiple languages. Building on the framework established in OffensEval-2019, the task extended the research scope by introducing four new languages—Arabic, Danish, Greek, and Turkish—alongside English. The framework utilized the OLID schema’s hierarchical approach for offensive language annotation.

Task Formulation

OffensEval-2020 was structured into three subtasks per OLID's taxonomy for the English language and an overarching subtask for other languages:

- Subtask A: Offensive language identification.

- Subtask B: Categorization of offense types into targeted or untargeted.

- Subtask C: Identification of the offense target, whether an individual, group, or other entities.

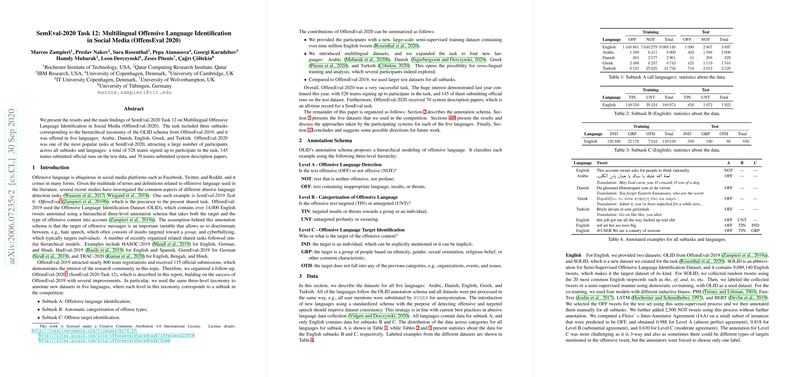

The task attracted substantial interest, with 528 registered teams, 145 of which submitted results. Moreover, 70 teams contributed system description papers.

Methodological Framework

The datasets utilized included a new semi-supervised dataset, SOLID, which offered over nine million English tweets, and multilingual datasets adhering to the OLID schema. Participants employed an array of machine learning models, with pre-trained Transformer models such as BERT and its variants, playing a central role. Numerous systems also explored cross-lingual approaches leveraging the multilingual setup.

Results and Performance

- Subtask A (English): The best performing model achieved an F1 score of 0.9204, primarily through Transformer ensembles. Notably, the competition saw a tight clustering of high F1 scores, indicating strong overall performance across submissions.

- Subtask B (English): The top model in this subtask recorded an F1 score of 0.7462 using a teacher-student architecture, showcasing the complexity and variety of approaches used.

- Subtask C (English): With an F1 score of 0.7145, the leading team also utilized knowledge distillation techniques, underlining a preference for sophisticated deep learning methods.

For the multilingual subtasks:

- Arabic, Danish, Greek, Turkish: The Arabic highest F1 score was 0.9017, Danish at 0.8119, Greek at 0.8522, and Turkish at 0.8258. The results highlight the potential of multilingual datasets and architectures, with most high-performing teams using Transformer-based models.

Implications and Future Directions

The strong performance across languages demonstrates the viability of multilingual offensive language identification and the efficacy of using large pre-trained models coupled with domain-specific fine-tuning. The successful integration of multiple languages offers promising avenues for further research into cross-lingual learning and domain transferability.

Future iterations of the task could explore underrepresented languages, the challenge of code-switching, and the dynamics across various social media platforms. Expanding the subtasks for non-English languages and improving datasets' richness and diversity could significantly contribute to advancing multilingual natural language processing.

In conclusion, OffensEval-2020 effectively advanced the field of abusive language detection by leveraging multilingual resources and complex model architectures, setting a foundation for future developments in addressing online offensive content across languages.