Overview of Active Preference-Based Gaussian Process Regression for Reward Learning

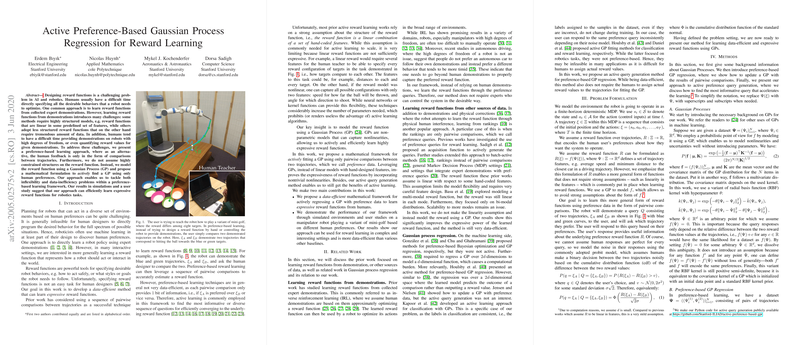

In the paper titled "Active Preference-Based Gaussian Process Regression for Reward Learning," the authors address a fundamental challenge in AI and robotics: the design of reward functions to guide desired robot behaviors. Traditional approaches often rely on structured models or require extensive data, which are not always viable due to the complexities involved in controlling robots with high degrees of freedom or quantifying reward values for demonstrations. The authors propose an innovative preference-based learning framework leveraging Gaussian Processes (GPs) to address these challenges effectively.

Core Contributions

The authors contribute to the field of reward learning through two primary innovations:

- Data-Efficient GP Framework: The paper introduces a mathematical framework to actively fit GPs using preference data gathered through pairwise comparisons of trajectories. This approach eschews the need for demonstrations or structured reward function assumptions, improving expressiveness and data efficiency.

- Empirical Validation: The proposed framework is validated through simulations and a user paper involving a manipulator robot performing a mini-golf task. The results suggest that the GP-based model captures complex reward functions more effectively and requires less data compared to traditional linear reward models.

Key Results

The empirical studies highlight several noteworthy results:

- Expressiveness: The GP model outperforms linear models in capturing complex, nonlinear reward configurations. When tested with both linear and polynomial reward functions, the GP model demonstrated superior adaptability and learning capability.

- Data Efficiency: By incorporating active query strategies, the GP model significantly reduced the data requirement to achieve comparable or superior performance relative to random query strategies.

- User Acceptance: In user studies, the GP-based approach yielded higher prediction accuracies and received more favorable feedback on task completion, signifying a closer alignment with human preferences.

Implications and Future Directions

The approach presented in this paper has significant implications for both practical applications and theoretical advancements in AI and robotics:

- Enhanced Human-Robot Interaction: By relying on preference data, the method aligns more closely with human intuitions and eases the specification of complex behaviors without reliance on extensive demonstrations.

- Scalability and Flexibility: The use of GPs allows the method to scale beyond the constraints typical of linear models, providing a robust mechanism for capturing nonlinearities intrinsic to real-world tasks.

- Future Research: Potential extensions include exploring learning from more complex input data such as rankings, integrating user uncertainty into the model, and developing strategies for feature learning in parallel with reward learning. Additionally, addressing computational challenges associated with high-dimensional spaces remains an open area for improvement.

In conclusion, the paper presents a significant step forward in leveraging Gaussian Processes for learning reward functions based on human preferences. The ability to actively and efficiently learn expressive models opens new avenues for robotic applications, enhancing their capability to understand and act according to nuanced human intents.