An Overview of SpellGCN: Enhancing Chinese Spelling Correction with Phonological and Visual Similarities

The paper presents SpellGCN, an approach to Chinese Spelling Check (CSC) that integrates phonological and visual similarity knowledge into pretrained language models. Because misspelled Chinese characters often share pronunciation or shape with their correct counterparts, SpellGCN encodes these similarities with a graph convolutional network (GCN) and uses them to improve CSC performance.

Methodology

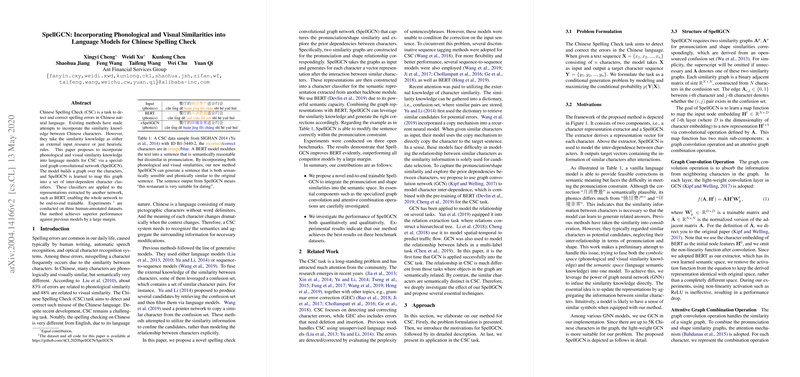

SpellGCN models character similarities explicitly instead of treating them as heuristic rules or relying solely on confusion sets. It builds two similarity graphs over the characters, one for pronunciation and one for shape. A specialized GCN runs over these graphs and produces a vector representation for each character, and these vectors inform the character classifier built on top of a pretrained language model such as BERT. Because the semantic representations extracted by BERT interact directly with the SpellGCN output, the whole model remains trainable end to end.
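The pipeline described above can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not the paper's implementation: the function names, the self-loop normalization, the plain averaging of the two graphs, and the residual sum are all choices made here for brevity, and random vectors stand in for BERT's hidden states and character embeddings.

```python
import numpy as np

def normalized_adjacency(num_chars, similar_pairs):
    """Symmetric adjacency with self-loops, scaled as D^-1/2 (A + I) D^-1/2.

    This is a common GCN convention and an assumption of this sketch.
    """
    adj = np.eye(num_chars)
    for i, j in similar_pairs:
        adj[i, j] = 1.0
        adj[j, i] = 1.0
    inv_sqrt_deg = 1.0 / np.sqrt(adj.sum(axis=1))
    return adj * inv_sqrt_deg[:, None] * inv_sqrt_deg[None, :]

def gcn_layer(adj_norm, features, weight):
    """One graph convolution: aggregate neighbors, apply a linear map, then ReLU."""
    return np.maximum(adj_norm @ features @ weight, 0.0)

def similarity_aware_logits(hidden, char_embeddings, graphs, weights):
    """Score every character at every position with graph-refined character vectors."""
    # One GCN layer per similarity graph, combined by plain averaging
    # (an assumption; a learned combination is equally possible).
    refined = np.mean(
        [gcn_layer(a, char_embeddings, w) for a, w in zip(graphs, weights)], axis=0
    )
    # Add the original embeddings back (a residual-style choice assumed here).
    classifier_weights = char_embeddings + refined
    # hidden: (seq_len, dim), classifier_weights: (num_chars, dim)
    return hidden @ classifier_weights.T

# Toy setup: 6 hypothetical characters, random stand-ins for BERT outputs.
rng = np.random.default_rng(0)
num_chars, dim, seq_len = 6, 8, 4
pron_graph = normalized_adjacency(num_chars, [(0, 1), (2, 3)])   # similar pronunciation
shape_graph = normalized_adjacency(num_chars, [(1, 4)])          # similar shape
char_embeddings = rng.normal(size=(num_chars, dim))
weights = [rng.normal(size=(dim, dim)) * 0.1 for _ in range(2)]
hidden = rng.normal(size=(seq_len, dim))

logits = similarity_aware_logits(
    hidden, char_embeddings, [pron_graph, shape_graph], weights
)
predictions = logits.argmax(axis=1)  # one predicted character index per position
```

The point of the design is that characters linked in either graph end up with related classifier vectors, so the classifier's scores for a position are shaped by which characters are easily confused with one another.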

Experimental Results

The efficacy of SpellGCN is demonstrated through experiments on three human-annotated datasets. The model improves on existing systems in both error detection and error correction, and it consistently achieves higher F1 scores than BERT without SpellGCN and the other baseline models. These results underscore the value of incorporating phonological and visual knowledge.
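For readers unfamiliar with how detection and correction F1 are typically computed for this task, the following is a minimal sketch of one common sentence-level convention. The function name and the toy strings are hypothetical, the sketch assumes source, gold, and predicted sentences have equal length, and the paper's own evaluation script may differ in detail.

```python
def sentence_level_f1(sources, golds, preds):
    """Return (detection_f1, correction_f1) for aligned source/gold/predicted sentences."""
    flagged = truly_wrong = det_hits = cor_hits = 0
    for src, gold, pred in zip(sources, golds, preds):
        # Positions where the gold or the prediction differs from the source.
        gold_pos = {i for i, (s, g) in enumerate(zip(src, gold)) if s != g}
        pred_pos = {i for i, (s, p) in enumerate(zip(src, pred)) if s != p}
        flagged += bool(pred_pos)       # sentences the model changed
        truly_wrong += bool(gold_pos)   # sentences that really contain errors
        if gold_pos and pred_pos == gold_pos:
            det_hits += 1               # every error position found, nothing extra
            cor_hits += pred == gold    # and every replacement is right

    def f1(hits):
        precision = hits / flagged if flagged else 0.0
        recall = hits / truly_wrong if truly_wrong else 0.0
        total = precision + recall
        return 2 * precision * recall / total if total else 0.0

    return f1(det_hits), f1(cor_hits)

# Toy data with Latin placeholders standing in for characters.
sources = ["abcd", "efgh", "ijkl"]
golds   = ["abxd", "efgh", "ijkz"]
preds   = ["abxd", "efgh", "ijky"]
detection_f1, correction_f1 = sentence_level_f1(sources, golds, preds)
```

In the toy data the model finds both error positions but fixes only one of them correctly, so detection F1 is higher than correction F1, which is the usual relationship between the two scores.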

Theoretical and Practical Implications

SpellGCN's architecture reflects the need to combine semantic and symbolic knowledge in natural language processing tasks. It shows how domain-specific features, here the phonological and visual properties of Chinese characters, can inform NLP models that traditionally rely on semantic embeddings alone. The method improves CSC performance, and it could potentially extend to grammatical error correction in other languages that, like Chinese, use non-alphabetic scripts.

Future Directions

The success of SpellGCN in the Chinese language domain suggests that similar methodologies could yield improvements in error correction systems for other languages, especially where character-based scripts are predominant. Furthermore, the integration of other forms of similarity or prior knowledge through adaptable GCN frameworks opens avenues for future research into multilingual error correction systems and syntactic consistency models.

Conclusion

This paper makes a compelling case for integrating domain-specific knowledge into language models, specifically for the intricacies of Chinese spelling correction. By exploiting similarities between closely related characters, SpellGCN corrects spelling errors accurately and efficiently, and it lays a foundation for more advanced CSC systems and potentially for broader applications in AI-driven language processing.