Audio-Visual Instance Discrimination with Cross-Modal Agreement

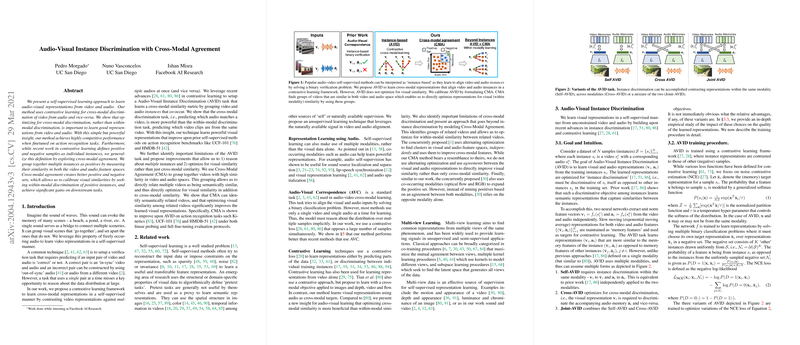

This paper introduces a novel self-supervised learning framework designed to derive audio-visual representations from video and audio data, leveraging the efficacy of cross-modal contrastive learning. The methodology presented deviates from conventional within-modal discrimination approaches, focusing instead on cross-modal discrimination to cultivate robust audio-visual feature representations. Drawing on the paradigm of contrastive learning, the authors devised two key innovations: Audio-Visual Instance Discrimination (AVID) and Cross-Modal Agreement (CMA).

The cornerstone of this research is the AVID framework, which is constructed around the premise of differentiating video instances using audio data, and vice versa. This is achieved through a contrastive loss mechanism, a technique drawing from recent advances in representation learning that optimizes the model to better align the audio-visual representations between concurrent video and audio tasks. Unlike some existing methods that treat audio-visual pairs as independent single instances, AVID's framework allows for a richer understanding of the relationship between modalities by generalizing samples into groups through cross-modal agreement.

A significant focus of the paper is the empirical demonstration that cross-modal discrimination is more beneficial than within-modal approaches in learning representations from video and audio. This insight is realized through systematic evaluations against common action recognition datasets such as UCF-101 and HMDB-51, where the proposed model presents superior performance compared to previous methods.

Enhancing AVID, the authors propose CMA, which rectifies certain limitations inherent in instance discrimination approaches, such as false negative sampling and the absence of within-modal calibration. CMA strategically utilizes audio-visual co-occurrence to form groups of related video instances and optimize both cross-modal and within-modal tasks, thereby refining the quality of the audio-visual representations.

The experimental setup spans evaluations using large-scale datasets, including Kinetics and Audioset, with performances quantitatively assessed through downstream tasks of action and sound recognition. The results indicate that both AVID and CMA methodologies offer substantial improvements in the robustness and transferability of learned representations, solidifying the position of this approach as a state-of-the-art technique.

This research implies promising developments for self-supervised multi-modal learning, with potential applications extending into fields such as video understanding, multi-modal fusion technologies, and autonomous systems. Prospective work could explore enhancements through extending the cross-modal agreement to other domains or testing the frameworks on more varied datasets to further validate and realize generalized models for real-world applications. The findings of this paper underscore the effectiveness of deploying contrastive learning principles in multi-modal scenarios and suggest an avenue for future AI systems to exploit complex multi-modal interactions more intuitively and effectively.