Exploring the Longformer: A Transformer Model for Handling Long Documents

Introduction to Longformer

Modern NLP tasks often bump against the limitations set by traditional transformer models, namely the quintessential BERT and its ilk, rooted deeply in the self-attention mechanism that unfortunately scales quadratically with sequence length. This puts a strain on processing long documents effectively—a challenge that the Longformer addresses.

Key Features of Longformer

Attention Mechanism

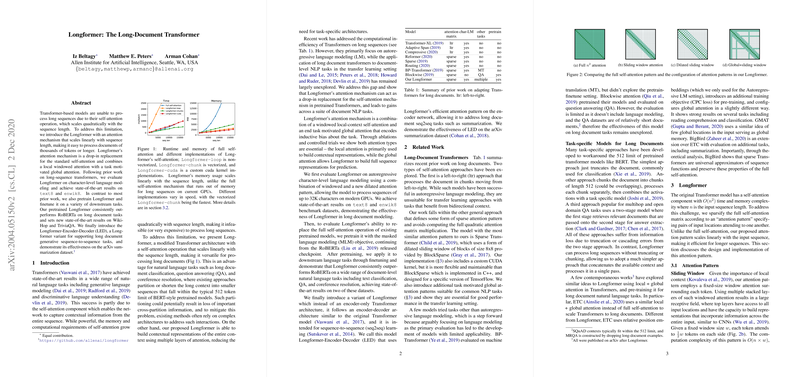

The heart of Longformer's innovation lies in its attention mechanism which combines sliding window attention with global attention. This hybrid approach not only captures the local context around each token via the windowed attention but also embeds task-relevant global information seamlessly. The brilliance of this design is that it maintains a linear computational cost with respect to the input length. Thus, handling extensive documents becomes feasible without a substantial compute penalty.

Performance on Benchmarks

Remarkably, the Longformer demonstrates its prowess by setting new performance benchmarks on tasks like character-level LLMing on the text8 and enwik8 datasets. Moreover, it outstrips RoBERTa—a well-respected model in document-level tasks—indicating not only its efficiency but also its efficacy in managing longer texts.

Bringing it into Context

Longformer's architecture isn't just about handling longer texts; it's about understanding them better. For instance, its ability to integrate broad document contexts helps in tasks like question answering and summarization, where nuances spread across a document can determine the accuracy of the output. By not truncating or excessively partitioning the data, Longformer retains narrative threads that might otherwise get clipped.

Longformer in Application

- LLMing: Demonstrated by its state-of-the-art results in both the text8 and enwik8 datasets.

- Downstream NLP Tasks: Extensive tests show superior performance in long document classification, question answering, and coreference resolution compared to baseline models like RoBERTa.

Longformer-Encoder-Decoder (LED)

Branching from its main architecture, the Longformer also introduces a variant designed specifically for generative tasks—the Longformer-Encoder-Decoder (LED). This model variation adapts the efficient Longformer attention mechanisms for both encoding and decoding processes. It particularly shines in the field of long document summarization, demonstrated by its robust results on the challenging arXiv dataset.

Look Towards the Future

There's fertile ground for further enhancements:

- Experimenting with alternative pretraining objectives could yield richer or more focused contextual embeddings.

- Expanding the maximal sequence length can push the boundaries on what types of documents or datasets the Longformer can tackle.

- Broadening the scope to other NLP tasks that involve large chunks of text could further cement Longformer's utility in real-world applications.

Wrapping Up

In essence, the Longformer presents a powerful modification to the Transformer model, breaking barriers that have traditionally hampered the processing of extensive textual data. By smartly amalgamating local and global attention mechanisms, it offers a scalable, effective solution for a broad spectrum of NLP tasks, setting new benchmarks and widening the possibility landscape for document-level understanding and beyond. Its adaptations like the LED further showcase its versatility and potential in sequence-to-sequence tasks, marking it as a significant step forward in the evolution of document-aware models.