X-Linear Attention Networks for Image Captioning

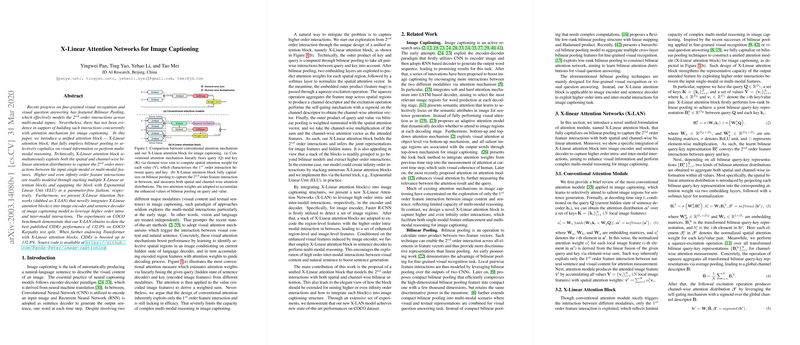

The paper "X-Linear Attention Networks for Image Captioning" introduces a novel approach to image captioning by integrating X-Linear attention blocks that effectively model higher-order interactions among input features. These blocks use bilinear pooling to facilitate both intra- and inter-modal interactions in image captioning, yielding substantial improvements over existing techniques.

In image captioning, the goal is to automatically generate descriptive sentences for given images, drawing parallels from neural machine translation. This task traditionally uses an encoder-decoder framework, where a Convolutional Neural Network (CNN) encodes the visual input, and a Recurrent Neural Network (RNN) generates the descriptive output. Although existing methods have adopted visual attention mechanisms to enhance the interaction between visual and textual modalities, these often only utilize linear, first-order interactions. The authors suggest that such approaches may overlook the complex dynamics required for effective multi-modal reasoning.

The core of the authors’ contributions lies in the design of the X-Linear attention block, which computes second-order interactions through bilinear pooling. This approach captures pairwise feature interactions both spatially and across channels. Furthermore, by compositing multiple X-Linear blocks and integrating them with Exponential Linear Units (ELU), the network is capable of modeling higher and infinity order interactions, thus enhancing its representational capacity.

Experimentally, the X-Linear Attention Networks (X-LAN) were evaluated on the COCO dataset and demonstrated superior performance, achieving a CIDEr score of 132% on the COCO Karpathy test split. This performance is notable compared to models utilizing conventional attention mechanisms. Additionally, embedding X-Linear attention blocks into Transformer architectures yielded a further improvement to a CIDEr score of 132.8%, indicating the transferability of this approach to different architectural paradigms.

The implications of this research are multifaceted. Practically, the integration of higher-order interactions in the attention mechanism results in more representative and contextually aware captioning outputs, potentially benefiting applications in automated content creation, accessibility, and multimedia processing. Theoretically, this work challenges existing paradigms by proposing that higher-order interactions are crucial for neural multimedia understanding tasks, opening avenues for further research into more sophisticated modeling techniques in deep learning.

Future developments may explore the scalability of this approach to even larger datasets or its application to other domains requiring sophisticated multi-modal reasoning, such as video captioning or audio-visual processing. Additionally, the integration of such attention mechanisms with emerging architectures could reveal further synergies, fostering advancements in AI comprehension and generation tasks. The open-sourcing of the code further encourages collaboration and experimentation within the research community, advancing the frontier of image captioning technologies.