An Analytical Overview of "Towards Accurate Scene Text Recognition with Semantic Reasoning Networks"

The paper "Towards Accurate Scene Text Recognition with Semantic Reasoning Networks" introduces a novel framework aimed at improving the accuracy of scene text recognition by effectively integrating semantic reasoning into the recognition process. The core contribution of this work is the development of the Semantic Reasoning Network (SRN), which integrates global semantic context to enhance the recognition capabilities of optical character recognition (OCR) systems.

Key Contributions and Technical Insights

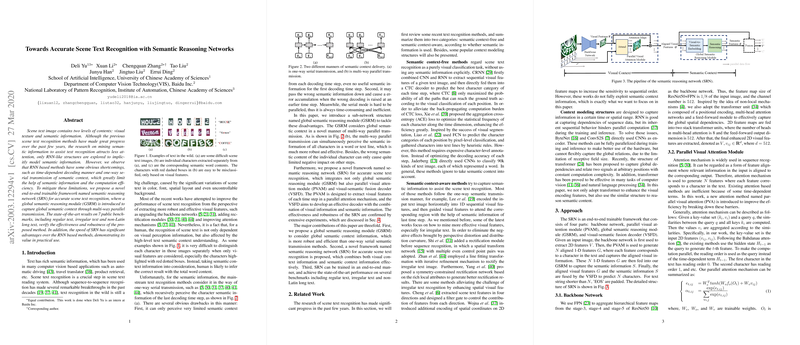

- Semantic Reasoning Network (SRN): The SRN framework is designed as an end-to-end trainable system that shifts from traditional sequence-to-sequence models reliant on Recurrent Neural Networks (RNN) to a more efficient paradigm utilizing a Global Semantic Reasoning Module (GSRM). This module captures semantic contexts efficiently through multi-way parallel transmission, which contrasts sharply with the time-dependent RNN model architectures.

- Global Semantic Reasoning Module (GSRM): The authors address the problem of limited semantic context modeling in existing frameworks by proposing the GSRM. This feature overcomes the inherent limitations of RNN-based models, such as sequential dependency and inefficiency, and offers a robust method to capture comprehensive semantic contexts. The SRN approach leverages transformer-based units to achieve this.

- Parallel Visual Attention Module (PVAM): To better align visual features with semantic context during recognition, the authors propose a PVAM that facilitates parallel processing across character positions. This module improves feature extraction efficiency by eliminating time-dependent barriers, resulting in significant improvements in processing speed and accuracy.

- Visual-Semantic Fusion Decoder (VSFD): The VSFD combines visual and semantic information harmoniously through a gated fusion mechanism, which dynamically balances the contributions from both domains during the decoding process. This fusion ensures that the final predictions consider both the intrinsic visual characteristics and contextual semantic cues.

- Robust Experimental Evaluation: The authors validated their approach on multiple benchmarks, including datasets with regular, irregular, and non-Latin text. Their experiments demonstrated state-of-the-art performance across a variety of conditions and languages, particularly noting improvements on datasets such as ICDAR 2013, ICDAR 2015, and IIIT 5K-Words.

Numerical Results and Practical Implications

The numerical results indicate that the SRN outperforms previous methods on several tough benchmarks, achieving state-of-the-art accuracy while handling a wider range of text variations, such as distortions and long non-Latin scripts. The ability to train the model in an end-to-end fashion simplifies deployment and offers significant practical utility in real-world applications, particularly in scenarios like autonomous driving and multilingual content analysis.

Theoretical and Future Implications

The introduction of transformer-based semantic reasoning within the OCR domain represents a significant advancement in scene text recognition. The multi-way parallel transmission inherently proposed by the GSRM is a key theoretical development that may influence future research directions, not only in text recognition but also in any sequence modeling tasks where capturing global context is crucial. The authors hint at extending these ideas to more LLMs and assessing the efficiency of GSRM in various computational settings could offer promising pathways for further refinement and application.

In conclusion, this paper provides a detailed examination of a novel methodology that integrates semantic reasoning into scene text recognition, delivering noteworthy improvements over traditional methods. With its substantial empirical results and theoretical advancements, the work positions itself as a significant contribution to both the fields of computer vision and natural language processing.