An Overview of PhoBERT: Vietnamese Pre-trained LLMs

The paper presents PhoBERT, a set of monolingual pre-trained models tailored for Vietnamese, marking a significant advancement in the field of Vietnamese NLP. PhoBERT is introduced in two configurations: PhoBERT\textsubscript{base} and PhoBERT\textsubscript{large}, both of which are designed to improve upon existing multilingual models by leveraging a corpus that is specific to the Vietnamese language.

Background and Motivations

The development of pre-trained models has substantially enhanced the performance of NLP tasks across various languages, predominantly for English, owing to models like BERT. However, such successes have not been universally translated to Vietnamese due to a limitation in the availability and scope of language-specific pre-training datasets. Multilingual models, while useful, have been noted to underperform when compared to models specifically trained on a particular language corpus.

For the Vietnamese language, two primary issues are identified: the limited size of the available Vietnamese corpus and the inadequacy of syllable-level tokenization techniques used in prior models. The Vietnamese language's syntax involves white space usage that complicates token separation at the syllable level, impacting models trained using those datasets.

Methodology

The authors of PhoBERT address these challenges by constructing a more comprehensive 20GB dataset that merges the Vietnamese Wikipedia corpus and a large Vietnamese news corpus. Essential text preprocessing, including word segmentation using RDRSegmenter from VnCoreNLP, precedes the application of the fastBPE algorithm. This allows the model to better understand the structure inherent to Vietnamese language data by focusing on word-level language representation.

Utilizing the BERT architecture optimized with RoBERTa's training protocols, PhoBERT employs a pre-training strategy fine-tuned to Vietnamese with a maximum sequence length and batch configurations adapted for efficiency during training over distinct lengths of epochs for each model variant.

Results and Implications

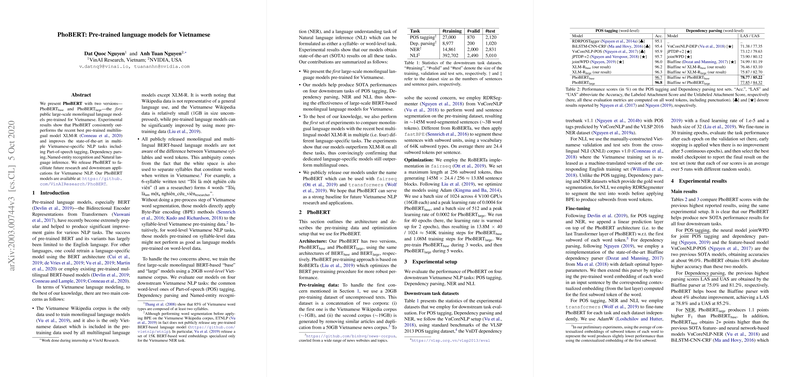

PhoBERT is rigorously compared to both existing state-of-the-art Vietnamese NLP solutions and the multilingual XLM-R model across tasks including POS tagging, Dependency parsing, Named-entity recognition (NER), and Natural language inference (NLI). The results demonstrate PhoBERT's significant rise in performance across these tasks, establishing new benchmarks and outperforming XLM-R in setting dedicated to Vietnamese language utilization.

Notably, the dependency parsing results suggest potential for further exploration into layer-specific representation of syntactic information within BERT-like architectures. This aspect could influence future adjustments to layer utilization strategies in NLP model design.

Future Developments

The release of PhoBERT provides a robust baseline for further research into Vietnamese NLP. By illustrating the advantages of monolingual-specific pre-training, this work encourages exploration into similar models for other underrepresented languages. Additionally, PhoBERT paves the way for potential commercial and academic applications, promising enhanced tools and resources for Vietnamese language technology.

The ongoing development of PhoBERT suggests further refinements and improved techniques for language-specific models. It opens avenues for extensive research into the integration of more diverse text corpora to align machine learning initiatives with natural language comprehension across varied linguistic landscapes. Researchers are prompted to explore further improvements and adaptations of similar methodologies to other regional and minority languages, leveraging the foundational work presented by PhoBERT.