Essay on "CrossWOZ: A Large-Scale Chinese Cross-Domain Task-Oriented Dialogue Dataset"

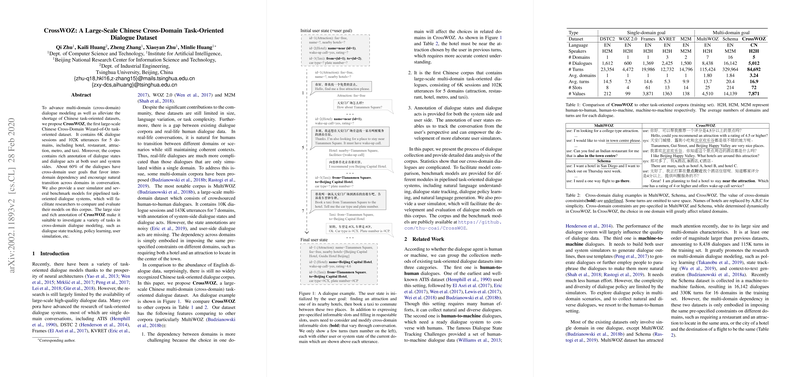

The paper "CrossWOZ: A Large-Scale Chinese Cross-Domain Task-Oriented Dialogue Dataset" introduces a substantial contribution to the domain of task-oriented dialogue systems by presenting CrossWOZ, the first extensive Chinese multi-domain dialogue dataset. This dataset comprises 6,000 dialogue sessions and 102,000 utterances covering five distinct domains: hotel, restaurant, attraction, metro, and taxi. Its unique emphasis lies in its capacity to simulate real-world conversational transitions across different domains, making it an invaluable resource for studying cross-domain dialogue modeling.

Dataset Characteristics

CrossWOZ delineates itself by providing detailed annotations of dialogue states and dialogue acts on both user and system sides, a feature that distinctly augments its utility for various tasks such as dialogue state tracking and policy learning. A significant portion, approximately 60%, of the dialogues exhibit cross-domain user goals that necessitate inter-domain dependency and natural transitional flow, thus presenting enhanced complexity compared to single-domain dialogue datasets.

The dialogue corpus is collected through a well-structured human-to-human interactive setup. With a controlled synchronous dialogue setting, the workers were guided through iterative training to ensure natural, coherent dialogues. Each conversation involved a system-representative (wizard) and a user, enabling the collection of accurate and informative dialogue sequences.

Methodology and Data Analysis

The dataset construction followed a meticulous process, beginning with database creation, followed by goal generation which incorporated a variety of cross-domain constraints, thus capturing dependencies between domain choices. The dialogue collection was methodically controlled to ensure comprehensive coverage of user goals. By providing explicit user and system state annotations, CrossWOZ allows for a deeper understanding of the task-complexity involved in natural conversational systems.

Statistical analysis reveals the dataset's complexity, with an average dialogue extending over 16.9 turns and incorporating over three sub-goals. Such statistics posit CrossWOZ as more intricate than its predecessors, notably MultiWOZ, suggesting a higher complexity that is reflective of real-world interactions.

Benchmarking and Results

CrossWOZ was tested through a series of benchmark models covering various components of dialogue systems such as natural language understanding (NLU), dialogue state tracking (DST), and dialogue policy learning. The performances indicate challenges particularly in handling cross-domain dependencies, with varying success across simpler and more complex dialogue instances. The state-of-the-art DST model, TRADE, displayed limitations, hinting at the need for more robust models tailored to cross-domain handling.

Additionally, dialogue policy learning and natural language generation benchmarks were conducted, showcasing the intricate nature of multi-domain dialogue transitions. Results indicated that traditional models faced noticeable difficulty in capturing domain transitions and cross-domain constraints, emphasizing areas for improvement.

Implications and Future Directions

The introduction of CrossWOZ offers substantial implications for both practical applications and future research in AI. Practically, it provides a benchmark for evaluating cross-domain dialogue systems in Chinese, an area previously underrepresented. Theoretically, its complex structure fosters the development of advanced algorithms capable of better understanding and managing cross-domain dialogue dynamics.

Future developments could focus on enhancing DST models to more effectively address cross-domain challenges, leveraging deeper context integration, and improving NLU components for better accuracy in multi-domain settings. Continued exploration of reinforcement learning techniques for training dialogue policies on CrossWOZ data could also yield beneficial outcomes.

In summary, CrossWOZ presents an essential resource for advancing task-oriented dialogue systems, particularly in multilingual contexts. It sets the groundwork for subsequent innovations in effective dialogue management across diverse and intersecting domains.