Sparse Sinkhorn Attention: An Efficient Approach to Attention Mechanisms

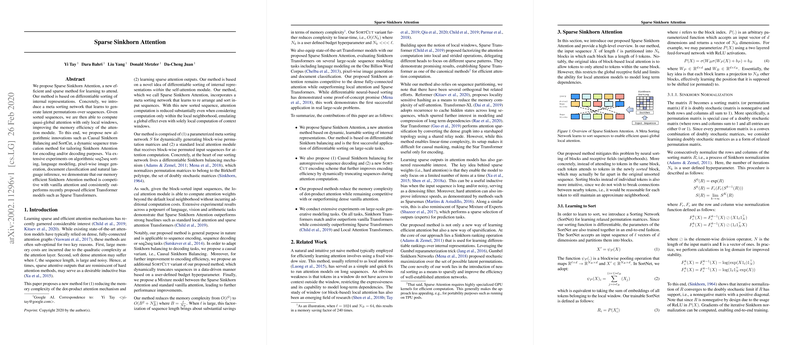

The paper "Sparse Sinkhorn Attention" addresses the problem of learning sparse, memory-efficient attention. Standard dense attention compares every token with every other token, so its memory cost grows quadratically with sequence length, which limits how long the inputs to traditional attention models can be. The authors propose Sparse Sinkhorn Attention, a method that learns sparse attention patterns through differentiable sorting of internal representations, with the goal of reducing that quadratic memory cost.

Core Contributions

The primary innovation of this work is a parameterized meta sorting network that learns to generate latent permutations over sequences. Once a sequence is sorted, the model can compute quasi-global attention using only local windows, because tokens that were far apart in the original order can land in the same block after sorting. The sorting network produces block-wise permutation matrices that are made differentiable through Sinkhorn balancing, that is, alternating row and column normalization. The resulting matrices are doubly stochastic (every row and every column sums to 1) and therefore lie in the Birkhoff polytope, the convex hull of permutation matrices, so they act as relaxed, learnable permutations.
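A minimal sketch of Sinkhorn balancing in log space, written in pure Python so it runs without extra libraries. The logit matrix stands in for the meta sorting network's output over N_B blocks; the specific values, the `temperature` parameter (in the style of Gumbel-Sinkhorn, with the Gumbel noise omitted), and the iteration count are illustrative assumptions, not the paper's settings.

```python
import math
from typing import List

Matrix = List[List[float]]


def logsumexp(xs: List[float]) -> float:
    """Numerically stable log(sum(exp(x)))."""
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def sinkhorn(logits: Matrix, n_iters: int = 20, temperature: float = 1.0) -> Matrix:
    """Sinkhorn balancing of a square logit matrix, performed in log space.

    Alternating row and column normalization drives exp(log_p) toward a
    doubly stochastic matrix. Lower temperatures push the result closer to a
    hard permutation matrix (assumption: temperature scaling as in Gumbel-Sinkhorn).
    """
    n = len(logits)
    log_p = [[x / temperature for x in row] for row in logits]
    for _ in range(n_iters):
        # Row step: make every row sum to 1 in probability space.
        row_lse = [logsumexp(row) for row in log_p]
        log_p = [[x - lse for x in row] for row, lse in zip(log_p, row_lse)]
        # Column step: make every column sum to 1.
        col_lse = [logsumexp([log_p[i][j] for i in range(n)]) for j in range(n)]
        log_p = [[log_p[i][j] - col_lse[j] for j in range(n)] for i in range(n)]
    return [[math.exp(x) for x in row] for row in log_p]


# Hypothetical block-to-block sorting logits for N_B = 3 blocks.
logits = [[2.0, 0.1, -1.0], [0.3, 1.5, 0.2], [-0.5, 0.4, 3.0]]
P = sinkhorn(logits, n_iters=200)
print([round(sum(row), 6) for row in P])  # row sums
print([round(sum(P[i][j] for i in range(3)), 6) for j in range(3)])  # column sums
```

Working in log space avoids overflow when the logits are large or the temperature is small, which is the regime where the balanced matrix approaches a hard permutation.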

Additionally, the paper introduces two auxiliary techniques: Causal Sinkhorn Balancing, which adapts the sorting to autoregressive sequence decoding, where a position must not use information from future tokens, and SortCut, a variant that dynamically truncates the sorted sequence for more efficient encoding. The authors assert that their approach significantly outperforms existing efficient models such as Sparse Transformers and performs competitively with standard dense attention.
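The SortCut idea can be sketched as follows, assuming that after sorting only the first `k_blocks` key/value blocks are kept and every query attends to that shortened set. The function name `sortcut_attention`, the hard `block_order` input (for example, obtained by hardening a Sinkhorn matrix), and the 1/sqrt(d) scaling are illustrative assumptions, not the paper's implementation; Causal Sinkhorn Balancing is not shown.

```python
import math
from typing import List, Sequence, Tuple

Vec = List[float]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def sortcut_attention(
    queries: List[Vec],
    keys: List[Vec],
    values: List[Vec],
    block_size: int,
    block_order: List[int],
    k_blocks: int,
) -> Tuple[List[Vec], int]:
    """Hypothetical SortCut-style attention.

    block_order lists key-block indices in sorted order. Only the first
    k_blocks sorted blocks are kept, so each query scores
    k_blocks * block_size keys instead of all ell keys.
    Returns the outputs and the number of keys each query attended to.
    """
    kept = block_order[:k_blocks]
    idx = [b * block_size + t for b in kept for t in range(block_size)]
    k_sel = [keys[i] for i in idx]
    v_sel = [values[i] for i in idx]
    scale = 1.0 / math.sqrt(len(queries[0]))
    outputs = []
    for q in queries:
        weights = softmax([dot(q, k) * scale for k in k_sel])
        outputs.append([sum(w * v[j] for w, v in zip(weights, v_sel)) for j in range(len(v_sel[0]))])
    return outputs, len(idx)


# Toy example: ell = 8 tokens in 4 blocks of 2; each value records its block id.
ell, B = 8, 2
queries = [[float(i)] for i in range(ell)]
keys = [[0.5 * i] for i in range(ell)]
values = [[float(i // B)] for i in range(ell)]
out, n_keys = sortcut_attention(queries, keys, values, B, [3, 0, 1, 2], k_blocks=1)
print(n_keys, [round(o[0], 6) for o in out])
```

Because the kept budget is fixed, the per-query cost no longer grows with the sequence length; the trade-off is that keys outside the first `k_blocks` sorted blocks are never seen.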

Numerical Results

The empirical evaluation spans several domains, including language modeling, algorithmic sequence sorting, image generation, and document classification. On language modeling, Sparse Sinkhorn Transformers achieve lower perplexity than the local attention and Sparse Transformer baselines. On image generation, the proposed method achieves lower bits per dimension (Bpd) than conventional Transformer and Sparse Transformer baselines.

Theoretical and Practical Implications

On the theoretical side, the work shows that sparsity and memory efficiency in attention can be learned rather than fixed in advance. By integrating neural sorting, Sparse Sinkhorn Attention combines local and global attention through block-wise permutations: attention stays local within blocks, while the learned sorting decides which distant blocks are brought together. This targets the long-standing computational cost of attending over long sequences.
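The combination of local attention with block-wise permutations can be illustrated with a small sketch. Here `perm` is an N_B x N_B block permutation (hard, or doubly stochastic after Sinkhorn balancing), and row i mixes the key and value blocks into a "sorted" block i. Letting each query attend to its own local block concatenated with that sorted block is an assumption made for illustration; the function name `sinkhorn_block_attention` is hypothetical and the paper's exact formulation may differ.

```python
import math
from typing import List, Sequence

Vec = List[float]
Matrix = List[List[float]]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def sinkhorn_block_attention(
    queries: List[Vec], keys: List[Vec], values: List[Vec], block_size: int, perm: Matrix
) -> List[Vec]:
    """Hypothetical block-sorted local attention (illustrative assumption)."""
    n_blocks = len(keys) // block_size
    scale = 1.0 / math.sqrt(len(queries[0]))

    def block(xs: List[Vec], b: int) -> List[Vec]:
        return xs[b * block_size:(b + 1) * block_size]

    def sorted_block(xs: List[Vec], i: int) -> List[Vec]:
        # Position t of sorted block i is sum_j perm[i][j] * (block j)[t].
        dim = len(xs[0])
        return [
            [sum(perm[i][j] * xs[j * block_size + t][c] for j in range(n_blocks)) for c in range(dim)]
            for t in range(block_size)
        ]

    outputs = []
    for i in range(n_blocks):
        # Keys/values for block i: local block followed by the sorted block.
        k_i = block(keys, i) + sorted_block(keys, i)
        v_i = block(values, i) + sorted_block(values, i)
        for q in block(queries, i):
            weights = softmax([dot(q, k) * scale for k in k_i])
            outputs.append([sum(w * v[c] for w, v in zip(weights, v_i)) for c in range(len(v_i[0]))])
    return outputs


# Toy example: ell = 4, block_size = 2, identical keys so all scores tie.
q = [[1.0] for _ in range(4)]
k = [[1.0] for _ in range(4)]
v = [[0.0], [0.0], [1.0], [1.0]]  # block 0 holds 0.0, block 1 holds 1.0
ident = sinkhorn_block_attention(q, k, v, 2, [[1.0, 0.0], [0.0, 1.0]])
swap = sinkhorn_block_attention(q, k, v, 2, [[0.0, 1.0], [1.0, 0.0]])
print([o[0] for o in ident], [o[0] for o in swap])
```

With the identity permutation each block only sees itself, so block 0 outputs 0.0 and block 1 outputs 1.0; with the swap, each block also sees the other one, and every output becomes the average 0.5 even though every query still scores only 2 * block_size keys.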

On the practical front, the method reduces attention memory from $O(\ell^2)$ in the sequence length $\ell$ to a much smaller cost determined by the block size and the number of blocks, and the optional SortCut variant brings it down to linear in $\ell$. These savings improve scalability, which matters for deployment in resource-constrained settings and for applications that process long sequences. As transformer models continue to play central roles in machine learning, such efficiency improvements should broaden where they can be applied.
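To make the scaling concrete, the sketch below counts attention-score entries, a rough proxy for attention memory, under stated assumptions: in the block-sorted case each query scores its local block plus one sorted block (2 * block_size keys), SortCut keeps `k_blocks` sorted blocks, and both pay for the N_B x N_B sorting matrix. The function names and counting rules are hypothetical illustrations, not the paper's complexity formulas.

```python
def dense_scores(ell: int) -> int:
    """Dense attention: every query scores every key."""
    return ell * ell


def sinkhorn_scores(ell: int, block_size: int) -> int:
    """Assumed count: local block + one sorted block per query, plus N_B x N_B sorting matrix."""
    n_blocks = ell // block_size
    return ell * 2 * block_size + n_blocks * n_blocks


def sortcut_scores(ell: int, block_size: int, k_blocks: int) -> int:
    """Assumed count: first k_blocks sorted blocks per query, plus the sorting matrix."""
    n_blocks = ell // block_size
    return ell * k_blocks * block_size + n_blocks * n_blocks


for ell in (1024, 4096, 16384):
    print(ell, dense_scores(ell), sinkhorn_scores(ell, 64), sortcut_scores(ell, 64, 1))
```

For these sequence lengths the N_B x N_B sorting term stays small next to the query-key term, so the block-sorted and SortCut counts grow roughly in proportion to ell, while the dense count grows with ell squared.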

Future Developments

Looking ahead, the results of Sparse Sinkhorn Attention encourage further exploration of its scalability and adaptability across diverse tasks. Potential future directions include refining the meta sorting network so that it learns more effectively across varying sequence structures, or integrating the method with neural architectures other than transformers. Finer-grained sorting or sparsification schemes could also further improve the method's performance and efficiency.

In conclusion, Sparse Sinkhorn Attention is a promising advance in the design of efficient attention mechanisms and a step toward more scalable machine learning models. By combining differentiable sorting with sparsity, it substantially improves the memory efficiency of attention computation, making it a significant contribution to the field.