An Overview of "Towards Learning a Generic Agent for Vision-and-Language Navigation via Pre-training"

The paper "Towards Learning a Generic Agent for Vision-and-Language Navigation via Pre-training" introduces a pre-training approach to enhance agents in Vision-and-Language Navigation (VLN) tasks. The authors emphasize a novel pre-training and fine-tuning paradigm that produces a more generalized vision-and-LLM, aiming to improve navigation capabilities in unseen environments and tasks.

Vision-and-Language Navigation presents a complex challenge due to its reliance on multi-modal inputs, requiring the agent to interpret both visual environments and natural language instructions. Traditional methods often use a sequence-to-sequence architecture with attention mechanisms, but these approaches typically learn from scratch and do not utilize prior visual and language domain knowledge effectively. This paper seeks to address these limitations by proposing pre-training techniques attuned to the nuances of vision and language interactions.

Methodology

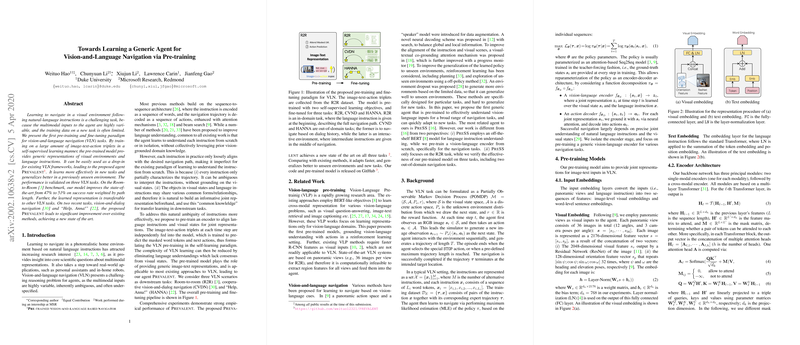

The proposed approach includes a pre-training model that integrates image-text-action triplets, using pre-training objectives that ground language instructions with visual states. The principal innovation lies in two core learning tasks: Image-attended Masked LLMing (MLM) and Action Prediction (AP).

- Image-attended Masked LLMing (MLM): This task builds on the model's ability to predict missing words within a sentence, but with the added complexity of aligning these predictions with visual inputs. The goal is to forge an association between visual states and instructions, improving the agent's ability to interpret language based on environmental context.

- Action Prediction (AP): This is designed to better guide the agent's decision-making processes. The model uses joint visual and linguistic representations to predict navigation actions, enhancing the agent's ability to plan effectively within a given environment.

These pre-training tasks are complemented by a Transformer-based multi-layer architecture, combining single-modal encoders for processing visual and text data, followed by a cross-modal encoder for integrating these representations.

Empirical Evaluation

The paper reports strong empirical validation across three VLN tasks: Room-to-Room (R2R), Cooperative Vision-and-Dialog Navigation (CVDN), and the "Help, Anna!" (HANNA) task. These tasks vary in complexity and requirements:

- Room-to-Room (R2R): Prevalent, the proposed agent, significantly outperformed existing models in terms of success rate and SPL, particularly excelling in unseen environments, demonstrating the model's generalization prowess.

- Cooperative Vision-and-Dialog Navigation (CVDN): The proposed model showed improvements in goal-directed navigation based on dialog histories, which is inherently more ambiguous compared to fixed language instructions. Pre-trained models, especially those incorporating action information, effectively transferred knowledge across tasks.

- HANNA: The model excelled in this interactive RL task, benefitting from the ability to comprehend dynamic instructions and perform subtasks intelligently, which suggests its robustness in real-world applications where human-agent interaction is critical.

Implications and Future Work

The outcomes of this research suggest substantial practical implications for developing more adaptable AI in complex environments, such as autonomous agents for indoor navigation and personal assistants. By utilizing a considerable volume of synthesized and real data, the framework leverages pre-training to enhance domain adaptability. The paper notes that the transferability of pre-trained models to out-of-domain tasks highlights not only efficiency in learning but also potential reductions in data requirements for new tasks.

Future developments are likely to explore more advanced pre-training strategies, potentially incorporating additional environmental cues and action policies. There could also be further investigation into refining the balance between computational efficiency and model robustness, given the complexities of multi-modal integrations. The advancements seen here open avenues for more nuanced navigation tasks, potentially integrating more sophisticated forms of human interaction and assistance.

In conclusion, through its innovative approach to pre-training for VLN, this paper contributes a robust framework for merging vision and language understanding, setting a new benchmark for future research and application within this domain.