Interpretability of Machine Learning-Based Prediction Models in Healthcare: An Expert Review

The paper "Interpretability of Machine Learning-Based Prediction Models in Healthcare" by Stiglic et al. offers a comprehensive analysis of the methodologies and implications surrounding the interpretability of ML models within healthcare settings. The primary focus is on ensuring that the predictive models are not only effective but also interpretable, fundamentally affecting their reliability and trustworthiness, especially in a field as critical as healthcare.

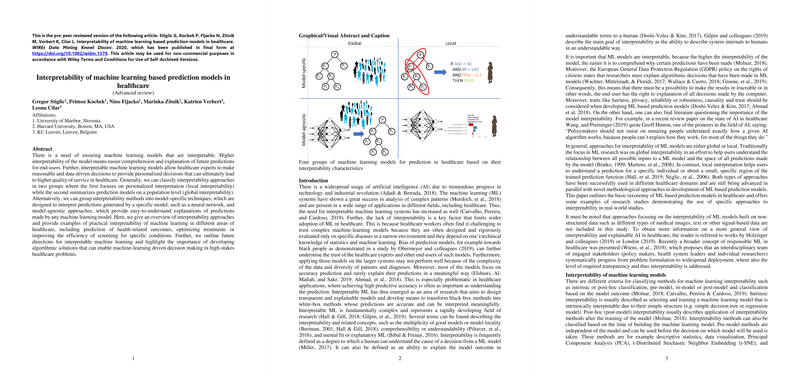

The authors categorize interpretability into two primary types: local and global interpretability. Local interpretability concerns understanding specific predictions, whereas global interpretability seeks to provide insight into the model's overall behavior. Additionally, interpretability methods are divided into model-specific techniques, which apply to specific types of models like neural networks, and model-agnostic approaches, which aim to interpret predictions from any model.

One significant challenge addressed in the paper is the complexity of ML models, often labeled "black-boxes," which hinders their acceptance among healthcare professionals. The trust deficit is attributable to the difficulty in understanding the rationale behind predictions, combined with concerns of bias, such as racial bias in predictive healthcare algorithms. The authors highlight the critical balance between predictive accuracy and interpretability, given the ethical and regulatory obligations such as the General Data Protection Regulation (GDPR) which mandates explainability of algorithmic decisions.

The paper explores various interpretability techniques, categorized based on their approach and applicability to different ML models. Model-specific techniques, like decision trees and naive Bayes classifiers, provide intrinsic interpretability through their straightforward structures. On the other hand, techniques like SHAP (Shapley Additive Explanations) and LIME (Local Interpretable Model-agnostic Explanations) exemplify model-agnostic methods, designed to extract interpretable insights from complex models after their creation.

Practical applications of these methodologies in the healthcare domain highlight their significance. For instance, the paper cites the use of SHAP in predicting and preventing hypoxaemia during surgery, which notably increased anesthesiologists' anticipation of such events by 15%. These examples underline the potential improvements in healthcare outcomes through interpretability, yet they also point to scalability challenges, particularly computational demands when applying techniques like LIME or SHAP at scale.

Furthermore, the paper introduces innovative tools like MUSE (Model Understanding through Subspace Explanations), which combines traditional global interpretability with local perspectives. Such approaches emphasize a move toward personalized medicine, where predictions can be tailored to individual patients or subgroups with distinct characteristics.

The paper concludes with a discussion on the future of interpretability in ML for healthcare. It identifies the current gap in understanding complex models like Graph Neural Networks (GNN) and highlights the potential of tools like GNNExplainer to illuminate these models' inner workings. Future research directions include developing algorithms that balance interpretability with computational efficiency and scalability, paving the way for wide-scale ML adoption in healthcare.

In conclusion, this paper offers an extensive exploration of the interpretability of ML models in healthcare, emphasizing its critical role in fostering trust and improving patient outcomes. It serves as a call to action for further research on scalable, interpretable models, highlighting the importance of explainable AI in cultivating a reliable and ethical AI practice in healthcare environments.