Multilingual Denoising Pre-training for Neural Machine Translation

The paper "Multilingual Denoising Pre-training for Neural Machine Translation" shows that multilingual denoising pre-training improves machine translation (MT) performance across a wide range of MT tasks. Its focus is mBART, an autoregressive sequence-to-sequence denoising auto-encoder pre-trained on large monolingual corpora using the BART objective. Unlike prior methods, which typically restricted their attention to the encoder, the decoder, or the reconstruction of parts of the text, mBART pre-trains a complete model by denoising full texts in multiple languages.
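As a sketch of the denoising objective mentioned above, the following uses notation introduced here for illustration: $\mathcal{D}_i$ is the monolingual corpus for language $i$, $g$ is the noising function that corrupts a text, and $\theta$ denotes the model parameters. None of these symbols appear in the summary itself.

```latex
\mathcal{L}_\theta
  = \sum_{\mathcal{D}_i \in \mathcal{D}} \; \sum_{x \in \mathcal{D}_i}
    \log P\bigl(x \mid g(x);\, \theta\bigr)
```

Training maximizes this quantity: the model receives the corrupted text $g(x)$ and is scored on how well it reconstructs the original text $x$, summed over all languages in the pre-training set.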

Main Contributions

- mBART Architecture and Training:

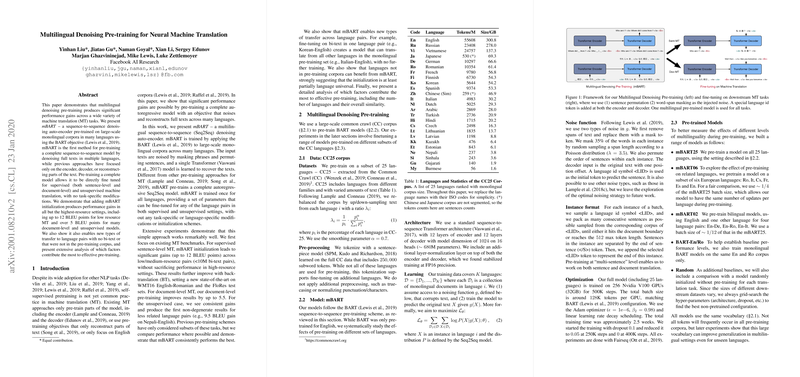

- mBART is a Transformer with 12 encoder layers and 12 decoder layers, a model dimension of 1024, and 16 attention heads.

- mBART is pre-trained on a subset of 25 languages extracted from Common Crawl (CC). Text is tokenized with a SentencePiece model whose vocabulary contains 250,000 subword tokens.

- Pre-training applies two types of noise (span masking and sentence permutation), and the model learns to reconstruct the original text from the corrupted input. The resulting representations generalize well across multiple tasks.

- Effectiveness Across MT Tasks:

- Extensive experiments show that mBART yields performance gains in low- and medium-resource settings, with improvements of up to 12 BLEU points for low-resource pairs.

- For document-level MT, mBART pre-training improves performance by up to 5.5 BLEU points.

- In unsupervised MT, mBART reduces the need for task-specific modifications and produces the first non-degenerate results for certain language pairs, such as a 9.5 BLEU point gain on Nepali-English.

- Transfer Learning Capabilities:

- mBART demonstrates remarkable transfer learning capabilities, performing well on language pairs not explicitly included during the pre-training phase.

- The paper shows that fine-tuning on bi-text for a single language pair yields a model that can translate from the other languages in the pre-training set into the same target language, with no further training.

- Analysis and Comparison:

- A detailed analysis identifies the factors that contribute most to effective pre-training, including the number of languages and corpus balancing.

- Comparisons with existing methods (e.g., MASS, XLM) position mBART favorably, showcasing superior results in various benchmark tests.
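The two noise types listed under "mBART Architecture and Training" (span masking and sentence permutation) can be illustrated with a minimal sketch. Everything below is an assumption made for illustration rather than the authors' implementation: the function names, the `<mask>` placeholder, masking each sentence separately, and the default values (a 35% masking ratio with Poisson-distributed span lengths of mean 3.5), which are not stated in the summary above.

```python
import math
import random

MASK = "<mask>"  # hypothetical placeholder; the real vocabulary symbol may differ


def sample_poisson(lam, rng):
    """Draw one Poisson(lam) sample using Knuth's multiplication method."""
    threshold = math.exp(-lam)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def mask_spans(tokens, rng, mask_ratio=0.35, mean_span=3.5):
    """Hide about mask_ratio of the tokens, replacing each hidden run with one MASK."""
    n = len(tokens)
    budget = round(n * mask_ratio)  # number of tokens to hide
    hidden = [False] * n
    while sum(hidden) < budget:
        # Simplification: spans are at least one token long and never exceed the budget.
        length = max(1, sample_poisson(mean_span, rng))
        length = min(length, budget - sum(hidden))
        # Start on a visible token so every iteration hides at least one new token.
        start = rng.choice([i for i in range(n) if not hidden[i]])
        for i in range(start, min(n, start + length)):
            hidden[i] = True
    out, prev_hidden = [], False
    for tok, is_hidden in zip(tokens, hidden):
        if not is_hidden:
            out.append(tok)
        elif not prev_hidden:
            out.append(MASK)  # adjacent hidden tokens collapse into a single MASK
        prev_hidden = is_hidden
    return out


def permute_sentences(sentences, rng):
    """Return the sentences of one document in a random order."""
    shuffled = list(sentences)
    rng.shuffle(shuffled)
    return shuffled


def add_noise(document, rng):
    """Apply both noise types to a document given as a list of token lists."""
    return [mask_spans(sentence, rng) for sentence in permute_sentences(document, rng)]


if __name__ == "__main__":
    rng = random.Random(0)
    doc = [
        "the cat sat on the mat".split(),
        "it was a sunny day".split(),
        "nobody else was home".split(),
    ]
    for noisy_sentence in add_noise(doc, rng):
        print(" ".join(noisy_sentence))
```

During pre-training, the output of a function like `add_noise` would be the encoder input and the original document the decoder target. Because a whole span collapses into one mask token, the model also has to infer how many tokens are missing.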

Practical and Theoretical Implications

The implications of this research are manifold. Practically, mBART facilitates robust performance in diverse translation scenarios, including low-resource and unsupervised settings. This aligns particularly well with real-world applications where language data can be sparse or non-parallel. Theoretically, the findings extend the understanding of how comprehensive sequence-to-sequence pre-training can serve as a universal foundation for downstream MT tasks.

Future Directions

Future developments may focus on scaling mBART to include more languages, potentially creating an mBART100 model. Further research could explore the optimization of pre-training strategies, such as adjusting the balance between seen and unseen language pairs. Additionally, addressing the deployment efficiency of these models in production environments remains a critical challenge, warranting innovative solutions in model compression and resource allocation.

In summary, the paper underscores the significant advantages of multilingual denoising pre-training for neural machine translation, presenting a versatile and powerful model in mBART. The contributions and findings propel the field forward, offering a clear pathway for future advancement in both practical applications and theoretical explorations of AI-driven translation systems.