Analyzing the Reasoning Capabilities of Language Models with oLMpics

The paper "oLMpics - On what Language Model Pre-training Captures" contributes to the ongoing study of what pre-trained language models (LMs) can do, focusing on symbolic reasoning tasks. LMs have achieved notable success in natural language processing, yet their reasoning capabilities remain poorly understood. The paper proposes eight tasks to assess these abilities and offers insight into whether performance stems from pre-training or from fine-tuning.

Key Findings and Methodology

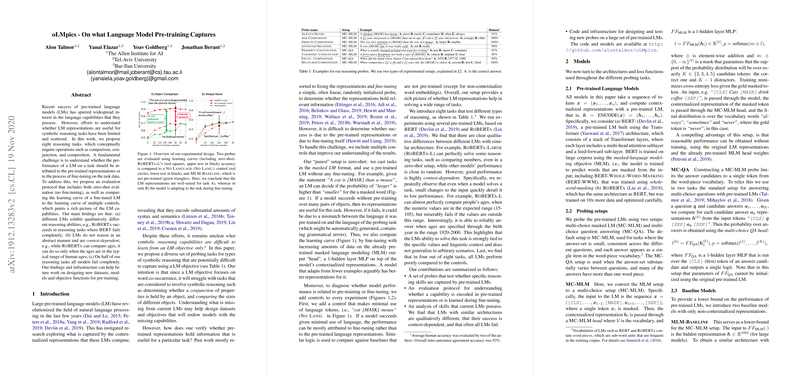

The core contribution of this paper is the introduction of eight reasoning tasks examining operations such as comparison, conjunction, and composition. Additionally, it proposes a dual evaluation protocol combining zero-shot evaluation and learning curves. Zero-shot evaluation shows what a model can do with no task-specific training, while learning curves show how quickly it picks up a task from small amounts of data. Together they separate knowledge embedded via pre-training from knowledge acquired during fine-tuning.
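To make the learning-curve half of the protocol concrete, here is a minimal sketch of how a curve might be reduced to two numbers: the best accuracy reached, and an average that favors early points. The function name `summarize_learning_curve`, the inverse-size weighting, and the sample numbers are assumptions chosen for illustration; they are not the paper's exact metric or results.

```python
from typing import Dict, Sequence


def summarize_learning_curve(
    train_sizes: Sequence[int], accuracies: Sequence[float]
) -> Dict[str, float]:
    """Reduce a learning curve to its max and an early-weighted average.

    Assumption: each point is weighted by 1 / train_size, so accuracy
    reached with few examples counts more than accuracy reached late.
    """
    if len(train_sizes) != len(accuracies) or not train_sizes:
        raise ValueError("train_sizes and accuracies must be non-empty and aligned")
    if any(n <= 0 for n in train_sizes):
        raise ValueError("training sizes must be positive")

    weights = [1.0 / n for n in train_sizes]
    total = sum(weights)
    early_weighted = sum(w * a for w, a in zip(weights, accuracies)) / total
    return {"max": max(accuracies), "early_weighted": early_weighted}


# Hypothetical curves: both models end at the same accuracy, but only the
# first one is already strong after very few training examples.
sizes = [100, 500, 2000]
fast_learner = summarize_learning_curve(sizes, [0.85, 0.90, 0.92])
slow_learner = summarize_learning_curve(sizes, [0.50, 0.70, 0.92])
print(fast_learner)
print(slow_learner)
```

Under this sketch, two models with the same final accuracy can still be told apart: a high early-weighted score suggests the ability was largely present after pre-training, whereas a low one suggests it was mostly learned during fine-tuning.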

- Divergent Reasoning Abilities: The findings reveal that models such as BERT, RoBERTa, and others exhibit distinct reasoning competencies. For instance, RoBERTa succeeded on reasoning tasks where BERT failed completely, indicating qualitative differences despite similar architectures.

- Context Dependency and Limitations: The paper finds that LM performance is context-dependent: models may perform well on inputs that resemble typical data but falter outside that range. For instance, models struggled with age comparisons when the ages fell outside the typical range of human ages, suggesting a lack of true abstraction or generalization capability.

- Performance Discrepancies: On half of the reasoning tasks, all models failed completely. This underscores existing gaps in LM capabilities, particularly on tasks involving uncommon or nuanced reasoning.

- Model Analysis: The methodology uses masked language modeling (MLM) and multi-choice question answering (MC-QA) for probing. The MC-MLM setup enables the study of pre-trained representations without fine-tuning, while learning curves assess how quickly models adapt to these tasks with minimal additional data.
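The MC-MLM idea mentioned above can be sketched without any model at all: given the scores a masked LM assigns to vocabulary tokens at the masked position, keep only the answer candidates, renormalize, and pick the most probable one. The sketch below is a simplified illustration under stated assumptions. The helper names (`age_comparison_probe`, `mc_mlm_predict`), the probe wording, and the logit values are hypothetical and stand in for a real model's output; they are not the paper's templates or results.

```python
import math
from typing import Dict, List, Tuple


def age_comparison_probe(
    age_a: int, age_b: int, mask_token: str = "[MASK]"
) -> Tuple[str, str]:
    """Build a masked age-comparison statement and its gold answer.

    Assumption: the wording is illustrative, not the paper's template.
    """
    if age_a == age_b:
        raise ValueError("ages must differ so that exactly one answer is correct")
    statement = (
        f"A {age_a} year old person is {mask_token} than "
        f"a {age_b} year old person."
    )
    gold = "younger" if age_a < age_b else "older"
    return statement, gold


def mc_mlm_predict(
    mask_logits: Dict[str, float], candidates: List[str]
) -> Tuple[str, Dict[str, float]]:
    """Choose among candidates using logits at the masked position.

    The softmax is taken over the candidates only, so tokens outside
    the answer set cannot win even if the model scores them highly.
    """
    missing = [c for c in candidates if c not in mask_logits]
    if missing:
        raise KeyError(f"no logit available for candidates: {missing}")
    top = max(mask_logits[c] for c in candidates)  # subtract max for stability
    exps = {c: math.exp(mask_logits[c] - top) for c in candidates}
    norm = sum(exps.values())
    probs = {c: v / norm for c, v in exps.items()}
    best = max(candidates, key=lambda c: probs[c])
    return best, probs


# Hypothetical logits standing in for a masked LM's output on the probe.
statement, gold = age_comparison_probe(21, 35)
fake_logits = {"younger": 3.1, "older": 1.4, "taller": 4.0}
prediction, probs = mc_mlm_predict(fake_logits, ["younger", "older"])
print(statement)
print(prediction, gold)
```

Restricting the softmax to the candidate set is what lets a probe of this kind run zero-shot: no new parameters are trained, so any accuracy above chance has to come from what the model learned during pre-training. Sweeping `age_a` and `age_b` beyond typical human ages is one way such a probe could expose the range dependence described in the list above.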

Practical and Theoretical Implications

The implications of this paper extend beyond an immediate understanding of model capabilities.

- Dataset and Model Design: These findings can guide the future design of datasets and pre-training objective functions to address known limitations in LM reasoning capabilities. Specifically, tasks can be crafted to encompass symbolic reasoning, which LMs struggle with.

- Improvement of Pre-training Strategies: The results suggest potential modifications to pre-training methodologies to embed more robust contextual reasoning. For instance, changes in training corpora composition or objectives could be explored to improve performance in abstract reasoning tasks.

- AI Model Evaluation: The established benchmarks provide a reference framework to evaluate current and future LMs, promoting a deeper understanding of models' reasoning abilities and limitations.

Future Developments

Future research will likely build on the proposed methodology by adding tasks, evaluating new model architectures or learning paradigms, and further refining the distinction between knowledge gained through pre-training and knowledge obtained through fine-tuning. The ultimate aim remains to achieve models that understand and apply complex reasoning abstractly, independent of specific contexts.

This paper lays the groundwork for systematically examining reasoning in LMs, encouraging the development of models that are not only statistically proficient but also adept at abstract, symbolic thought processes.