An Analysis of the System Causability Scale (SCS)

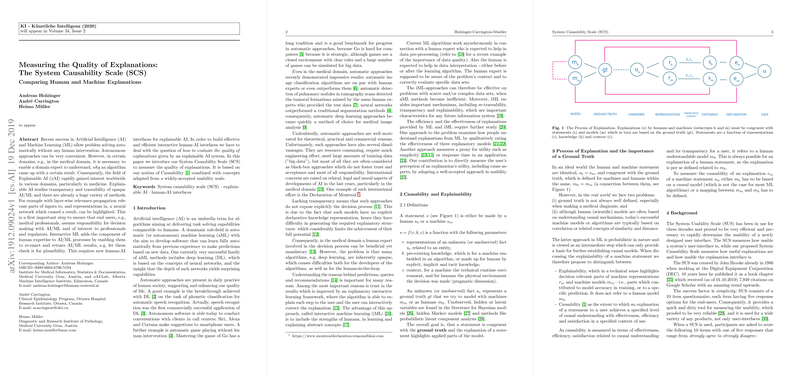

The paper "Measuring the Quality of Explanations: The System Causability Scale (SCS)" by Andreas Holzinger, Andre Carrington, and Heimo Müller addresses an emerging problem in Explainable Artificial Intelligence (xAI): how to measure the quality of the explanations an AI system produces. The authors introduce the System Causability Scale (SCS) as a metric for this purpose, emphasizing the importance of a reliable human-AI interface.

Core Proposition and Context

The increasing use of AI, particularly in sensitive domains such as medicine, has heightened the demand for transparency and interpretability. The opaque nature of many machine learning models, especially those based on deep learning, presents challenges in trust and accountability. Consequently, xAI has become central to ensuring that such systems provide comprehensible and justifiable outputs.

The authors argue that existing usability metrics, such as the System Usability Scale (SUS), evaluate how users interact with a system but provide no standardized way to measure the quality and causability of the explanations an AI system gives. The SCS is proposed to fill this gap: it assesses the extent to which an explanation helps a user reach causal understanding efficiently and effectively.

Construction of SCS

The SCS is adapted from the widely used SUS. It evaluates AI system explanations through a questionnaire whose items are rated on a Likert scale. Participants rate explanations on their comprehensibility, their relevance, and the extent to which they help in discerning causality. Unlike SUS, SCS focuses explicitly on the causal aspects of explanations, forming a bridge between AI outputs and user interpretation.
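To make the questionnaire-based scoring concrete, the following is a minimal sketch of how Likert ratings could be turned into a single normalized score. It assumes ten items rated from 1 to 5 and a score computed as the sum of ratings divided by the maximum possible total; these specifics are not stated in this summary and should be checked against the paper. The function name `scs_score` and the example ratings are hypothetical.

```python
from typing import Sequence


def scs_score(ratings: Sequence[int], scale_max: int = 5) -> float:
    """Normalize summed Likert ratings by the maximum possible total.

    Assumption (not taken from this summary): every item is rated on
    1..scale_max and all items carry equal weight.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    for r in ratings:
        if not 1 <= r <= scale_max:
            raise ValueError(f"rating {r} is outside 1..{scale_max}")
    return sum(ratings) / (scale_max * len(ratings))


# Hypothetical responses from one participant to ten items.
example_ratings = [5, 4, 4, 5, 3, 4, 5, 4, 4, 5]
print(scs_score(example_ratings))
```

Under these assumptions, a score close to 1 means the participant rated the explanation favorably on nearly every item, and the lowest possible score is 1 divided by `scale_max`.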

Implications and Findings

The utility of SCS is not only theoretical. The paper illustrates its application in the medical domain, using the Framingham Risk Tool as a test case. The case study demonstrates SCS's ability to gauge the quality of output explanations in a real-world context. The findings suggest that SCS can reliably measure end-user satisfaction and the understandability of AI explanations, fostering better integration of AI into human decision-making processes.

Results presented in the paper underline that explanations should be contextually relevant and aligned with the user's existing knowledge. The paper also emphasizes that users should be able to adjust the level of detail, understand explanations with minimal external aid, and encounter no inconsistencies.

Future Directions and Challenges

The introduction of the SCS gives the AI research community a tool for developing AI systems that prioritize transparency and user-centric design. However, the paper recognizes a limitation inherent in Likert-scale methods: respondents may not perceive the intervals between scale points as equal, so ratings from different respondents are not strictly comparable as interval data.
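The Likert-scale caveat can be illustrated with a small sketch. It compares two hypothetical groups of ratings first under equal spacing between scale points and then under an unequal "perceived" spacing. The data, the `perceived` mapping, and the helper `remap` are all invented for illustration and are not taken from the paper.

```python
from statistics import mean, median

# Hypothetical ratings for one questionnaire item from two groups.
group_a = [3, 3, 3, 3, 3]
group_b = [1, 1, 3, 5, 5]

# Hypothetical perceived spacing: the order of the scale points is kept,
# but the top categories feel much further apart than the bottom ones.
perceived = {1: 1, 2: 2, 3: 3, 4: 6, 5: 10}


def remap(ratings):
    """Replace each rating with its assumed perceived value."""
    return [perceived[r] for r in ratings]


# With equal spacing the two group means coincide.
print(mean(group_a), mean(group_b))
# With unequal spacing the means diverge.
print(mean(remap(group_a)), mean(remap(group_b)))
# The medians depend only on rank order and stay equal in both cases.
print(median(group_a), median(group_b))
print(median(remap(group_a)), median(remap(group_b)))
```

In this example the comparison of means changes once the spacing between scale points changes, while the comparison of medians does not. This is one reason ordinal summaries are often reported alongside, or instead of, means for Likert data.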

The future work envisioned by the authors includes extensive empirical evaluation to refine the SCS and to confirm its applicability in domains beyond healthcare. Methods for quantifying and communicating uncertainty in AI explanations could further strengthen the role of SCS in xAI. Broader application of the SCS promises to improve how interactive machine learning (iML) systems are evaluated, with implications for the design of algorithms that are not only technically robust but also intuitively understandable to end users.

In conclusion, the System Causability Scale emerges as a pivotal contribution to the field of xAI, enabling comprehensive assessment of AI systems’ explainability. The notion that causability should be directly measured emphasizes the need for AI systems to support human understanding and decision-making effectively. As AI systems become deeply integrated across various sectors, tools like the SCS will be critical in ensuring these systems operate transparently and align with human cognitive processes.