Multi-Task Vision and Language Representation Learning

The paper "12-in-1: Multi-Task Vision and Language Representation Learning" addresses the challenge of joint training across multiple, diverse vision-and-language (V&L) tasks. Research in this area has largely consisted of specialized models dedicated to specific tasks. These specialized approaches forgo significant potential efficiencies, in both model size and performance, that can be gained by exploiting the similarities shared across tasks. The authors propose a unified multi-task framework that handles 12 tasks with a single model, covering visual question answering (VQA), image retrieval, referring expression grounding, and multi-modal verification.

Core Contributions and Advancements

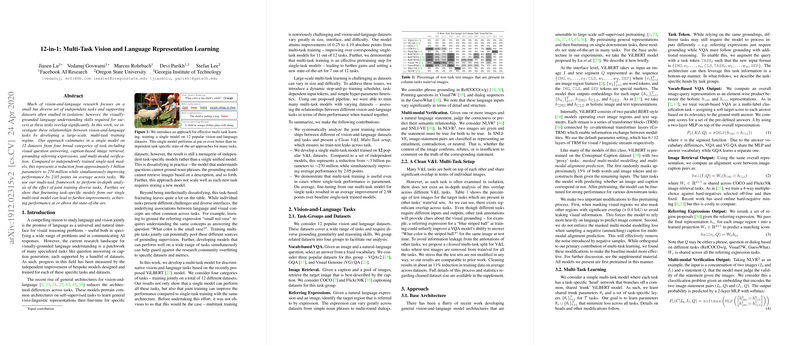

- Unified Multi-Task Framework: The proposed model integrates 12 datasets spanning four broad categories of V&L tasks, demonstrating that a single model can learn representations that generalize across distinct problem spaces. The unified model reduces the parameter count from roughly 3 billion (the total if each task were trained as a separate model) to approximately 270 million, a reduction of more than a factor of ten (about 11x).

- Enhanced Performance: Joint training on multiple tasks does not merely maintain task performance but improves it. On average, the multi-task model surpasses single-task baselines by 2.05 points, with gains of up to 4.19 points on individual tasks.

- Dynamic Stop-and-Go Training Scheduler: This specialized training scheduler mitigates overfitting, which typically arises when smaller or easier tasks are over-exposed during multi-task training, and counters catastrophic forgetting by dynamically adjusting which tasks receive training focus.

- Practical Pretraining Step: Multi-task training is shown to be an effective and versatile pretraining step: fine-tuning the multi-task model on individual tasks yields further performance improvements and achieves new state-of-the-art results on some of the tasks.
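
The stop-and-go idea in the scheduler bullet above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the class `StopAndGoScheduler`, its method names, and the parameters `patience`, `min_gain`, `max_drop`, and `stop_gap` (with their default values) are hypothetical and chosen only for the example. It assumes each task periodically reports a positive validation score where higher is better. A task whose score has plateaued moves to "stop" mode and is trained only occasionally; if its score later drops noticeably below its best, it returns to "go" mode.

```python
from dataclasses import dataclass, field


@dataclass
class TaskState:
    """Validation history and current mode for one task (hypothetical helper)."""
    name: str
    mode: str = "go"  # "go": train every step; "stop": train only every stop_gap steps
    best_score: float = float("-inf")
    history: list = field(default_factory=list)


class StopAndGoScheduler:
    """Illustrative stop-and-go scheduler; all thresholds are assumptions."""

    def __init__(self, task_names, patience=2, min_gain=0.001,
                 max_drop=0.005, stop_gap=4):
        self.tasks = {name: TaskState(name) for name in task_names}
        self.patience = patience    # number of evaluations to look back
        self.min_gain = min_gain    # relative gain below which a task counts as converged
        self.max_drop = max_drop    # relative drop from best that reactivates a task
        self.stop_gap = stop_gap    # a stopped task still trains every stop_gap steps

    def report_validation(self, name, score):
        """Record a new validation score and update the task's mode."""
        task = self.tasks[name]
        task.history.append(score)
        task.best_score = max(task.best_score, score)
        if task.mode == "go" and len(task.history) > self.patience:
            past = task.history[-1 - self.patience]
            # Converged: too little improvement over the last `patience` evaluations.
            if score - past < self.min_gain * abs(past):
                task.mode = "stop"
        elif task.mode == "stop":
            # Forgetting: score fell too far below the best seen, so resume training.
            if task.best_score - score > self.max_drop * abs(task.best_score):
                task.mode = "go"

    def tasks_for_step(self, step):
        """Return the names of the tasks that should receive a batch at this step."""
        return [name for name, task in self.tasks.items()
                if task.mode == "go" or step % self.stop_gap == 0]


if __name__ == "__main__":
    sched = StopAndGoScheduler(["vqa", "retrieval"])
    for vqa_score, retrieval_score in [(70.0, 50.0), (70.01, 55.0), (70.02, 60.0)]:
        sched.report_validation("vqa", vqa_score)
        sched.report_validation("retrieval", retrieval_score)
    print(sched.tasks_for_step(1))  # ['retrieval']: vqa has plateaued and is stopped
    print(sched.tasks_for_step(4))  # ['vqa', 'retrieval']: stopped tasks train every 4th step
```

Keeping a stopped task on a sparse schedule rather than freezing it entirely is the design choice that matters here: the task still receives occasional updates, and the drop check gives it a way back to full training if the shared representation drifts away from it.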

Implications and Future Directions

The implications of this work are two-fold:

- Theoretical Implications: The success of multi-task models in V&L tasks suggests that shared visual and linguistic patterns exist across a spectrum of seemingly disparate tasks. The efficiency of a shared model architecture gives insights into developing generalizable AI systems, which could be extended to other modalities or more complex tasks.

- Practical Implications: The significant parameter reduction lowers the computational barrier to deploying sophisticated multi-task V&L models in real-world applications, making deployment more feasible in resource-constrained environments such as mobile devices.

This framework could be extended to even more diverse tasks, including dynamic scene understanding tasks such as video captioning or activity recognition, to push the boundaries of multi-modal representation learning further. The modularity of the approach also invites future work on architectural innovations that could improve task-specific fine-tuning efficiency and reduce interference between tasks.

In conclusion, this paper sets a precedent for the scalability and adaptability of multi-task approaches in complex AI problems, offering a robust roadmap for cross-task and cross-domain learning in AI.