Noise Robust Generative Adversarial Networks

The paper "Noise Robust Generative Adversarial Networks" proposes a way to make Generative Adversarial Networks (GANs) robust to noise in their training data. The problem matters because a standard GAN tends to reproduce its training distribution faithfully, noise included, which prevents it from generating clean outputs in domains where noise-free data is costly or impractical to collect.

Overview of Contributions

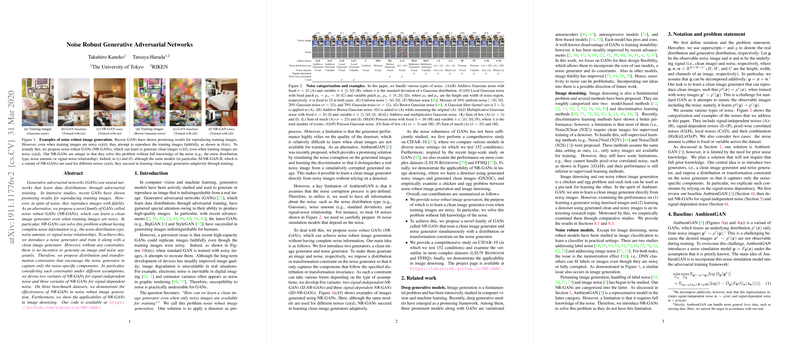

The core contribution of this work is Noise Robust GANs (NR-GANs), a family of GANs that trains a noise generator alongside the standard image generator. These models do not require complete prior knowledge of the noise, such as its distribution type or noise level. Distribution and transformation constraints on the noise generator encourage it to capture only the noise component, so that the image generator on its own can produce clean images.
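The two-generator design described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the generators are hypothetical stand-ins (fixed random linear maps), and the names `image_generator`, `noise_generator`, `sample`, and all sizes are assumptions chosen for illustration. The point is only the data flow: during training the discriminator would see the sum of the two generator outputs, while at generation time the image generator is used alone.

```python
import numpy as np

rng = np.random.default_rng(0)

H, W, C = 8, 8, 3   # toy image size (assumption)
Z_DIM = 16          # toy latent size (assumption)

# Hypothetical stand-ins for trained networks: fixed random linear maps.
W_IMG = rng.normal(size=(Z_DIM, H * W * C))
W_NOISE = rng.normal(size=(Z_DIM, H * W * C)) * 0.1


def image_generator(z_x):
    """Map a latent vector to a 'clean' image with values in [-1, 1]."""
    return np.tanh(z_x @ W_IMG).reshape(H, W, C)


def noise_generator(z_n):
    """Map a separate latent vector to a noise map of the same shape."""
    return (z_n @ W_NOISE).reshape(H, W, C)


def sample(training):
    """Return a noisy sample during training, a clean one otherwise."""
    z_x = rng.normal(size=Z_DIM)
    z_n = rng.normal(size=Z_DIM)
    clean = image_generator(z_x)
    if training:
        # The discriminator would compare this sum with noisy real images.
        return clean + noise_generator(z_n)
    # At generation time the noise branch is simply dropped.
    return clean


noisy_fake = sample(training=True)
clean_fake = sample(training=False)
```

Without further constraints nothing in this setup forces the noise branch to model only noise, which is why the constraints on the noise generator matter.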

The paper introduces five variants of NR-GANs:

- SI-NR-GAN-I and II: Tailored for signal-independent noise. These variants rely on assumptions about the noise distribution or about the invariance of the noise under certain transformations.

- SD-NR-GAN-I, II, and III: Designed for signal-dependent noise. These variants either use prior knowledge of the noise explicitly or learn the signal-noise relationship implicitly, which lets them cover a range of noise types.
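As a rough illustration of the kinds of constraint the variants above rely on, the following NumPy sketch shows one possible form of each. These are assumptions for illustration rather than the paper's exact formulations: a Gaussian distribution constraint written as a scale map times standard normal samples, a transformation constraint built from random 90-degree rotations and channel shuffles, and a multiplicative model as one example of a known signal-noise relationship. All function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def gaussian_noise(sigma_map):
    """Distribution constraint (assumed Gaussian): noise = sigma * eps."""
    eps = rng.standard_normal(sigma_map.shape)
    return sigma_map * eps


def random_transform(noise):
    """Transformation constraint (illustrative): rotate and shuffle channels.

    If the noise is invariant to these operations but the image content
    is not, applying them to the noise branch discourages it from
    carrying image structure.
    """
    k = int(rng.integers(0, 4))                  # number of 90-degree turns
    out = np.rot90(noise, k=k, axes=(0, 1))      # rotate in the spatial plane
    perm = rng.permutation(out.shape[-1])        # random channel order
    return out[..., perm]


def multiplicative_noise(clean, sigma_map):
    """Signal-dependent noise with an assumed known relationship."""
    return clean * gaussian_noise(sigma_map)


# Toy inputs (sizes and values are assumptions).
clean = rng.uniform(-1.0, 1.0, size=(8, 8, 3))
sigma = np.full((8, 8, 3), 0.1)

si_noise = gaussian_noise(sigma)
si_noise_transformed = random_transform(si_noise)
sd_noise = multiplicative_noise(clean, sigma)
```

In this sketch the first two functions correspond to the signal-independent setting and the third to a signal-dependent setting where the relationship is given in advance; learning that relationship implicitly would require a trained network and is not shown.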

Numerical Results and Evaluation

The NR-GAN framework is evaluated on three benchmark datasets: CIFAR-10, LSUN Bedroom, and FFHQ. The reported results show NR-GANs outperforming both standard GANs and GANs trained on denoiser-preprocessed data across 152 experimental conditions. Among the variants, SI-NR-GAN-II and SD-NR-GAN-II remain robust across the noise settings tested, including cases where their noise assumptions are partially violated.

Implications and Future Directions

In practice, NR-GANs are relevant to applications that need clean image generation from noisy datasets, which are common in medical imaging, astronomy, and other domains with inherent observational noise. On the theoretical side, the work adds to the understanding of noise modeling within generative frameworks: it suggests that noise and data generators can be decoupled through adversarial training combined with suitable constraints.

Future research could address cases where the constraints are too weak to isolate the noise effectively, particularly on complex datasets. Combining the NR-GAN approach with other generative models, such as autoregressive or flow-based models, could also broaden its applicability and further improve robustness.

In summary, the paper advances noise robustness in GANs and offers practical answers to the long-standing problem of noisy training data in generative modeling. Its methods and broad evaluation mark a step towards more reliable GANs in noise-prone settings.