Analysis of "Rigging the Lottery: Making All Tickets Winners"

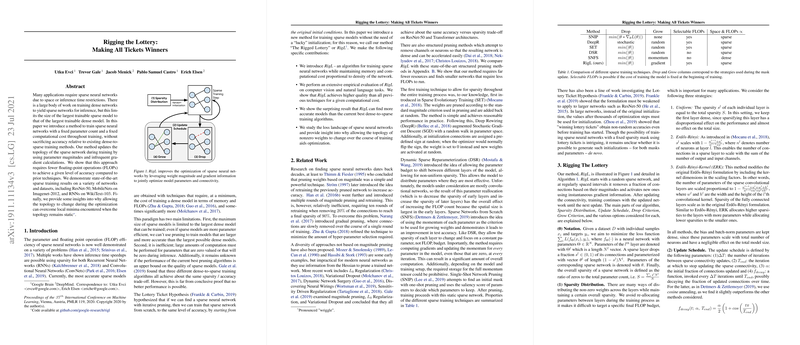

The paper "Rigging the Lottery: Making All Tickets Winners" introduces RigL, an algorithm that trains sparse neural networks while keeping the parameter count and computational cost fixed throughout training. Its key innovation is to update the topology of the sparse network periodically, using parameter magnitudes together with infrequent gradient calculations. The paper reports that this method reaches higher accuracy than existing dense-to-sparse training techniques while requiring fewer floating-point operations (FLOPs).
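The topology update described above can be illustrated with a minimal sketch for a single layer. It is a simplified illustration, not the authors' implementation: the function name `update_topology`, the flat-list representation, the `drop_fraction` parameter, and the choice to start newly grown connections at zero are all assumptions made for this example. Active connections with the smallest weight magnitude are dropped, and the same number of inactive connections with the largest gradient magnitude are grown, so the number of nonzero parameters stays constant.

```python
def update_topology(weights, mask, dense_grads, drop_fraction):
    """One drop/grow step on a single layer (illustrative sketch).

    weights, mask and dense_grads are flat lists of equal length.
    mask[i] is 1 for an active connection and 0 for an inactive one.
    Returns (new_weights, new_mask) with the same number of active connections.
    """
    active = [i for i, m in enumerate(mask) if m == 1]
    inactive = [i for i, m in enumerate(mask) if m == 0]
    # Number of connections to swap, capped by how many can be grown.
    k = min(int(drop_fraction * len(active)), len(inactive))

    # Drop: the k active connections with the smallest weight magnitude.
    dropped = sorted(active, key=lambda i: abs(weights[i]))[:k]
    # Grow: the k inactive connections with the largest gradient magnitude.
    # Simplification: only connections inactive before the drop are candidates.
    grown = sorted(inactive, key=lambda i: abs(dense_grads[i]), reverse=True)[:k]

    new_weights, new_mask = list(weights), list(mask)
    for i in dropped:
        new_mask[i] = 0
        new_weights[i] = 0.0
    for i in grown:
        new_mask[i] = 1
        new_weights[i] = 0.0  # assumption: new connections start at zero
    return new_weights, new_mask


# Toy layer with 6 possible connections, 3 of them active.
weights = [0.5, -0.1, 0.0, 0.0, 0.9, 0.0]
mask = [1, 1, 0, 0, 1, 0]
dense_grads = [0.1, 0.2, 0.05, -0.8, 0.3, 0.4]
new_weights, new_mask = update_topology(weights, mask, dense_grads, 0.34)
```

In this toy case one connection is swapped: index 1 (smallest active weight) is dropped and index 3 (largest gradient among inactive connections) is grown. Because the dense gradient is only needed at these update steps, it can be computed infrequently rather than at every training step.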

Core Contributions

The authors outline several critical contributions of RigL:

- Algorithmic Development: RigL is presented as a novel algorithm for training sparse neural networks whose memory and computational costs scale with the size of the sparse model rather than with that of the corresponding dense network.

- Empirical Evaluation: RigL is evaluated on computer vision and natural language tasks, where it achieves higher accuracy than prior methods for a given computational budget.

- Comparison with Dense-to-Sparse Methods: Perhaps surprisingly, RigL finds more accurate models than the current best dense-to-sparse training algorithms.

- Optimization Insights: The paper examines the loss landscape of sparse neural networks and notes that allowing the set of nonzero weights to change during training helps optimization, potentially letting the model escape local minima in which a static topology would remain stuck.

Numerical Results and Claims

The paper reports state-of-the-art sparse training results for RigL on datasets such as ImageNet-2012 and WikiText-103. For instance, at high sparsity (e.g., 96.5%), RigL outperforms magnitude-based iterative pruning, and it requires fewer FLOPs to reach a similar accuracy. The paper also reports training 75% sparse MobileNet models without any loss in performance.
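To give a rough sense of why infrequent dense gradient calculations keep the FLOP count low, the following back-of-the-envelope sketch estimates the amortized per-step training cost of a single fully connected layer. It rests on assumptions that are not taken from the text above: one multiply and one add per nonzero weight in the forward pass, a backward pass that costs about twice the forward pass (split evenly between activation gradients and weight gradients), and a dense weight gradient computed once every `delta_t + 1` steps. The function names and the layer size are hypothetical.

```python
def linear_forward_flops(n_in, n_out, sparsity=0.0):
    """Approximate forward FLOPs of a fully connected layer for one example,
    counting one multiply and one add per nonzero weight."""
    nonzero = round(n_in * n_out * (1.0 - sparsity))
    return 2 * nonzero


def amortized_training_flops(f_sparse, f_dense, delta_t):
    """Average per-step training FLOPs over delta_t + 1 steps.

    Assumed accounting:
      regular step: forward + activation grads + sparse weight grads = 3 * f_sparse
      update step:  forward + activation grads + dense weight grads
                    = 2 * f_sparse + f_dense
    """
    regular_steps = 3 * f_sparse * delta_t
    update_step = 2 * f_sparse + f_dense
    return (regular_steps + update_step) / (delta_t + 1)


# Hypothetical 100 x 100 layer at 90% sparsity, dense gradient every 100th step.
f_dense = linear_forward_flops(100, 100)
f_sparse = linear_forward_flops(100, 100, sparsity=0.9)
per_step = amortized_training_flops(f_sparse, f_dense, delta_t=99)
dense_per_step = 3 * f_dense
```

Under these assumptions the amortized cost stays close to that of purely sparse training (`3 * f_sparse`) and well below dense training (`3 * f_dense`), because the dense gradient is paid for on only one step in every hundred.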

Theoretical and Practical Implications

RigL has both theoretical and practical implications:

- Efficiency in Training Large Sparse Models: Because its training cost stays at the level of the sparse model, RigL is well suited to settings constrained by compute or memory, such as models deployed in edge computing environments.

- Insights into Sparse Neural Network Optimization: RigL's ability to adjust network topology during training motivates further work on adaptive training approaches and suggests new ways to traverse complex loss landscapes efficiently.

Future Prospects

Because RigL provides a method for efficient sparse network training, future research could extend the approach to hardware and software platforms where it has not yet been explored. The method also opens avenues for developing more sophisticated dynamic sparsification techniques and for applying them to larger models that are currently impractical to train because of resource constraints. Additionally, exploring the theoretical limits of sparse network performance could yield further innovations in network design and architecture.