Overview of "CCNet: Extracting High Quality Monolingual Datasets from Web Crawl Data"

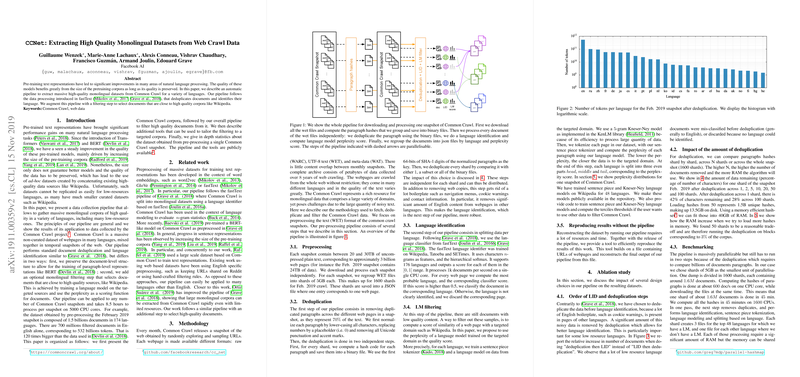

The paper "CCNet: Extracting High Quality Monolingual Datasets from Web Crawl Data" presents a systematic approach to improving the quality and scale of pre-training corpora for multilingual models. The authors address a significant challenge in NLP by building a pipeline that extracts large-scale monolingual datasets from Common Crawl, which contains text in a multitude of languages.

Methodology

The methodology relies on an automatic pipeline that builds upon the data processing techniques introduced by fastText: deduplicating documents and identifying the language of each one. The crucial addition is a filtering step that selects documents close to high-quality corpora such as Wikipedia. This is done by training a language model on the high-quality reference domain and using the perplexity it assigns to each crawled document as the selection metric: a low perplexity indicates that a document resembles the reference text.
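The perplexity-based selection described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the add-one-smoothed unigram model, the whitespace tokenization, the function names, and the threshold value of 10.0 are all assumptions chosen to keep the example self-contained.

```python
import math
from collections import Counter


def train_unigram(reference_docs):
    """Count tokens in the reference (high-quality) corpus."""
    counts = Counter(tok for doc in reference_docs for tok in doc.lower().split())
    return counts, sum(counts.values())


def perplexity(doc, counts, total):
    """Perplexity of `doc` under an add-one-smoothed unigram model (toy stand-in)."""
    tokens = doc.lower().split()
    if not tokens:
        return float("inf")
    vocab = len(counts) + 1  # one extra slot reserved for unseen tokens
    log_prob = sum(math.log((counts[t] + 1) / (total + vocab)) for t in tokens)
    return math.exp(-log_prob / len(tokens))


def filter_by_perplexity(docs, counts, total, threshold):
    """Keep documents whose perplexity is at or below `threshold`."""
    return [d for d in docs if perplexity(d, counts, total) <= threshold]


# Hypothetical reference corpus standing in for a high-quality source.
reference = [
    "the cat sat on the mat",
    "the dog sat on the rug",
]
counts, total = train_unigram(reference)

candidates = ["the cat sat on the rug", "zxqv click here buy now"]
scores = [perplexity(d, counts, total) for d in candidates]
kept = filter_by_perplexity(candidates, counts, total, threshold=10.0)
```

The first candidate shares its vocabulary with the reference text and scores well below the threshold, while the second consists entirely of unseen tokens and is dropped. A stronger language model changes the scores but not the selection logic.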

The pipeline is designed for scalability and processes each Common Crawl snapshot independently. A single snapshot, the February 2019 collection, contains 1.5 billion documents across 174 languages, which demonstrates the pipeline's capacity to handle data at this scale. Of particular note is the English corpus, which consists of over 700 million filtered documents and 532 billion tokens and is considerably larger than prior datasets used for similar NLP applications.
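To illustrate what independent per-snapshot processing means in practice, the sketch below deduplicates each snapshot on its own, sharing no state between snapshots. It is a simplified assumption-laden example: the normalization rule, the use of SHA-1 digests over whole documents, the function names, and the snapshot names are all hypothetical.

```python
import hashlib


def normalize(text):
    """Lowercase and collapse whitespace so trivial variants hash alike."""
    return " ".join(text.lower().split())


def dedup_snapshot(docs):
    """Drop exact duplicates (after normalization) within one snapshot."""
    seen = set()
    unique = []
    for doc in docs:
        digest = hashlib.sha1(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


def process_snapshots(snapshots):
    """Process each snapshot on its own; no state is shared between them."""
    return {name: dedup_snapshot(docs) for name, docs in snapshots.items()}


# Hypothetical snapshots with made-up names and contents.
snapshots = {
    "snapshot-a": ["Hello world", "hello   WORLD", "Another page"],
    "snapshot-b": ["Hello world", "Yet another page"],
}
result = process_snapshots(snapshots)
```

Because each call to `dedup_snapshot` starts from an empty `seen` set, snapshots can be handled in any order or in parallel. The trade-off is that a document appearing in two snapshots survives in both.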

Experimental Results

The paper reports experiments that validate the proposed approach. The extracted monolingual corpora are tested by training language models such as BERT on them and evaluating the models on downstream tasks such as XNLI. The results show noticeable improvements in model performance, especially for low-resource languages, where traditional high-quality datasets are too small.

Implications

The implications of this work are both practical and theoretical. Practically, the pipeline enables the creation of vast and diverse datasets that are critical for developing robust multilingual NLP models. This is particularly advantageous for less-resourced languages that traditionally suffer from data scarcity.

Theoretically, the use of document-level structure retention and perplexity-based filtering contributes to the ongoing discourse on efficient data utilization for model training. By showing that more tailored curation processes can lead to performance gains, this work encourages further research into data filtering techniques that optimize training outcomes for models like BERT.

Future Directions

Given the demonstrated success of the filtering methodology, future research could explore:

- Multi-Domain Filtering: Investigating how the choice of high-quality reference domain affects downstream model performance.

- Adaptive Thresholds: Adjusting perplexity thresholds dynamically based on ongoing model feedback.

- Extension to More Languages: Scaling the approach to cover more languages, which could help narrow gaps in NLP capabilities worldwide.
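As one simple starting point for the adaptive-threshold direction, a threshold can be derived from each language's own perplexity distribution instead of being fixed globally. The sketch below is only an assumption about how this might look: the nearest-rank percentile rule, the `keep_fraction` parameter, the function names, and the scores are hypothetical, and it does not incorporate model feedback.

```python
import math


def percentile_threshold(scores, keep_fraction):
    """Return the score at or below which `keep_fraction` of documents fall."""
    if not scores or not 0 < keep_fraction <= 1:
        raise ValueError("need scores and 0 < keep_fraction <= 1")
    ordered = sorted(scores)
    rank = math.ceil(keep_fraction * len(ordered))  # nearest-rank method
    return ordered[rank - 1]


def thresholds_per_language(scores_by_lang, keep_fraction):
    """Compute one data-dependent threshold per language."""
    return {
        lang: percentile_threshold(scores, keep_fraction)
        for lang, scores in scores_by_lang.items()
    }


# Made-up perplexity scores for two languages on very different scales.
scores_by_lang = {
    "en": [120.0, 300.0, 90.0, 800.0, 150.0, 60.0],
    "sw": [900.0, 1500.0, 700.0],
}
thresholds = thresholds_per_language(scores_by_lang, keep_fraction=0.5)
```

A per-language threshold avoids discarding nearly all documents in a language whose perplexities are uniformly higher, for example because its reference corpus is small.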

Conclusion

The proposed CCNet pipeline offers a comprehensive way to improve the quality of web crawl data for NLP applications. By systematically filtering and selecting high-quality data, the approach improves the language models trained on that data and lays a foundation for future work on multilingual data processing. This work is a valuable contribution to the field, promoting better use of, and access to, diverse linguistic resources.