Structured Pruning of LLMs: An Analytical Overview

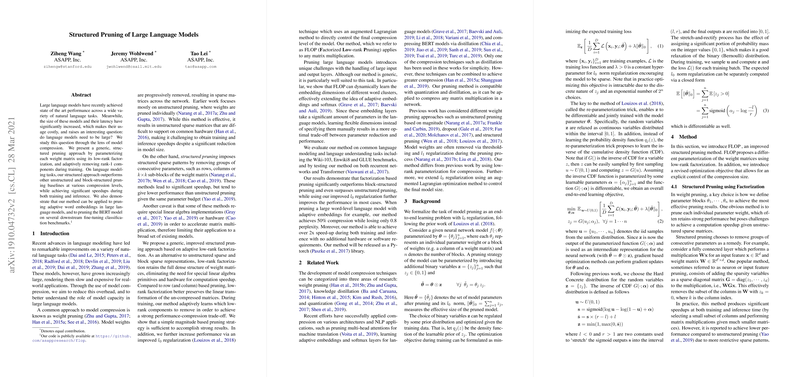

The paper "Structured Pruning of LLMs" addresses the size and computational cost of large language models (LLMs). These models are state of the art across diverse NLP tasks but expensive to train and serve, which raises the question of whether they actually need to be so large. The paper focuses on structured pruning through adaptive low-rank factorization as a way to reduce the computational burden while preserving model performance.

Structured vs. Unstructured Pruning

The paper opens by comparing structured and unstructured pruning. Unstructured pruning removes individual weights, producing sparse matrices that standard hardware supports poorly, so the expected speedups during training and inference often fail to materialize. Structured pruning, by contrast, removes groups of parameters in regular patterns, which maps better onto existing hardware but often costs accuracy. The paper's contribution is to use low-rank factorization for structured pruning: the weight matrices stay dense, which simplifies implementation and improves runtime efficiency.
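The contrast can be made concrete with a small sketch. It is an illustration, not the paper's code: the function names `unstructured_prune` and `low_rank_param_count` are hypothetical, the magnitude criterion is an assumed stand-in for unstructured pruning in general, and the 8 x 8 size and rank 2 are arbitrary choices. It shows that zeroing half the entries leaves a matrix of the same shape, while a rank-2 factorization stores the same number of values in two small dense matrices.

```python
import random


def unstructured_prune(weights, sparsity):
    """Zero the smallest-magnitude entries; the matrix keeps its full shape."""
    rows, cols = len(weights), len(weights[0])
    # Rank every (row, col) position by the magnitude of its weight.
    order = sorted(
        ((i, j) for i in range(rows) for j in range(cols)),
        key=lambda ij: abs(weights[ij[0]][ij[1]]),
    )
    pruned = [row[:] for row in weights]
    for i, j in order[: int(rows * cols * sparsity)]:
        pruned[i][j] = 0.0
    return pruned


def low_rank_param_count(d_out, d_in, rank):
    """Values stored by a (d_out x rank) and a (rank x d_in) dense factor."""
    return rank * (d_out + d_in)


random.seed(0)
d_out, d_in = 8, 8  # arbitrary toy dimensions
W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]

sparse_W = unstructured_prune(W, sparsity=0.5)
zeros = sum(v == 0.0 for row in sparse_W for v in row)
nonzero_sparse = d_out * d_in - zeros
dense_low_rank = low_rank_param_count(d_out, d_in, rank=2)

print("sparse matrix shape:", len(sparse_W), "x", len(sparse_W[0]))
print("nonzero values after unstructured pruning:", nonzero_sparse)
print("values stored by the rank-2 factors:", dense_low_rank)
```

Both variants keep the same number of values, but only the factorized one can run on ordinary dense matrix kernels without sparse-format support.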

Methodology

The proposed method, Factorized Low-rank Pruning (FLOP), compresses a model by parameterizing each weight matrix as a low-rank factorization, that is, a sum of rank-1 components, and adaptively pruning those components during training. The approach applies to ordinary weight matrices and also to embedding and softmax layers, which account for a substantial share of the parameters in NLP models with large vocabularies.
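The idea of pruning rank-1 components can be sketched in a few lines. This is a toy illustration under stated assumptions, not the authors' implementation: the weight is written as W = P diag(z) Q, where the gate vector `z` and the helper names (`factorized_weight`, `drop_pruned_components`) are introduced here for illustration, and the gates are set by hand rather than learned. Removing the components whose gate is zero yields two smaller dense factors that reproduce the same matrix.

```python
def matmul(a, b):
    """Plain dense matrix product of two lists of lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def factorized_weight(P, z, Q):
    """W = P @ diag(z) @ Q, i.e. the sum over k of z[k] * outer(P[:, k], Q[k, :])."""
    scaled_P = [[P[i][k] * z[k] for k in range(len(z))] for i in range(len(P))]
    return matmul(scaled_P, Q)


def drop_pruned_components(P, z, Q):
    """Remove rank-1 components whose gate is zero; fold the kept gates into P."""
    keep = [k for k, gate in enumerate(z) if gate != 0.0]
    P_small = [[row[k] * z[k] for k in keep] for row in P]
    Q_small = [Q[k][:] for k in keep]
    return P_small, Q_small


# Toy factors: a 3 x 3 weight expressed with 4 rank-1 components.
P = [[1.0, 2.0, 0.5, -1.0], [0.0, 1.0, 2.0, 3.0], [-1.0, 0.5, 1.0, 2.0]]
Q = [[1.0, 0.0, 2.0], [3.0, 1.0, -1.0], [0.5, 2.0, 1.0], [2.0, -2.0, 0.0]]
z = [1.0, 0.0, 1.0, 0.0]  # hand-set gates: components 1 and 3 are pruned

W_full = factorized_weight(P, z, Q)
P_small, Q_small = drop_pruned_components(P, z, Q)
W_small = matmul(P_small, Q_small)

params_before = len(P) * len(P[0]) + len(Q) * len(Q[0])
params_after = len(P_small) * len(P_small[0]) + len(Q_small) * len(Q_small[0])
print("same weight matrix:", W_full == W_small)
print("factor parameters before and after:", params_before, params_after)
```

Because the pruned model is just two smaller dense matrices, no sparse storage format or masking is needed at inference time.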

A noteworthy aspect of FLOP is its use of L0 regularization on the pruning decisions, strengthened by an augmented Lagrangian method. This lets a model be pruned to an exact target size, which matters in practice when compute or memory budgets are fixed.
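Why an augmented Lagrangian helps hit an exact size can be seen on a one-variable toy problem. The sketch below is generic textbook material (the method of multipliers), not the paper's training procedure: the "model size" is a single number, the task loss `(size - preferred)^2` is a hypothetical stand-in that prefers a larger model, and the inner minimization is solved in closed form instead of by gradient steps. All names and constants are assumptions.

```python
def minimize_over_size(lam, mu, target, preferred):
    """Closed-form minimizer over s of
    (s - preferred)^2 + lam * (s - target) + (mu / 2) * (s - target)^2.
    """
    return (2.0 * preferred - lam + mu * target) / (2.0 + mu)


def prune_to_target(target, preferred, mu=2.0, steps=20):
    """Alternate the inner minimization with a multiplier update."""
    lam = 0.0
    size = preferred
    for _ in range(steps):
        size = minimize_over_size(lam, mu, target, preferred)
        lam += mu * (size - target)  # raise the price while size exceeds target
    return size, lam


# A quadratic penalty alone (multiplier fixed at zero) stops short of the target.
penalty_only = minimize_over_size(lam=0.0, mu=2.0, target=5.0, preferred=10.0)

# With multiplier updates the constraint is met almost exactly.
size, lam = prune_to_target(target=5.0, preferred=10.0)

print("penalty only:", penalty_only)
print("augmented Lagrangian:", round(size, 4), "multiplier:", round(lam, 4))
```

The fixed penalty settles at a compromise between the task loss and the size constraint, whereas the multiplier keeps growing until the size matches the target, which is the property that makes an exact budget achievable.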

Numerical Results and Performance

Empirically, FLOP outperforms unstructured and other structured pruning techniques on several benchmarks, including WikiText-103 and enwik8. Notably, the authors report 50% compression with only a small degradation in perplexity, 0.8 points, which supports the effectiveness of the proposed structured pruning method.

The results also show FLOP's adaptability: it learns different embedding dimensions for different word clusters, allocating parameters where they are most useful. The method also carries over to fine-tuning, where a pruned BERT model retains most of its performance on GLUE benchmarks despite a significant reduction in parameter count.
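A rough parameter count shows why per-cluster embedding dimensions matter. The numbers below are hypothetical and are not taken from the paper: three word clusters of assumed sizes, each with an embedding of width `r` followed by an `r x d_model` projection, compared against using the full width for every cluster. The function name `embedding_params` is likewise an illustration.

```python
def embedding_params(clusters, d_model):
    """Count parameters when each cluster of n_words words has an embedding
    of width r plus an (r x d_model) projection back to the model dimension.
    """
    return sum(n_words * r + r * d_model for n_words, r in clusters)


d_model = 512  # assumed model dimension

# (number of words, embedding width) per cluster, most frequent words first.
uniform = [(20_000, 512), (40_000, 512), (200_000, 512)]
adaptive = [(20_000, 512), (40_000, 128), (200_000, 32)]

uniform_count = embedding_params(uniform, d_model)
adaptive_count = embedding_params(adaptive, d_model)

print("uniform width:", uniform_count)
print("per-cluster width:", adaptive_count)
print("fraction kept:", round(adaptive_count / uniform_count, 3))
```

In this toy setting most of the savings come from the large cluster of rare words, which is where a narrow embedding is least likely to hurt.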

Implications and Future Directions

The implications of this research are both practical and theoretical. In practice, smaller models need less compute and storage, which makes it easier to deploy LLMs in resource-constrained environments without severely compromising accuracy. Theoretically, the work invites further study of the trade-off between model size and performance, and it challenges the assumption that very large models are required for high performance.

The method also invites combination with other model optimization strategies such as knowledge distillation and quantization. Future work could extend it to other neural architectures and reduce training cost, broadening the settings in which compressed LLMs can be used.