Learning De-biased Representations with Biased Representations

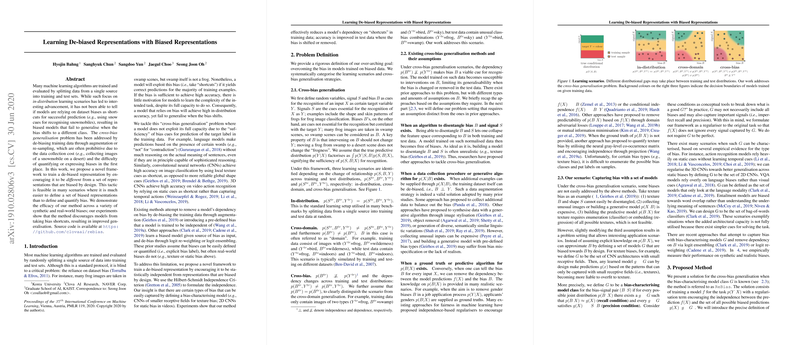

The paper "Learning De-biased Representations with Biased Representations" addresses model bias in machine learning by proposing a framework, ReBias, that improves cross-bias generalization. The central observation is that when training and test sets are split from a single source, models can predict successfully by exploiting bias-related shortcuts (e.g., using snow cues to identify snowmobiles), and they then fail to generalize when the bias changes. Rather than relying on costly dataset augmentation or re-sampling (e.g., capturing images of snowmobiles in deserts), the authors propose a method that uses biased representations to learn de-biased ones.

Problem Definition

The paper first distinguishes the training scenarios under which bias can be addressed. The authors introduce the cross-bias generalization problem and review the conventional methods for it. They argue that these methods are of limited applicability, either because they require the bias to be defined explicitly or because of prohibitive data collection and computational costs. Their proposal is to define a class of models that is biased by design and then train a model that is encouraged to be statistically different from that class. This approach is more feasible when a bias is easier to express through model design than through an explicit definition in the data.

Proposed Method

The ReBias framework regularizes a model by encouraging its representation to be statistically independent of the representations of a set of deliberately biased models, with dependence measured by the Hilbert-Schmidt Independence Criterion (HSIC). The regularization takes the form of a minimax optimization: the target model minimizes its dependence on the biased models, while the biased models are updated to maximize it. This avoids the need for augmentation or an explicit definition of the bias. The authors justify the method theoretically by arguing that HSIC regularization encourages the models to learn different types of invariances, which promotes de-biased representation learning.
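To make the HSIC term concrete, the following is a minimal sketch of an empirical HSIC estimate between two batches of representations. It assumes the simple biased estimator tr(KHLH)/(n-1)^2 with an RBF kernel and a fixed bandwidth; the estimator, kernel, and bandwidth actually used in the paper are not stated in this summary and may differ. The names `rbf_kernel`, `hsic_biased`, and `sigma` are illustrative.

```python
import numpy as np


def rbf_kernel(x, sigma=1.0):
    """Gram matrix of an RBF kernel for the rows of x (shape: n x d)."""
    sq_norms = np.sum(x ** 2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * (x @ x.T)
    sq_dists = np.maximum(sq_dists, 0.0)  # guard against tiny negative values
    return np.exp(-sq_dists / (2.0 * sigma ** 2))


def hsic_biased(u, v, sigma=1.0):
    """Biased empirical HSIC between paired samples u and v (n rows each).

    Values near zero suggest independence; larger values suggest dependence.
    The fixed bandwidth sigma is an assumption made for this sketch.
    """
    n = u.shape[0]
    K = rbf_kernel(u, sigma)
    L = rbf_kernel(v, sigma)
    H = np.eye(n) - np.ones((n, n)) / n  # centering matrix
    return float(np.trace(K @ H @ L @ H)) / (n - 1) ** 2
```

In a training loop, a term of this kind computed on the target model's features and a biased model's features would be added to the target model's task loss with some weight, and used with the opposite sign when updating the biased model. That wiring is a reading of the minimax description above, not a transcription of the authors' code.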

Experimental Validation

The authors validate ReBias in three settings: Biased MNIST, ImageNet, and video action recognition. These experiments illustrate the efficacy of the proposed method:

- Biased MNIST: Here, the authors show how color as a bias can distort digit recognition. The results consistently indicate that ReBias delivers robust generalization even as the correlation of bias in training datasets is varied. The evidence suggests that ReBias leads to marked improvement in performance under an unbiased metric.

- ImageNet: For realistic texture biases in images, ReBias improves model performance and generalization. Using proxy measures such as texture clustering, the paper evaluates models beyond the conventional, biased evaluation protocol and reports better accuracies for ReBias.

- Action Recognition: Conventional datasets such as Kinetics contain static biases. By evaluating on Mimetics, the authors show that ReBias generalizes better when actions must be recognized without the help of static cues.
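The Biased MNIST setup in the first bullet can be illustrated with a small sketch of how a color bias might be correlated with the label, and of how an unbiased metric differs from plain accuracy. Everything here is an assumption made for illustration: each class is tied to one color index, a sample receives its class color with probability `rho` and a different color otherwise, and the unbiased metric is the mean accuracy over observed (label, color) cells. The function names are hypothetical and the paper's exact protocol may differ.

```python
import random
from collections import defaultdict


def assign_bias(labels, num_classes, rho, rng):
    """Give each sample its class color with probability rho, else another color."""
    biases = []
    for y in labels:
        if rng.random() < rho:
            biases.append(y)
        else:
            others = [c for c in range(num_classes) if c != y]
            biases.append(rng.choice(others))
    return biases


def unbiased_accuracy(labels, biases, preds):
    """Average the accuracy of each observed (label, bias) cell.

    Every cell counts equally, so the frequent bias-aligned cells
    cannot dominate the score. Cells with no samples are skipped.
    """
    hits = defaultdict(int)
    counts = defaultdict(int)
    for y, b, p in zip(labels, biases, preds):
        counts[(y, b)] += 1
        hits[(y, b)] += int(p == y)
    return sum(hits[k] / counts[k] for k in counts) / len(counts)
```

A predictor that simply reads off the color (`preds = biases`) scores roughly `rho` in plain accuracy on such data, but far lower under the cell-averaged metric, which is the gap the unbiased evaluation is meant to expose.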

Implications and Future Directions

The primary implication of this research is a shift in focus towards architectural design to address the bias problem, rather than dependence on extensively labeled or bespoke datasets. This shift holds substantial promise for enabling models to generalize across the varied scenarios encountered in practice. The potential of ReBias extends beyond the domains tested and merits exploration in natural language understanding and cross-modal tasks, such as visual question answering.

The work opens room for further theoretical exploration of HSIC in non-linear systems and its role in diverse applications. Additionally, improvements in optimization techniques in ReBias learning emerge as a crucial line of inquiry to enhance its efficiency and applicability.

In conclusion, this paper provides a structured methodology for tackling dataset bias, encouraging models to harness their inherent capacity to learn unbiased representations without relying on additional data engineering or augmentation strategies. This work stands to significantly influence areas necessitating robust generalization across real-world bias variabilities.