Federated Learning in Mobile Edge Networks: A Comprehensive Survey

The paper under review, titled "Federated Learning in Mobile Edge Networks: A Comprehensive Survey," surveys Federated Learning (FL), a paradigm in which devices collaboratively train a shared model while keeping their raw data local, as it applies to mobile edge networks. This summary is aimed at experienced researchers who want technical detail on FL and its practical implications.

Introduction

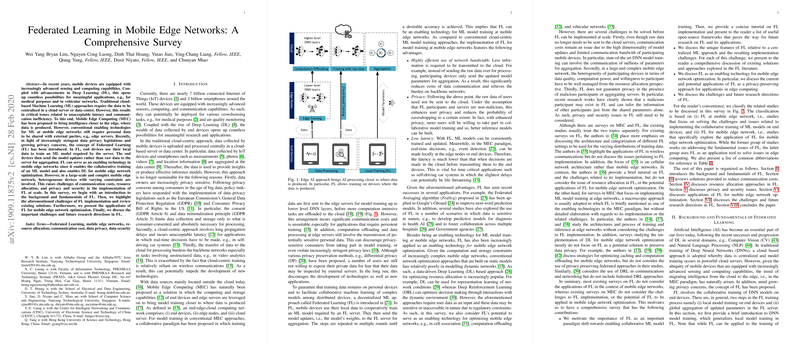

The survey begins by emphasizing the growing computational capabilities of mobile devices and the corresponding advances in Deep Learning (DL). Traditional centralized Machine Learning (ML) approaches, which require raw data to be sent to a remote server, are criticized for unacceptable latency and communication inefficiency. Mobile Edge Computing (MEC), which moves computation to servers at the network edge, is presented as a partial remedy, but it still requires users to share personal data with external servers. In light of stringent data privacy regulations and growing user concerns, the survey introduces FL: devices train the model locally and send only model updates to a server for aggregation, so raw data never leaves the device. FL nevertheless raises its own challenges, including communication cost, heterogeneous device constraints, and privacy and security.

Communication Cost

The paper explores various strategies for reducing communication costs in FL, a fundamental challenge given the high dimensionality of model updates:

- Edge and End Computation: One strategy is to perform more computation on each device so that fewer communication rounds are needed. For instance, the FedAvg algorithm has each participant run several local SGD epochs over minibatches of its data before uploading, and the server averages the returned models weighted by local dataset size; this substantially reduces the number of rounds needed to reach a target accuracy.

- Model Compression: Structured and sketched updates reduce the size of each upload. Structured updates restrict the update to a compact form, such as a low-rank matrix or a random mask, while sketched updates compute the full update and then compress it, for example by subsampling and quantization. Both yield substantial communication savings, possibly at some cost in model accuracy.

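- Importance-based Updating: The Communication-Mitigated Federated Learning (CMFL) algorithm uploads a local update only if it is relevant to the global update, judged by how many of its parameters share the sign of the global update. Skipping irrelevant updates reduces communication overhead and can also improve model accuracy, since outlying updates no longer pull the aggregate away from the global trend.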
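To make the FedAvg description concrete, here is a minimal sketch in Python. It is not the original authors' implementation: it assumes a toy linear model y ≈ w·x + b, assumes every client participates in every round, and the names `fedavg` and `local_sgd` and all hyperparameter values are hypothetical. Each round, every client runs `epochs` passes of minibatch SGD starting from the current global model, and the server averages the returned models weighted by local dataset size.

```python
import random


def local_sgd(w, b, data, epochs, lr, batch_size, rng):
    """Minibatch SGD on squared loss for the toy model y ≈ w * x + b."""
    data = list(data)  # copy so shuffling does not touch the caller's list
    for _ in range(epochs):
        rng.shuffle(data)
        for i in range(0, len(data), batch_size):
            batch = data[i:i + batch_size]
            # Gradients of mean((w*x + b - y)^2) over the minibatch
            gw = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            gb = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            w, b = w - lr * gw, b - lr * gb
    return w, b


def fedavg(clients, rounds=20, epochs=5, lr=0.05, batch_size=10, seed=0):
    """Each round: broadcast (w, b), train locally on every client, then
    average the returned models weighted by local dataset size n_k."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    n_total = sum(len(d) for d in clients)
    for _ in range(rounds):
        local = [(local_sgd(w, b, d, epochs, lr, batch_size, rng), len(d))
                 for d in clients]
        w = sum(wk * n for (wk, _), n in local) / n_total
        b = sum(bk * n for (_, bk), n in local) / n_total
    return w, b


# Usage: three clients with different amounts of data from y = 3x + 1
data_rng = random.Random(42)
clients = []
for n in (30, 50, 70):
    xs = [data_rng.uniform(-1, 1) for _ in range(n)]
    clients.append([(x, 3 * x + 1) for x in xs])

w, b = fedavg(clients)
print(f"w = {w:.3f}, b = {b:.3f}")
```

Raising `epochs` shifts work from the network to the devices: each round costs more locally, but fewer rounds, and therefore fewer uploads, are needed. With non-IID client data, very large values can let local models drift apart, so the local workload is usually tuned rather than maximized.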
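The sketched-update idea can be illustrated, under simplifying assumptions, as random-mask subsampling followed by probabilistic 1-bit quantization. Both steps are randomized so that the decoded update equals the original in expectation. The function names are hypothetical, and the sketch keeps coordinate indices explicitly for clarity; a real system could instead regenerate the random mask on the server from a shared seed.

```python
import random


def subsample(update, keep_prob, rng):
    """Random-mask sparsification: keep each coordinate with probability
    keep_prob and rescale it by 1 / keep_prob so the result stays unbiased."""
    return {i: v / keep_prob for i, v in enumerate(update) if rng.random() < keep_prob}


def quantize_1bit(kept, rng):
    """Probabilistic binarization: send each kept value as h_min or h_max,
    choosing h_max with probability (v - h_min) / (h_max - h_min) so that
    the expected decoded value equals v."""
    if not kept:
        return {}, 0.0, 0.0
    lo, hi = min(kept.values()), max(kept.values())
    bits = {}
    for i, v in kept.items():
        p_hi = 0.0 if hi == lo else (v - lo) / (hi - lo)
        bits[i] = rng.random() < p_hi  # True -> hi, False -> lo
    return bits, lo, hi


def decode(bits, lo, hi, dim):
    """Server side: rebuild a dense update; dropped coordinates become 0."""
    dense = [0.0] * dim
    for i, bit in bits.items():
        dense[i] = hi if bit else lo
    return dense


# Usage: one 8-dimensional update -> about 4 one-bit values plus two floats
rng = random.Random(7)
update = [0.5, -0.2, 0.1, 0.8, -0.6, 0.3, 0.0, 0.4]
bits, lo, hi = quantize_1bit(subsample(update, 0.5, rng), rng)
print(decode(bits, lo, hi, len(update)))
```

With `keep_prob = 0.5`, each upload carries roughly half the coordinates as single bits plus two floats (`lo`, `hi`) instead of one float per coordinate; the price is extra variance in the update, which is where the potential accuracy loss comes from.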
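CMFL's relevance check can be sketched as a sign-agreement test. In this illustration, which is a simplification under stated assumptions rather than the CMFL authors' code, a client compares its local update with the previous round's global update and uploads only if the fraction of coordinates with matching signs reaches a threshold; the function names and the 0.8 threshold are hypothetical.

```python
def sign(v):
    return (v > 0) - (v < 0)


def relevance(local_update, global_update):
    """Fraction of coordinates whose sign agrees with the previous round's
    global update, used here as an estimate of the current global trend."""
    same = sum(1 for loc, glob in zip(local_update, global_update)
               if sign(loc) == sign(glob))
    return same / len(local_update)


def should_upload(local_update, global_update, threshold=0.8):
    """Upload only updates that are sufficiently aligned with the global trend.
    The 0.8 default is a hypothetical choice, not a value from the CMFL paper."""
    return relevance(local_update, global_update) >= threshold


# Usage: the previous global update and two candidate local updates
prev_global = [0.2, -0.1, 0.05, -0.3, 0.4]
aligned = [0.3, -0.2, 0.01, -0.1, 0.2]    # all five signs agree
divergent = [-0.3, 0.2, 0.01, 0.1, 0.2]   # only two of five signs agree
print(should_upload(aligned, prev_global), should_upload(divergent, prev_global))  # True False
```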

Resource Allocation

FL involves heterogeneous devices with varying resource constraints, necessitating intelligent resource allocation strategies:

- Participant Selection: FedCS selects participants according to their reported computation and communication resources so that their updates arrive before a per-round deadline, which limits the slowdown caused by stragglers. Hybrid-FL extends participant selection to account for how data is distributed across participants, addressing non-IID data.

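- Joint Radio and Computation Resource Management: Over-the-air computation exploits the superposition property of the wireless multiple-access channel. Participants transmit their updates simultaneously in analog form, so the channel itself delivers their sum to the server, which significantly reduces uplink latency compared with sending each update in a separate channel slot.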

- Adaptive Aggregation: To cope with dynamic resource constraints, adaptive aggregation schemes vary the global aggregation frequency, that is, how many local updates are performed between aggregations, to make the best use of the available resources while maintaining model performance.

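- Incentive Mechanism: Participating in FL consumes a device's energy, computation, and bandwidth, so incentive mechanisms are needed. Stackelberg game formulations model the server as a leader that sets rewards and participants as followers that decide how much to contribute, while contract theory lets the server offer contracts designed for participant types it cannot observe directly, motivating high-quality data contributions despite information asymmetry.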
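The deadline-driven selection behind FedCS can be illustrated with a simplified greedy heuristic. The sketch assumes the server already knows each client's estimated local training time and upload time, that all selected clients start training simultaneously, and that uploads are scheduled one after another; the function names, timing model, and numbers are hypothetical, and the published protocol differs in its details.

```python
def round_time(order, t_compute, t_upload):
    """Finish time of a round if all selected clients start computing at t = 0
    in parallel and then upload one at a time in the given order."""
    t = 0.0
    for k in order:
        t = max(t, t_compute[k]) + t_upload[k]
    return t


def select_clients(t_compute, t_upload, deadline):
    """Greedy deadline-aware selection: repeatedly take the remaining client
    whose addition lengthens the round the least, and keep it only if the
    round still finishes within the deadline."""
    remaining = set(range(len(t_compute)))
    selected = []
    while remaining:
        best = min(remaining,
                   key=lambda k: (round_time(selected + [k], t_compute, t_upload), k))
        remaining.remove(best)
        if round_time(selected + [best], t_compute, t_upload) <= deadline:
            selected.append(best)
    return selected


# Usage: client 3 is a straggler (slow local computation)
t_compute = [1.0, 5.0, 2.0, 8.0]   # estimated local training time (s)
t_upload = [1.0, 1.0, 2.0, 1.0]    # estimated upload time (s)
chosen = select_clients(t_compute, t_upload, deadline=6.0)
print(chosen, round_time(chosen, t_compute, t_upload))  # [0, 2, 1] 6.0
```

Clients that could only finish after the deadline, such as the slow client 3 here, are left out of the round, which is how deadline-aware selection keeps stragglers from holding up aggregation.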
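Over-the-air aggregation can be simulated in a few lines. This is a toy model under explicit assumptions: real-valued channel gains known at each device, perfect synchronization, no transmit-power limit other than silencing devices in deep fade (truncated channel inversion), and additive Gaussian receiver noise; the function name and the `min_gain` threshold are hypothetical.

```python
import random


def over_the_air_average(updates, gains, noise_std, rng, min_gain=0.2):
    """Simulate analog over-the-air averaging of equal-length update vectors.

    Device k pre-scales its signal by 1 / h_k (channel inversion), so after the
    channel multiplies by h_k all contributions add up to the plain sum.
    Devices in a deep fade (|h_k| < min_gain) stay silent (truncated inversion).
    """
    active = [k for k, h in enumerate(gains) if abs(h) >= min_gain]
    if not active:
        return None, active
    estimate = []
    for j in range(len(updates[0])):
        # One shared channel use per coordinate, however many devices transmit
        received = sum(gains[k] * (updates[k][j] / gains[k]) for k in active)
        received += rng.gauss(0.0, noise_std)  # receiver noise
        estimate.append(received / len(active))
    return estimate, active


# Usage: device 2 is in a deep fade and is excluded from this round
updates = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
gains = [1.0, 0.5, 0.1]
avg, active = over_the_air_average(updates, gains, noise_std=0.01, rng=random.Random(3))
print(active, [round(v, 2) for v in avg])
```

Because all active devices share the same channel use, the uplink time for aggregation does not grow with the number of participants, whereas orthogonal schemes need one slot per device. The cost is that the server receives only a noisy sum, never the individual updates.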
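A Stackelberg incentive scheme can be illustrated with a standard proportional-share formulation: the server (leader) announces a total reward R, and each participant i (follower) chooses a contribution x_i to maximize R·x_i / Σ_j x_j − c_i·x_i, where c_i is its unit cost. Setting each follower's marginal utility to zero gives X = (n − 1)R / Σ_{j∈S} c_j and x_i = X(1 − (n − 1)c_i / Σ_{j∈S} c_j) for the participating set S with n = |S|; participants whose cost is too high contribute nothing. This is a generic textbook model rather than the specific mechanism of any paper the survey reviews, the function name is hypothetical, and the leader's choice of R is omitted.

```python
def follower_equilibrium(costs, reward):
    """Equilibrium contributions for a fixed total reward R when participant i
    earns R * x_i / sum(x) and pays costs[i] per unit of contribution.
    Assumes at least two participants."""
    order = sorted(range(len(costs)), key=lambda i: costs[i])
    active = order[:2]  # the two cheapest participants always join
    for i in order[2:]:
        # Join only if c_i < (sum of active costs + c_i) / (|S ∪ {i}| - 1)
        if costs[i] < (sum(costs[j] for j in active) + costs[i]) / len(active):
            active.append(i)
        else:
            break
    n, c_sum = len(active), sum(costs[j] for j in active)
    total = (n - 1) * reward / c_sum           # X = (n - 1) R / sum_{j in S} c_j
    x = [0.0] * len(costs)
    for i in active:
        x[i] = total * (1 - (n - 1) * costs[i] / c_sum)
    return x


# Usage: the high-cost third participant finds it unprofitable to contribute
contrib = follower_equilibrium([1.0, 2.0, 10.0], reward=10.0)
print([round(v, 3) for v in contrib])  # [2.222, 1.111, 0.0]
```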

Privacy and Security

The paper outlines potential vulnerabilities in FL and reviews the countermeasures proposed for them:

- Privacy: Even though raw data stays on devices, model updates can still leak sensitive information about the training data. Mitigation strategies include Differential Privacy (DP) and collaborative training schemes in which participants share only a selected subset of their model parameters. For instance, differentially private stochastic gradient descent clips each per-example gradient and adds calibrated noise, bounding how much any single training example can influence what is shared.

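- Security: The survey discusses the robustness of FL systems against adversarial attacks such as data poisoning and model poisoning. FoolsGold counters sybil-based poisoning by lowering the aggregation weight of participants whose updates are suspiciously similar to one another, and blockchain-based frameworks verify exchanged updates on a distributed ledger; both help identify and isolate malicious participants.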
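The DP-SGD mechanism mentioned under Privacy can be sketched as follows, assuming per-example gradients are already available: each gradient is clipped to L2 norm C, Gaussian noise with standard deviation σ·C is added to the sum, and the noisy average is used for the update. Function names and parameter values are hypothetical, and the sketch omits the privacy accounting that converts σ, the sampling rate, and the number of steps into an (ε, δ) guarantee.

```python
import math
import random


def clip(grad, max_norm):
    """Scale a gradient down so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in grad))
    return [v * max_norm / norm for v in grad] if norm > max_norm else list(grad)


def dp_sgd_step(params, per_example_grads, lr, max_norm, noise_multiplier, rng):
    """One DP-SGD step: clip each example's gradient to norm C = max_norm, add
    Gaussian noise N(0, (sigma * C)^2) to the sum, then average and descend."""
    clipped = [clip(g, max_norm) for g in per_example_grads]
    batch = len(clipped)
    noisy_avg = []
    for j in range(len(params)):
        total = sum(g[j] for g in clipped) + rng.gauss(0.0, noise_multiplier * max_norm)
        noisy_avg.append(total / batch)
    return [p - lr * g for p, g in zip(params, noisy_avg)]


# Usage: the first example's large gradient is clipped before noise is added
params = [0.0, 0.0]
grads = [[3.0, 4.0], [0.3, 0.4]]
new_params = dp_sgd_step(params, grads, lr=1.0, max_norm=1.0,
                         noise_multiplier=1.1, rng=random.Random(0))
print(new_params)
```

Clipping caps any single example's contribution at C, which is what lets the noise scale σ·C translate into a privacy bound; without clipping, one outlier gradient could dominate the update regardless of the noise.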
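The intuition behind FoolsGold can be illustrated with a simplified sketch: sybils that push the same poisoned objective produce unusually similar update histories, so each client is down-weighted according to its highest cosine similarity to any other client. This omits the pardoning and logit-scaling steps of the published algorithm and uses a plain weighted average for aggregation; the function names and data are hypothetical, and at least two clients are assumed.

```python
import math


def cosine(a, b):
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0 or nb == 0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def similarity_weights(histories):
    """histories[k] is client k's accumulated update over past rounds.
    A client's weight is 1 minus its highest cosine similarity to any other
    client, clipped to [0, 1] and rescaled so the largest weight is 1."""
    n = len(histories)
    weights = []
    for i in range(n):
        max_sim = max(cosine(histories[i], histories[j]) for j in range(n) if j != i)
        weights.append(min(1.0, max(0.0, 1.0 - max_sim)))
    top = max(weights)
    return [w / top for w in weights] if top > 0 else weights


def weighted_aggregate(updates, weights):
    """Weighted average of this round's updates (all zeros if every weight is 0)."""
    total = sum(weights)
    dim = len(updates[0])
    if total == 0:
        return [0.0] * dim
    return [sum(w * u[j] for w, u in zip(weights, updates)) / total for j in range(dim)]


# Usage: clients 3 and 4 are sybils pushing the same poisoned direction
# (here the accumulated histories double as this round's updates)
histories = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0],
             [-1.0, -1.0, 0.0], [-1.0, -1.0, 0.0]]
weights = similarity_weights(histories)
print([round(w, 3) for w in weights])  # [1.0, 1.0, 1.0, 0.0, 0.0]
print([round(v, 3) for v in weighted_aggregate(histories, weights)])
```

Honest clients with diverse data keep full weight, while the two identical sybils receive (numerically) zero weight, so the poisoned direction barely affects the aggregate.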

Applications in Mobile Edge Networks

Beyond improving FL itself, the paper discusses applications of FL for optimizing mobile edge networks:

- Cyberattack Detection: FL is used for collaborative intrusion detection in IoT networks, ensuring data privacy while improving detection accuracy.

- Edge Caching and Computation Offloading: Deep Reinforcement Learning (DRL) combined with FL optimizes caching and offloading decisions, improving how efficiently edge resources are used.

- Base Station Association: FL is used to optimize user-to-base-station association in dense networks, reducing interference while keeping user data on the devices.

- Vehicular Networks: FL facilitates collaborative learning in vehicular networks for applications like traffic management and energy demand forecasting without compromising user privacy.

Challenges and Future Research Directions

The paper concludes with several future research directions, including handling dropped participants, strengthening privacy protection, dealing with unlabeled data, and managing interference among mobile devices. It also suggests exploring cooperative mobile crowd ML schemes and combining communication-reduction algorithms.

Conclusion

Overall, this comprehensive survey provides valuable insight into both the potential and the challenges of FL in mobile edge networks. It underscores the need for continued research on the open implementation issues, so that collaborative learning can advance while preserving privacy and using resources efficiently.