Joey NMT: A Minimalist NMT Toolkit for Novices

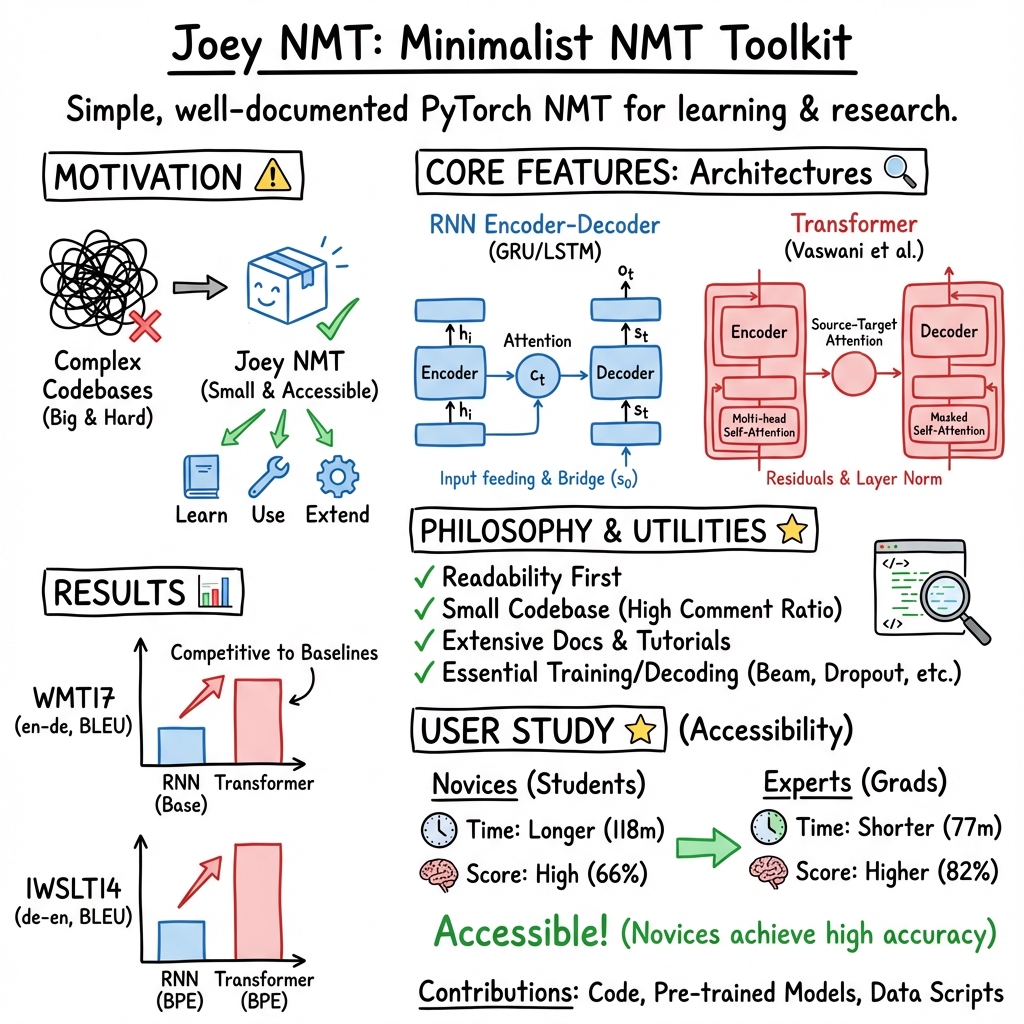

Abstract: We present Joey NMT, a minimalist neural machine translation toolkit based on PyTorch that is specifically designed for novices. Joey NMT provides many popular NMT features in a small and simple code base, so that novices can easily and quickly learn to use it and adapt it to their needs. Despite its focus on simplicity, Joey NMT supports classic architectures (RNNs, transformers), fast beam search, weight tying, and more, and achieves performance comparable to more complex toolkits on standard benchmarks. We evaluate the accessibility of our toolkit in a user study in which novices (with general knowledge of PyTorch and NMT) and experts work through a self-contained Joey NMT tutorial, showing that novices perform almost as well as experts in a subsequent code quiz. Joey NMT is available at https://github.com/joeynmt/joeynmt.

Explain it Like I'm 14

What This Paper Is About

This paper introduces Joey NMT, a simple, beginner-friendly toolkit that helps computers translate from one language to another using neural networks. It’s built to be small, easy to read, and easy to change, so students and newcomers can learn how machine translation works without getting lost in a huge codebase. Even though it’s simple, it can still reach translation quality similar to bigger, more complex systems.

The Main Questions the Paper Asks

- Can we build a clear, minimal translation toolkit that beginners can actually understand and use?

- Will this small toolkit still perform well on standard translation tests compared to popular, large toolkits?

- Do beginners learn and understand the code well after a short tutorial, and how do they compare to experts?

How the Researchers Approached It

A quick idea of what NMT is

Neural Machine Translation (NMT) is like training a very smart “translator robot.” It reads a sentence in one language and writes it in another, learning from lots of example translations.

- RNNs (Recurrent Neural Networks): Think of a person reading a sentence word by word, remembering what came before.

- Transformers: Imagine a reader who can look at the whole sentence at once and pay attention to the most important words, even if they’re far apart. Transformers do this with “attention,” which is like a spotlight over words that matter for the current translation step.
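To make the "spotlight" idea concrete, here is a minimal sketch of scaled dot-product attention, the form of attention used in Transformers, in plain Python. It is not Joey NMT's code: the function names `softmax` and `attend` and all numbers are made up for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    # One score per source word: how well does its key match the query?
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The context vector is the weighted average of the value vectors.
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context

# Toy source sentence of three words, each with a 2-d key and value (made-up numbers).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [0.0, 2.0]  # the current translation step "asks" about the second dimension

weights, context = attend(query, keys, values)
print(weights)  # the first word gets the smallest weight
print(context)
```

The weights play the role of the spotlight: words whose keys match the query get more weight, and the output is a blend of their values.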

What Joey NMT includes

Joey NMT is written in PyTorch and focuses on the essentials:

- Popular model types: RNNs and Transformers

- Attention (the “spotlight” that helps focus on the right words)

- Helpful training tools like dropout, learning-rate scheduling, and early stopping

- Decoding methods like beam search (trying several promising sentence continuations to pick the best one)

- Clear documentation, comments, and small, readable code
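The beam search item in the list above can be illustrated with a small, self-contained sketch. It assumes a hypothetical toy "model" (`TOY_MODEL`) in which the next word depends only on the previous one. A real NMT decoder conditions on the source sentence and the whole prefix, and Joey NMT's own implementation differs.

```python
import math

# Hypothetical toy "model": next-token probabilities depend only on the last token.
TOY_MODEL = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.4, "</s>": 0.1},
    "a": {"cat": 0.9, "</s>": 0.1},
    "cat": {"</s>": 1.0},
    "dog": {"</s>": 1.0},
}

def beam_search(model, beam_size=2, max_len=5):
    """Keep the `beam_size` best partial translations at every step."""
    beams = [(0.0, ["<s>"])]  # (log-probability, tokens so far)
    finished = []
    for _ in range(max_len):
        # Extend every partial translation by every possible next token.
        candidates = []
        for score, tokens in beams:
            for token, prob in model[tokens[-1]].items():
                candidates.append((score + math.log(prob), tokens + [token]))
        candidates.sort(key=lambda c: c[0], reverse=True)
        # Keep only the best few; set aside those that reached end-of-sentence.
        beams = []
        for score, tokens in candidates[:beam_size]:
            if tokens[-1] == "</s>":
                finished.append((score, tokens))
            else:
                beams.append((score, tokens))
        if not beams:
            break
    # Fall back to unfinished beams if nothing reached "</s>" within max_len.
    return max(finished or beams, key=lambda c: c[0])

greedy_score, greedy_tokens = beam_search(TOY_MODEL, beam_size=1)
beam_score, beam_tokens = beam_search(TOY_MODEL, beam_size=2)
print(greedy_tokens, math.exp(greedy_score))
print(beam_tokens, math.exp(beam_score))
```

With `beam_size=1` the search is greedy and commits to "the" because it looks best at the first step. With `beam_size=2` it also keeps "a" alive and ends up with a more probable full sentence.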

The authors follow the “80/20 rule”: aim for about 80% of the translation quality of a larger toolkit with about 20% of its code size.

How they tested it

They ran three kinds of evaluations:

- Code simplicity check:

  - They measured lines of code and comments. Joey NMT has far fewer lines than big toolkits, but a higher comment-to-code ratio, making it easier to read.

- Translation benchmarks:

  - They trained Joey NMT on standard tests (like WMT17 and IWSLT14) and compared its scores to well-known toolkits. Quality was measured with BLEU, a common score that compares machine translations to human ones. Higher is better.

- User study (novices vs. experts):

  - Novices (undergraduate students with basic ML/PyTorch knowledge) and experts (graduate students with NMT experience) read a short tutorial and then took a code quiz.

  - They measured how long participants took and how many answers they got right.
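As a rough illustration of the code simplicity check above, the following sketch counts code lines and comment lines in a piece of Python source and computes their ratio. The counting rules here are an assumption (only whole-line `#` comments count as comments; docstrings and inline comments are not treated specially), and the paper's actual counting method may differ.

```python
def count_code_and_comments(source):
    """Count non-blank code lines and whole-line '#' comments in Python source text."""
    code = comments = 0
    for line in source.splitlines():
        stripped = line.strip()
        if not stripped:
            continue  # blank lines count as neither
        if stripped.startswith("#"):
            comments += 1
        else:
            code += 1
    return code, comments

# Made-up sample file contents.
sample = '''
# Add two numbers.
def add(a, b):
    # Plain addition, no type checks.
    return a + b
'''

code, comments = count_code_and_comments(sample)
ratio = comments / code
print(code, comments, ratio)
```

A higher ratio means more explanation per line of code, which is the property the summary credits Joey NMT with.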

The Main Findings and Why They Matter

- Simple but strong: Joey NMT’s translation quality is close to that of larger, more complex toolkits on standard tests. In plain terms: keeping the code small and simple costs little in translation quality.

- Easy to learn: The codebase is much smaller and better commented than most alternatives, which helps beginners understand how things work.

- Novices do well: In the user study, beginners took more time than experts to answer questions, but they scored only a bit lower overall. That means a newcomer can reach a solid understanding after a short, self-guided tutorial.

- Practical for students: The toolkit is designed to run with limited resources (like a single GPU), which is perfect for classroom projects or small research ideas.

What This Could Mean Going Forward

- Better learning and teaching: Teachers can use Joey NMT to help students understand translation models without overwhelming them.

- Faster prototyping: Beginners can more quickly try new ideas (like small changes to attention or training settings) without wrestling with massive codebases.

- More accessible research: Clear, minimal code lowers the barrier to entry, helping more people contribute to NMT research and applications.

Key terms (in simple words)

- Attention: A spotlight that helps the model focus on the most relevant words when translating.

- RNN: A model that reads sentences one word at a time, carrying memory forward.

- Transformer: A model that looks at all words at once and uses attention to find relationships.

- Beam search: A way to try several good translation options at each step and keep the best ones.

- BLEU score: A score that tells how close a machine translation is to a human translation (higher is better).
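To show what "how close" means for BLEU, here is a simplified, single-sentence sketch: it checks how many word groups (n-grams) of the machine translation also appear in the human reference, and penalizes translations that are too short. This is an assumption-laden teaching version (one reference, n-grams up to length 2, hypothetical function names). Standard BLEU is computed over a whole test set with n-grams up to length 4 and is usually reported on a 0 to 100 scale.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    """Count all word groups of length n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def modified_precision(hypothesis, reference, n):
    """Fraction of hypothesis n-grams that also occur in the reference (clipped counts)."""
    hyp_counts = ngrams(hypothesis, n)
    ref_counts = ngrams(reference, n)
    overlap = sum(min(count, ref_counts[gram]) for gram, count in hyp_counts.items())
    total = sum(hyp_counts.values())
    return overlap / total if total else 0.0

def simple_bleu(hypothesis, reference, max_n=2):
    """Geometric mean of n-gram precisions times a brevity penalty (range 0 to 1)."""
    precisions = [modified_precision(hypothesis, reference, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0
    geo_mean = math.exp(sum(math.log(p) for p in precisions) / max_n)
    # Brevity penalty: punish hypotheses shorter than the reference.
    if len(hypothesis) >= len(reference):
        brevity = 1.0
    else:
        brevity = math.exp(1 - len(reference) / len(hypothesis))
    return geo_mean * brevity

reference = "the cat sat on the mat".split()
hypothesis = "the cat sat on a mat".split()
print(simple_bleu(hypothesis, reference))  # one wrong word lowers the score below 1
print(simple_bleu(reference, reference))   # a perfect match scores 1.0
```

In the example, 5 of 6 single words and 3 of 5 word pairs match the reference, so the score is the geometric mean of 5/6 and 3/5.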

In short, Joey NMT shows that you don’t need a huge, complicated system to learn, teach, or even do strong machine translation: clear, well-designed tools can take you a long way.
