Analysis of Facebook FAIR's WMT19 News Translation Task Submission

The paper describes the methodology used by Facebook AI Research in its submission to the WMT19 shared news translation task, covering the English ↔ German and English ↔ Russian language pairs. Building on the techniques from the previous year's submission, the team focuses on improvements in data handling, model architecture, and translation quality assessment.

Key Methodologies

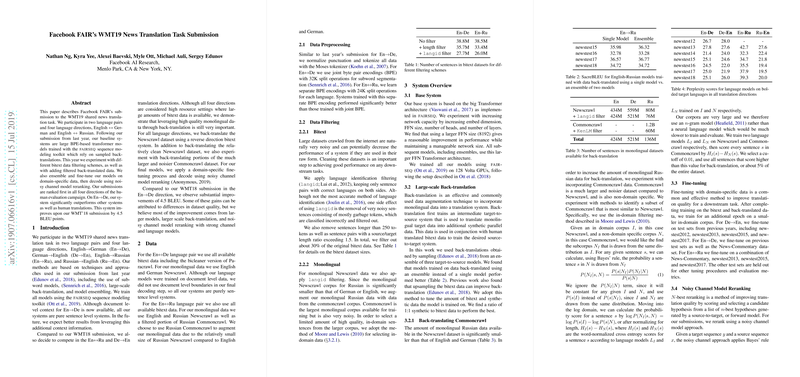

- Data Filtering and Preprocessing: The team applied strict data cleaning to handle the noise inherent in large datasets derived from online sources. For bitext data, language identification filtering (langid) removed sentences whose detected language did not match the expected one. Sentences longer than 250 tokens and sentence pairs with a severe source/target length mismatch were also filtered out. In total, about 30% of the original data was removed. The paper reports that this filtering produced a measurable improvement in translation quality.

- Back-Translation and Monolingual Data Augmentation: The team back-translated monolingual target-language data at large scale and used the resulting synthetic pairs alongside bitext to train the translation models. For the Russian-English pair, augmenting with Commoncrawl data was essential because Russian monolingual data is scarce relative to other languages.

- Model Architecture and Training: The baseline systems use the Transformer architecture with byte pair encoding (BPE) for subword segmentation. Notably, the team increased the dimension of the feed-forward network (FFN) layers, which improved model performance. The models were trained with the fairseq toolkit on substantial computing resources, including 128 Volta GPUs.

- Noisy Channel Model Reranking: The team used a noisy channel model for reranking, applying Bayes' rule to rescore the hypotheses in n-best lists generated by the forward models. The reranking, which relies on language models trained on large monolingual corpora, notably improved translation quality.

- Fine-tuning and Ensemble Approaches: The final models were fine-tuned on domain-specific data, which improved performance further. Ensembling, which combines the outputs of multiple high-capacity models, was crucial for achieving high BLEU scores.
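
The bitext filtering rules from the first bullet can be sketched in a few lines of Python. This is a minimal illustration and not the authors' pipeline. The 250-token cap comes from the text above. The length-ratio threshold of 1.5, the whitespace tokenization, the default language codes, and the `detect_lang` callable (a stand-in for whatever language identifier is used) are all assumptions made for the example.

```python
from typing import Callable, Iterable, Iterator, Optional, Tuple

MAX_TOKENS = 250        # length cap stated in the summary
MAX_LENGTH_RATIO = 1.5  # assumed value; the summary only says "severe mismatch"


def keep_pair(
    src: str,
    tgt: str,
    src_lang: str = "en",
    tgt_lang: str = "de",
    detect_lang: Optional[Callable[[str], str]] = None,  # hypothetical langid hook
) -> bool:
    """Return True if a sentence pair passes the length and language filters."""
    # Whitespace tokenization is a simplification for this sketch.
    src_len, tgt_len = len(src.split()), len(tgt.split())
    if src_len == 0 or tgt_len == 0:
        return False
    if src_len > MAX_TOKENS or tgt_len > MAX_TOKENS:
        return False
    # Symmetric ratio: the longer side divided by the shorter side.
    if max(src_len, tgt_len) / min(src_len, tgt_len) > MAX_LENGTH_RATIO:
        return False
    # Language identification runs only if a detector is supplied.
    if detect_lang is not None:
        if detect_lang(src) != src_lang or detect_lang(tgt) != tgt_lang:
            return False
    return True


def filter_bitext(
    pairs: Iterable[Tuple[str, str]], **kwargs
) -> Iterator[Tuple[str, str]]:
    """Yield only the sentence pairs that pass keep_pair."""
    for src, tgt in pairs:
        if keep_pair(src, tgt, **kwargs):
            yield src, tgt
```

Passing the language detector as a callable keeps the sketch independent of any particular langid library, so the length checks can be tried on their own.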

Results and Observations

The results reported in the paper show significant advances over the previous year's benchmarks across all four language directions. Notably, the English-to-German system improved by 4.5 BLEU points over the WMT'18 submission, a substantial gain in translation quality. The use of noisier monolingual sources for back-translation, such as Commoncrawl, was evaluated experimentally and found to improve output quality.

Implications and Future Directions

The methods and results presented have substantial implications for the field of machine translation. The effectiveness of data filtering and augmentation suggests a path to better model performance even in high-resource settings. The success of noisy channel model reranking also points to a promising area for further exploration, particularly in refining the language models used for rescoring, which could help close remaining performance gaps.
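
To make the noisy channel reranking idea concrete, here is a minimal sketch that assumes the three log-probabilities for each hypothesis have already been computed by separate models. By Bayes' rule, P(y|x) is proportional to P(x|y) P(y), so the channel model score log P(x|y) and the language model score log P(y) can be combined with the forward model score log P(y|x) in a weighted sum. The `Hypothesis` class, the function names, and the default weights are hypothetical and chosen for illustration. In practice such weights would be tuned on a validation set.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Hypothesis:
    text: str
    forward_logprob: float  # log P(y | x), from the forward translation model
    channel_logprob: float  # log P(x | y), from the reverse-direction model
    lm_logprob: float       # log P(y), from a target-side language model


def noisy_channel_score(
    hyp: Hypothesis, channel_weight: float = 1.0, lm_weight: float = 1.0
) -> float:
    """Weighted sum of forward, channel, and language model log-probabilities."""
    return (
        hyp.forward_logprob
        + channel_weight * hyp.channel_logprob
        + lm_weight * hyp.lm_logprob
    )


def rerank(
    nbest: List[Hypothesis], channel_weight: float = 1.0, lm_weight: float = 1.0
) -> List[Hypothesis]:
    """Return the n-best list sorted from highest to lowest combined score."""
    return sorted(
        nbest,
        key=lambda h: noisy_channel_score(h, channel_weight, lm_weight),
        reverse=True,
    )


# Toy scores: the forward model prefers "a", but the combined score prefers "b".
nbest = [
    Hypothesis("a", forward_logprob=-1.0, channel_logprob=-5.0, lm_logprob=-4.0),
    Hypothesis("b", forward_logprob=-1.5, channel_logprob=-2.0, lm_logprob=-3.0),
]
best = rerank(nbest)[0]
```

Setting both weights to zero recovers the forward model's own ranking, which gives a simple baseline for checking whether reranking helps.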

Looking forward, integrating document-level context remains a promising way to improve translation performance. The paper notes that the current models operate at the sentence level, and that using document context could further improve translation coherence and quality.

In conclusion, the paper documents substantial progress by Facebook FAIR in advancing machine translation systems, emphasizing data preprocessing, increased model capacity, and effective use of monolingual data through back-translation. The team achieved top rankings in the human evaluation for the WMT19 task, and the insights from this work are likely to guide future improvements in language model development and translation quality.