Overview of "Hello, It's GPT-2 - How Can I Help You? Towards the Use of Pretrained Language Models for Task-Oriented Dialogue Systems"

This paper, co-authored by Paweł Budzianowski and Ivan Vulić, investigates the application of large, pretrained generative language models, specifically those from the GPT family, to task-oriented dialogue systems. The work is motivated by the considerable data requirements and complexity traditionally associated with training task-oriented dialogue models, which must learn both general language skills and task-specific behavior from limited data.

Context and Motivation

The landscape of conversational AI systems is largely divided into task-oriented systems and open-domain systems. Task-oriented dialogue systems usually comprise several independently trained modules for tasks such as language understanding and response generation, optimized for specific tasks and domains. In contrast, open-domain conversational models often leverage substantial amounts of unannotated data, resulting in models that, while data-rich, can produce incoherent and unreliable outputs.

Neural end-to-end training of dialogue systems has so far yielded unpredictable results, while retrieval-based systems remain restricted to a fixed set of responses that may not fit the context. The authors therefore explore pretrained language models such as GPT and GPT-2, leveraging their potential for language understanding and generation in structured dialogue settings.

Methodology

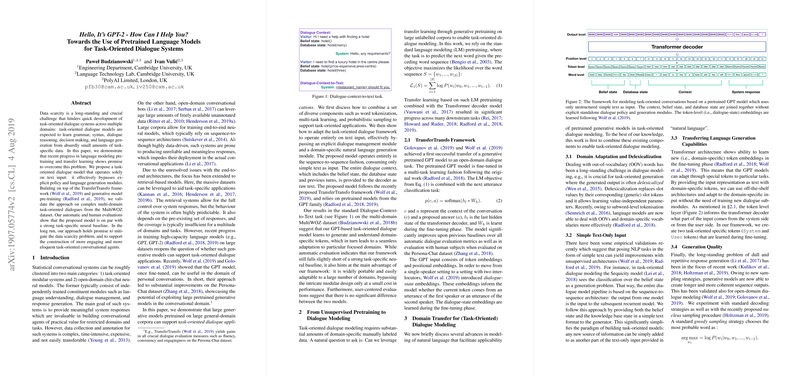

The authors present a model that views task-oriented dialogue as a text-only input problem, thereby eliminating the need for explicitly defined dialogue management or natural language generation modules. The model is built upon the TransferTransfo framework using GPT-style pretrained models. By encoding task-specific components, such as belief states and database queries, as natural language text, the framework simplifies the typical architecture of dialogue systems and promotes adaptability across different domains.
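To make the idea of encoding task-specific components as text concrete, the following is a minimal sketch of how a belief state and database results might be flattened into a single text input. The function names, the `belief:` and `database:` prefixes, and the separator characters are all hypothetical choices made for illustration; the exact serialization used in the paper is not described here.

```python
def belief_state_to_text(belief_state):
    """Flatten a nested {domain: {slot: value}} dict into one line of text.

    The "domain: slot = value" layout is an assumption for illustration only.
    """
    parts = []
    for domain in sorted(belief_state):
        slots = belief_state[domain]
        slot_text = ", ".join(
            f"{slot} = {value}" for slot, value in sorted(slots.items())
        )
        parts.append(f"{domain}: {slot_text}")
    return " ; ".join(parts)


def db_state_to_text(match_counts):
    """Describe how many database entries match the belief state, per domain."""
    return " ; ".join(
        f"{domain}: {count} match{'es' if count != 1 else ''}"
        for domain, count in sorted(match_counts.items())
    )


def build_model_input(belief_state, match_counts, history):
    """Concatenate belief state, database state, and dialogue history as text.

    `history` is a list of (speaker, utterance) pairs, oldest turn first.
    """
    segments = [
        "belief: " + belief_state_to_text(belief_state),
        "database: " + db_state_to_text(match_counts),
    ]
    segments.extend(f"{speaker}: {utterance}" for speaker, utterance in history)
    return "\n".join(segments)
```

For example, calling `build_model_input({"restaurant": {"food": "italian", "area": "centre"}}, {"restaurant": 3}, [("user", "I need an Italian place in the centre.")])` yields a three-line string that a generative language model could consume as ordinary text, with no dedicated dialogue management or generation module in between.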

This approach was evaluated using the MultiWOZ dataset, a comprehensive corpus that encompasses dialogues across multiple domains, allowing for assessment of cross-domain adaptability and generation quality.

Results

The paper reports that the proposed methodology achieves competitive performance on automatic evaluation metrics compared to task-specific baselines, albeit with a noted decrease in certain task completion metrics. The experimental results also highlight the effectiveness of sophisticated sampling strategies like nucleus sampling in enhancing the generative diversity of responses, which could sidestep the issue of dull and repetitive outputs typical when using greedy decoding strategies.
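The contrast between greedy decoding and nucleus (top-p) sampling can be sketched in a few lines. This is a toy illustration over a hand-written next-token distribution, not the decoding code used in the paper; the function names and the example vocabulary are assumptions.

```python
import random


def greedy(probs):
    """Always pick the single most probable token (deterministic)."""
    return max(probs, key=probs.get)


def nucleus_filter(probs, top_p):
    """Keep the smallest set of most-probable tokens whose cumulative
    probability reaches top_p, then renormalize their probabilities."""
    if not 0.0 < top_p <= 1.0:
        raise ValueError("top_p must be in (0, 1]")
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept = []
    cumulative = 0.0
    for token, p in ranked:
        kept.append((token, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return {token: p / total for token, p in kept}


def nucleus_sample(probs, top_p, rng=random):
    """Sample one token from the renormalized nucleus."""
    nucleus = nucleus_filter(probs, top_p)
    tokens = list(nucleus)
    weights = [nucleus[token] for token in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]
```

With a distribution such as `{"the": 0.5, "a": 0.3, "hotel": 0.15, "zebra": 0.05}` and `top_p=0.75`, greedy decoding always returns `"the"`, whereas nucleus sampling draws from `"the"` and `"a"` only. The unlikely tail is cut off, but the output still varies from call to call, which is the source of the added diversity.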

Further, in the human evaluation, user preferences were spread across the different models, suggesting rough parity in perceived response quality between the GPT-based systems and the traditional baselines. So although the automatic evaluations produced mixed results, the human evaluation supports the potential of pretrained generative models in real-world task-oriented applications.

Implications and Future Directions

The use of GPT-type models for task-oriented conversation systems introduces a paradigm shift in how these systems can be conceptualized and deployed. By framing dialogue management and generation as a text transformation problem, this research underscores the potential versatility of pretrained LLMs in handling domain-specific tasks without extensive architecture tuning.

Potential future directions highlighted by this research involve enhancing these models' domain adaptation capabilities, improving sampling techniques for natural language generation, and exploring ensemble methods to combine strengths of different models for optimized dialogue performance. The paper points towards a future where task-oriented conversational AI could benefit from the scalability and expressive capabilities of LLMs, thus enabling richer, more flexible user interactions with minimal domain-specific data constraints.

Overall, this research contributes to an ongoing discussion within the AI community on how best to exploit large-scale neural language models for structured, task-focused conversational AI.