Commonsense Transformers for Automatic Knowledge Graph Construction

This paper introduces COMET (COMmonsense Transformers), an approach for constructing commonsense knowledge bases (KBs) with large-scale pre-trained language models (LLMs). The work addresses automatic knowledge base construction for two prominent commonsense knowledge graphs, Atomic and ConceptNet. Its primary contribution is to use generative models to produce commonsense knowledge automatically, a departure from conventional KBs that store knowledge in canonical templates and from the extractive methods typically used to build them.

Introduction and Motivation

The paper rests on the premise that commonsense knowledge, unlike encyclopedic knowledge, is not easily captured by structured schemas. Commonsense KBs instead store knowledge as loosely structured, open-text descriptions. The authors therefore argue that developing generative models capable of producing such open-text descriptions is a key step toward automatic commonsense KB completion.

Methodology

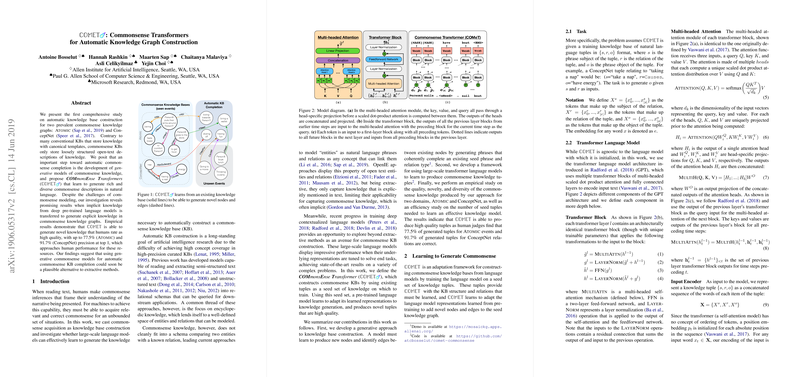

COMET uses the transformer architecture to generate novel and diverse commonsense tuples. Specifically, a generative language model is trained on seed sets of knowledge tuples from existing KBs and learns to adapt its pre-trained language representations to generate new, high-quality tuples of the form $\{s, r, o\}$, where $s$ is the subject phrase, $r$ is the relation, and $o$ is the object phrase. Given $s$ and $r$, the model generates $o$. COMET builds on the GPT architecture, whose multi-headed self-attention layers process the natural-language input.
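
To make the training setup concrete, the following is a minimal Python sketch of how a single tuple can be turned into one language-modeling example in which the subject and relation form the conditioning prefix and only the object tokens contribute to the loss. Whitespace tokenization, the `<relation>` token format, the helper names (`KnowledgeTuple`, `build_example`, `object_nll`), and the sample tuple are illustrative assumptions, not the paper's implementation; in practice a GPT-style model would supply the per-token log-probabilities.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class KnowledgeTuple:
    subject: str   # s: subject phrase
    relation: str  # r: relation
    obj: str       # o: object phrase


def build_example(t: KnowledgeTuple) -> Tuple[List[str], List[int]]:
    """Serialize {s, r, o} into one token sequence plus a loss mask.

    Hypothetical format: whitespace-split subject, a single relation token,
    then the object tokens. The mask is 1 only where an object token must be
    predicted, so the subject and relation act purely as context.
    """
    prefix = t.subject.split() + [f"<{t.relation}>"]
    target = t.obj.split()
    tokens = prefix + target
    mask = [0] * len(prefix) + [1] * len(target)
    return tokens, mask


def object_nll(token_log_probs: List[float], mask: List[int]) -> float:
    """Negative log-likelihood summed over the object positions only.

    token_log_probs[i] is assumed to be log P(tokens[i] | tokens[:i]) from
    some autoregressive language model.
    """
    if len(token_log_probs) != len(mask):
        raise ValueError("log-probabilities and mask must have equal length")
    return -sum(lp for lp, m in zip(token_log_probs, mask) if m)


# Made-up Atomic-style tuple, for illustration only.
example = KnowledgeTuple("PersonX goes to the mall", "xIntent", "to buy clothes")
tokens, mask = build_example(example)
print(tokens)
# ['PersonX', 'goes', 'to', 'the', 'mall', '<xIntent>', 'to', 'buy', 'clothes']
print(mask)
# [0, 0, 0, 0, 0, 0, 1, 1, 1]
# Stand-in "model" that assigns probability 0.5 to every token:
print(round(object_nll([math.log(0.5)] * len(tokens), mask), 4))  # 2.0794
```

Masking out the prefix means the model is never penalized for how it would have predicted the subject or relation; it is only trained to complete the object given that context.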

Experiments

The empirical evaluation of COMET was conducted on two datasets: Atomic and ConceptNet.

- Atomic Dataset: This dataset contains 877K tuples covering event-centered social commonsense knowledge. Evaluation combined automatic metrics (BLEU-2 and perplexity) with human evaluations of the quality and novelty of the generated tuples.

- ConceptNet Dataset: This dataset is a subset of ConceptNet 5 consisting of tuples derived from Open Mind Common Sense (OMCS) entries. Performance was assessed with perplexity, automatic scoring by a pre-trained Bilinear AVG model that rates the correctness of generated tuples, and human evaluations.
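
Two of the automatic metrics named above can be sketched in a few lines of Python. Perplexity is the exponential of the mean per-token negative log-likelihood; BLEU-2 combines clipped unigram and bigram precision (uniform weights) with a brevity penalty. This is a simplified, unsmoothed, sentence-level version written for illustration; it is an assumption that it matches the paper's evaluation scripts, and reported scores should come from a standard implementation.

```python
import math
from collections import Counter
from typing import List, Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """exp of the mean negative log-likelihood per token."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


def ngrams(tokens: Sequence[str], n: int) -> Counter:
    """Count all contiguous n-grams in a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def clipped_precision(candidate: Sequence[str], references: List[Sequence[str]], n: int) -> float:
    """Modified n-gram precision: each candidate n-gram counts at most as often
    as it appears in any single reference."""
    cand_counts = ngrams(candidate, n)
    total = sum(cand_counts.values())
    if total == 0:
        return 0.0
    max_ref: Counter = Counter()
    for ref in references:
        for gram, count in ngrams(ref, n).items():
            max_ref[gram] = max(max_ref[gram], count)
    overlap = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
    return overlap / total


def bleu2(candidate: List[str], references: List[List[str]]) -> float:
    """Sentence-level BLEU-2: brevity penalty times the geometric mean of
    unigram and bigram precision (no smoothing in this sketch)."""
    p1 = clipped_precision(candidate, references, 1)
    p2 = clipped_precision(candidate, references, 2)
    if p1 == 0 or p2 == 0:
        return 0.0
    c = len(candidate)
    # Effective reference length: the reference closest in length to the candidate.
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * math.exp(0.5 * math.log(p1) + 0.5 * math.log(p2))


print(round(perplexity([math.log(0.5)] * 4), 4))  # 2.0
refs = [["to", "buy", "clothes"], ["to", "go", "shopping"]]
print(round(bleu2("to buy new clothes".split(), refs), 4))  # 0.5
```

In the example, three of the four candidate unigrams and one of its three bigrams appear in a reference, so BLEU-2 is the square root of 0.75 × 1/3, i.e. 0.5; the candidate is longer than the references, so no brevity penalty applies.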

Results

Key findings from the experiments include:

- Performance: COMET outperformed the strongest baselines it was compared against. On Atomic, it achieved a 51% relative improvement in BLEU-2 and an 18% relative improvement in human evaluation scores.

- Human Evaluation: Human judges rated up to 77.5% of COMET's top-1 generations for Atomic and 91.7% for ConceptNet as correct, approaching human performance on these resources.

- Novelty: A substantial share of the generated tuples did not appear in the training data, indicating that the model can generate new knowledge rather than simply reproducing what it was trained on.

- Effect of Pre-training: Initializing COMET with pre-trained language model weights substantially outperformed training the same model from scratch, underscoring the value of large-scale language pre-training for commonsense inference.
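
The novelty and relative-improvement figures above are simple ratios. The Python sketch below shows one plausible way to compute them: novelty as the share of generated tuples (or of generated objects) that never appear in the training set, and relative improvement as the gain divided by the baseline score. The function names and the toy data are invented for illustration, and the paper's exact matching rules (for example, how phrases are normalized before comparison) may differ.

```python
from typing import Iterable, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object)


def novel_tuple_rate(generated: Iterable[Triple], train: Set[Triple]) -> float:
    """Fraction of generated (s, r, o) tuples absent from the training set (exact match)."""
    generated = list(generated)
    if not generated:
        return 0.0
    return sum(t not in train for t in generated) / len(generated)


def novel_object_rate(generated: Iterable[Triple], train: Set[Triple]) -> float:
    """Fraction of generated tuples whose object phrase never occurs as an object in training."""
    train_objects = {o for _, _, o in train}
    generated = list(generated)
    if not generated:
        return 0.0
    return sum(o not in train_objects for _, _, o in generated) / len(generated)


def relative_improvement(new: float, baseline: float) -> float:
    """(new - baseline) / baseline; 0.51 corresponds to a 51% relative improvement."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (new - baseline) / baseline


# Toy data, for illustration only.
train = {
    ("PersonX goes to the mall", "xIntent", "to buy clothes"),
    ("PersonX goes to the gym", "xIntent", "to get fit"),
}
generated = [
    ("PersonX goes to the mall", "xIntent", "to buy clothes"),   # seen tuple
    ("PersonX goes to the mall", "xIntent", "to get fit"),       # new tuple, seen object
    ("PersonX goes to the mall", "xIntent", "to meet friends"),  # new tuple, new object
]
print(round(novel_tuple_rate(generated, train), 4))   # 0.6667
print(round(novel_object_rate(generated, train), 4))  # 0.3333
print(relative_improvement(3.0, 2.0))                 # 0.5
```

Separating tuple-level from object-level novelty distinguishes a model that recombines known objects with new subjects from one that produces object phrases never seen in training.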

Implications

The implications of COMET’s strong performance are twofold:

- Practical: COMET offers a scalable, automatic method to augment existing commonsense KBs, significantly reducing the manual effort involved in KB curation. This enables the creation of richer and more comprehensive KBs.

- Theoretical: The paper advances the understanding of how generative models can be repurposed for the task of commonsense knowledge generation, showcasing the utility of LLM pre-training in extracting implicit knowledge.

Future Directions

Future avenues for exploration include:

- Extending COMET to other types of knowledge bases beyond commonsense, potentially integrating Open Information Extraction (OpenIE) approaches.

- Investigating mechanisms for integrating human evaluators into the loop to refine and validate the generated knowledge.

- Exploring hierarchical or multi-task training setups to optimize the model further.

Conclusion

The paper makes a compelling case for using generative transformer models to construct commonsense KBs. By demonstrating substantial improvements in both the quality and the novelty of generated knowledge, COMET marks a step forward in automating commonsense KB construction and suggests that generative models are a plausible alternative to traditional extractive methods. The experimental results support the model's effectiveness and position it as a promising tool for improving AI systems' ability to understand and reason about the world in a human-like manner.