Communication-Efficient Momentum Stochastic Gradient Descent for Distributed Non-Convex Optimization

The paper "On the Linear Speedup Analysis of Communication Efficient Momentum SGD for Distributed Non-Convex Optimization" studies distributed momentum stochastic gradient descent (SGD) for non-convex optimization. It addresses an open question in the literature: whether distributed momentum SGD has the same linear speedup property as plain distributed SGD, and whether it can also reduce communication. Communication overhead is often the primary performance bottleneck in distributed training.

Key Contributions

- Algorithmic Development: The paper proposes a communication-efficient distributed momentum SGD method that achieves linear speedup with respect to the number of computing nodes, so adding workers proportionally reduces the number of iterations needed. This matters because practitioners favor momentum methods, particularly for training deep neural networks, where they often converge faster and generalize better.

- Theoretical Guarantees: The authors prove that the momentum-based algorithm achieves an $O(1/\sqrt{NT})$ convergence rate, where $N$ is the number of workers and $T$ is the number of iterations. This matches the rate of distributed SGD without momentum, while the workers communicate far less often than in fully synchronous training, which averages after every iteration.

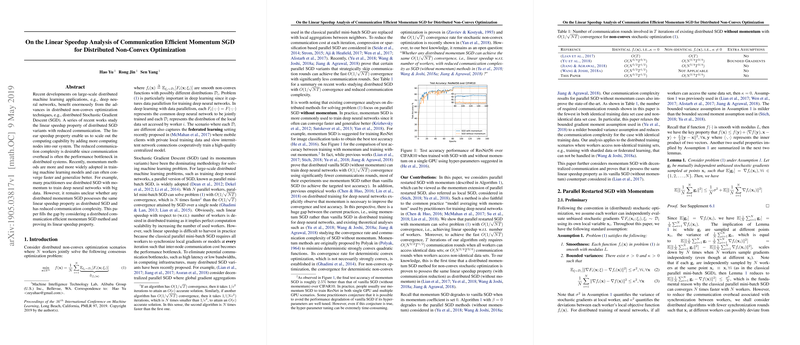

- Communication Complexity: The paper shows that the algorithm needs only $O(N^{3/2}T^{1/2})$ communication rounds for identical data and $O(N^{3/4}T^{3/4})$ for non-identical data to attain this rate. These bounds improve on previous results and give a practical advantage in distributed environments where communication is costly.

- Decentralized Communication Model: Beyond centralized communication, where all workers average through a server or an all-reduce, the paper extends the analysis to decentralized communication, in which each worker exchanges information only with its neighbors. It shows that decentralized momentum SGD retains the same linear speedup. This makes the approach applicable to settings without a central coordinator, such as networks with unreliable or heterogeneous links of the kind common in federated learning.
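The communication-efficient scheme in the contributions can be illustrated with a minimal sketch of local momentum SGD with periodic averaging. Every worker runs heavy-ball momentum steps on its own stochastic gradients. Every `period` iterations, all workers average their state in a single communication round. This is not the authors' code. The function name `local_momentum_sgd`, the scalar toy objective, the Gaussian gradient noise, the momentum form `u = beta * u + g`, and the choice to average the momentum buffers along with the parameters are all assumptions made for illustration.

```python
import random


def local_momentum_sgd(grad, x0, n_workers, n_iters, period, lr=0.1,
                       beta=0.9, noise=0.1, seed=0):
    """Hypothetical sketch of communication-efficient momentum SGD.

    Each worker runs heavy-ball momentum on its own noisy gradients; every
    `period` iterations all workers average both their parameters and their
    momentum buffers (one communication round). Scalar parameters and
    Gaussian gradient noise are simplifying assumptions.
    Returns (averaged parameter, number of communication rounds).
    """
    rng = random.Random(seed)
    xs = [x0] * n_workers   # per-worker parameters
    us = [0.0] * n_workers  # per-worker momentum buffers
    rounds = 0
    for t in range(1, n_iters + 1):
        for i in range(n_workers):
            g = grad(xs[i]) + rng.gauss(0.0, noise)  # local stochastic gradient
            us[i] = beta * us[i] + g                  # heavy-ball momentum
            xs[i] -= lr * us[i]
        if t % period == 0:
            # Communication round: replace every worker's state by the average.
            x_bar = sum(xs) / n_workers
            u_bar = sum(us) / n_workers
            xs = [x_bar] * n_workers
            us = [u_bar] * n_workers
            rounds += 1
    return sum(xs) / n_workers, rounds


if __name__ == "__main__":
    # Toy objective f(x) = (x - 3)^2 / 2, whose gradient is x - 3.
    x, rounds = local_momentum_sgd(lambda x: x - 3.0, x0=0.0, n_workers=4,
                                   n_iters=1000, period=10)
    print(rounds)  # 100 (synchronous SGD would need 1000)
    print(x)       # close to the minimizer 3.0
```

With 4 workers, 1000 iterations, and `period=10`, the sketch uses 100 communication rounds instead of the 1000 a synchronous scheme would need, and the averaged parameter still ends near the minimizer. Averaging the momentum buffers keeps each worker's velocity consistent with the shared parameters after a sync. Keeping or resetting local buffers instead is a design choice this sketch does not explore.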
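The quoted guarantees ($O(1/\sqrt{NT})$ convergence, with $O(N^{3/2}T^{1/2})$ communication rounds for identical data and $O(N^{3/4}T^{3/4})$ for non-identical data) are asymptotic. Plugging numbers into them shows how they scale. The hypothetical helpers below evaluate these expressions with all hidden constants set to 1 and derive the implied averaging period $I = T/\text{rounds}$. They also invert the rate to estimate the iterations needed for a target accuracy $\epsilon$, $T \approx 1/(N\epsilon^2)$, which is where linear speedup shows up. Treat the outputs as scaling illustrations, not predictions from the paper.

```python
import math


def linear_speedup_budget(n_workers, n_iters):
    """Evaluate the O(.) expressions with all hidden constants set to 1.

    Hypothetical helper for illustration: the results show scaling in N and T
    only, not actual iteration or round counts from the paper.
    """
    N, T = n_workers, n_iters
    rounds_identical = N ** 1.5 * T ** 0.5        # O(N^{3/2} T^{1/2})
    rounds_non_identical = N ** 0.75 * T ** 0.75  # O(N^{3/4} T^{3/4})
    return {
        "rate": 1.0 / math.sqrt(N * T),           # O(1/sqrt(NT))
        "rounds_identical": rounds_identical,
        "rounds_non_identical": rounds_non_identical,
        # Averaging period I = local steps between communication rounds.
        "period_identical": T / rounds_identical,
        "period_non_identical": T / rounds_non_identical,
    }


def iters_for_target(eps, n_workers):
    """Invert 1/sqrt(N T) <= eps to T >= 1/(N eps^2) (constants ignored)."""
    return 1.0 / (n_workers * eps ** 2)


if __name__ == "__main__":
    budget = linear_speedup_budget(16, 10**6)
    for name, value in budget.items():
        print(f"{name}: {value:.6g}")
    # Linear speedup: doubling the workers halves the iterations needed.
    print(iters_for_target(0.01, 4), iters_for_target(0.01, 8))
```

For $N = 16$ workers and $T = 10^6$ iterations, this gives a rate term of $2.5 \times 10^{-4}$. Identical data needs about 64,000 rounds (averaging roughly every 16 local steps), and non-identical data needs about 253,000 rounds (roughly every 4 steps), compared with $10^6$ rounds for synchronous SGD. Both bounds stay below $T$ only when $T \ge N^3$. For shorter runs they exceed the number of iterations and say nothing useful about how often to communicate.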
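The decentralized setting can be sketched in the same spirit. In the hypothetical `decentralized_momentum_sgd` below, each worker takes a local momentum step and then averages its parameters only with its two neighbours on a ring. The mixing weights are 1/3 each, which gives a symmetric, doubly stochastic mixing matrix. No server or global all-reduce is involved. The following are assumptions for illustration, not details taken from the paper: the ring topology, the weights, mixing at every iteration, and mixing only the parameters rather than the momentum buffers.

```python
import random


def ring_mixing(values):
    """One gossip step on a ring of n >= 3 nodes: each node replaces its value
    with the average of itself and its two neighbours (weights 1/3 each)."""
    n = len(values)
    return [(values[i - 1] + values[i] + values[(i + 1) % n]) / 3.0
            for i in range(n)]


def decentralized_momentum_sgd(grad, x0, n_workers, n_iters, lr=0.05,
                               beta=0.9, noise=0.1, seed=0):
    """Hypothetical decentralized momentum SGD on a ring topology.

    Assumptions: scalar parameters, Gaussian gradient noise, one gossip step
    per iteration, and only the parameters (not momentum buffers) are mixed.
    Returns the list of per-worker parameters.
    """
    rng = random.Random(seed)
    xs = [x0] * n_workers   # per-worker parameters
    us = [0.0] * n_workers  # per-worker momentum buffers
    for _ in range(n_iters):
        for i in range(n_workers):
            g = grad(xs[i]) + rng.gauss(0.0, noise)  # local stochastic gradient
            us[i] = beta * us[i] + g                  # heavy-ball momentum
            xs[i] -= lr * us[i]
        xs = ring_mixing(xs)  # neighbour-only communication
    return xs


if __name__ == "__main__":
    xs = decentralized_momentum_sgd(lambda x: x - 3.0, x0=0.0,
                                    n_workers=8, n_iters=1000)
    print(min(xs), max(xs))  # all workers end near the minimizer x = 3
```

Every column of the mixing matrix sums to one, so a gossip step leaves the network-wide average unchanged. On this toy quadratic, the average parameter therefore follows exactly the same momentum recursion as centralized training, while the individual workers are pulled toward consensus.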

Empirical Validation

Extensive experiments support the theoretical results. The proposed methods are tested on deep neural networks trained on datasets such as CIFAR-10 and ImageNet. The results show that fewer communication rounds do not slow convergence or reduce accuracy.

Implications and Future Work

These results matter for large-scale machine learning. Momentum can be combined with infrequent communication without losing linear speedup. Researchers and practitioners can therefore train models across distributed systems faster, without the cost of communicating at every iteration, which opens a path toward scaling machine learning applications further.

For future research, it would be valuable to explore:

- Additional variants of momentum methods that might offer even greater reductions in communication complexity.

- Robustness of the proposed methods in the highly heterogeneous environments typical of federated learning.

- Extension of the framework to stochastic optimization problems beyond neural network training.

Conclusion

This paper provides a comprehensive framework for using momentum SGD in distributed non-convex optimization, with both theoretical and practical gains in scalability and communication efficiency. These results are an important step toward handling ever larger datasets and more complex models in distributed machine learning.