An Overview of Meta-Sim: Generating Synthetic Datasets for Improved Downstream Task Performance

The paper "Meta-Sim: Learning to Generate Synthetic Datasets" presents a methodology for generating synthetic datasets that improve downstream task performance while minimizing the domain gap between synthetic and real-world data. This work addresses the critical challenge of data availability and labeling cost in machine learning, particularly for the large labeled datasets that high-performance models require.

Core Contributions

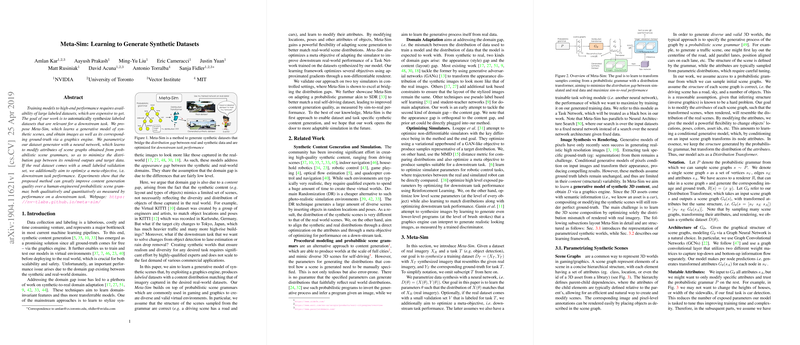

The authors propose Meta-Sim, a framework that learns to generate synthetic datasets tailored to specific downstream tasks. Meta-Sim learns a generative model, parameterized by a neural network, that modifies the attributes of scene graphs sampled from a probabilistic grammar. The goal is to align the distribution of the resulting synthetic data with the target real-world data distribution. When a small labeled real-world validation set is available, Meta-Sim can also optimize the synthetic data directly for downstream task performance on that set.
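The generator idea can be illustrated with a small sketch. A scene graph is sampled from a grammar, and a separate step then edits only the attribute values, leaving the graph structure unchanged. Everything in this example is hypothetical and chosen for illustration only: the toy grammar (a scene holds a road, and the road holds one to four cars), the attribute names, and the `modify_attributes` helper. In Meta-Sim the edit is produced by a learned neural network. Here a plain Python function stands in for it.

```python
import random
from dataclasses import dataclass, field

@dataclass
class Node:
    kind: str                                    # object class, e.g. "road" or "car"
    attrs: dict                                  # attributes the generator may change
    children: list = field(default_factory=list)

def sample_scene(rng: random.Random) -> Node:
    """Sample from a hypothetical toy grammar: Scene -> Road; Road -> Car{1..4}."""
    road = Node("road", {"width": 7.0})
    for _ in range(rng.randint(1, 4)):
        road.children.append(Node("car", {
            "x": rng.uniform(-3.0, 3.0),          # lateral position on the road
            "heading": rng.uniform(-180.0, 180.0),  # orientation in degrees
        }))
    return Node("scene", {}, [road])

def modify_attributes(node: Node, delta) -> Node:
    """Return a copy with identical structure whose attributes are rewritten by
    `delta(kind, name, value)`; a stand-in for the learned network."""
    new_attrs = {k: delta(node.kind, k, v) for k, v in node.attrs.items()}
    return Node(node.kind, new_attrs,
                [modify_attributes(c, delta) for c in node.children])

def structure(node: Node):
    """The graph structure (node kinds and nesting), ignoring attribute values."""
    return (node.kind, tuple(structure(c) for c in node.children))

if __name__ == "__main__":
    scene = sample_scene(random.Random(0))
    # Hypothetical "learned" edit: pull car headings toward the road direction.
    edited = modify_attributes(
        scene, lambda kind, k, v: v * 0.1 if (kind, k) == ("car", "heading") else v)
    assert structure(scene) == structure(edited)  # structure is preserved
    print(edited)
```

Keeping structure and attributes separate mirrors the division of labor described above. The grammar decides what objects appear, and the generator only adjusts how they are configured.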

Methodology

The paper presents a detailed architectural design and training pipeline for Meta-Sim:

- Scene Graphs and Probabilistic Grammars: Scene graphs are a structured representation of 3D worlds with hierarchical dependencies among scene elements, and they are sampled from a probabilistic grammar. Meta-Sim's neural network keeps the sampled structure and adjusts the attributes of the nodes, such as object position, rotation, or appearance. This brings the generated scenes closer to the diversity and layout seen in real-world datasets.

- Distribution Matching and Task Optimization: Meta-Sim introduces a joint training objective with two parts. The first is distribution matching, which uses Maximum Mean Discrepancy (MMD) between synthetic and real data. The second is a meta-objective of downstream task performance. For the meta-objective, a task network is trained on the generated synthetic data and evaluated on the real validation set, and this performance serves as the signal for updating the network that modifies the scene graph attributes.

- Empirical Validation: The framework was validated on toy datasets (synthesized MNIST-like digit images) and on more complex self-driving scenarios, where models trained on synthetic driving scenes were evaluated on KITTI data. The reported experiments show improved task performance, suggesting that Meta-Sim moves the synthetic data distribution closer to the target real-world distribution.
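The two terms of the training objective can be sketched with small, self-contained estimators. The `mmd2` function computes the biased (V-statistic) squared MMD between two sets of feature vectors using a Gaussian kernel. In Meta-Sim these vectors would be features of rendered synthetic images and of real images, but here they are toy lists. Rendering and training a task network are not differentiable with respect to the scene attributes. For that reason, the task term is sketched with a score-function (REINFORCE-style) gradient estimator, which is one standard way to obtain gradients from a black-box reward. The Gaussian attribute distribution, the quadratic `reward`, and all parameter values are hypothetical stand-ins. They are not the paper's exact setup.

```python
import math
import random

def rbf(x, y, sigma=1.0):
    """Gaussian kernel k(x, y) = exp(-||x - y||^2 / (2 * sigma^2))."""
    d2 = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-d2 / (2.0 * sigma ** 2))

def mmd2(xs, ys, sigma=1.0):
    """Biased estimate of squared MMD between samples xs and ys (lists of vectors).
    Zero when both samples are identical; grows as the distributions separate."""
    kxx = sum(rbf(a, b, sigma) for a in xs for b in xs) / len(xs) ** 2
    kyy = sum(rbf(a, b, sigma) for a in ys for b in ys) / len(ys) ** 2
    kxy = sum(rbf(a, b, sigma) for a in xs for b in ys) / (len(xs) * len(ys))
    return kxx + kyy - 2.0 * kxy

def reinforce_grad(mu, std, reward, n=2000, rng=None):
    """Score-function estimate of d/dmu E_{a ~ N(mu, std^2)}[reward(a)].
    `reward` is treated as a black box, e.g. hypothetical validation accuracy
    of a task network trained on data rendered with attribute value `a`."""
    if rng is None:
        rng = random.Random(0)
    samples = [rng.gauss(mu, std) for _ in range(n)]
    rewards = [reward(a) for a in samples]
    baseline = sum(rewards) / n  # mean-reward baseline to reduce variance
    # d/dmu log N(a; mu, std^2) = (a - mu) / std^2
    return sum((r - baseline) * (a - mu) / std ** 2
               for a, r in zip(samples, rewards)) / n

if __name__ == "__main__":
    real = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
    synthetic = [[5.0, 5.0], [6.0, 5.0], [5.0, 6.0]]
    print("MMD^2:", mmd2(synthetic, real))
    # Toy reward peaking at a = 2: the estimate should point toward increasing mu.
    print("grad:", reinforce_grad(0.0, 1.0, lambda a: -(a - 2.0) ** 2))
```

In a full system, both signals would update the attribute-modifying network. The MMD term pulls the synthetic feature distribution toward the real one, and the task reward pushes toward data that actually helps the downstream model.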

Implications and Future Directions

The implications of this work are significant for machine learning and artificial intelligence. Primarily, it offers a way to reduce the cost and time of labeling by automatically generating synthetic datasets that are task-specific and domain-relevant. This is particularly valuable in commercial and industrial applications that continually need diverse datasets for practical tasks such as autonomous driving, where real-world data availability is a bottleneck.

Looking forward, future research avenues include enhancing the flexibility of the probabilistic grammars to dynamically adapt to varying task requirements and integrating Meta-Sim with differentiable renderers, which may offer more refined adjustments to scene attributes. Additionally, further exploration into handling highly variable domains and multimodal distributions could expand the applicability of Meta-Sim to broader AI tasks.

In conclusion, Meta-Sim lays a solid foundation for learning to bridge the gap between simulated and real-world data distributions, offering a scalable path toward continually improving model training pipelines.