Towards VQA Models That Can Read: An Analysis

The paper "Towards VQA Models That Can Read" takes a significant step toward giving Visual Question Answering (VQA) systems the ability to read and reason about text in images. It identifies a critical limitation of contemporary VQA models: they cannot process text embedded in images, a capability frequently needed in practical applications such as assisting visually impaired users.

Introduction to TextVQA and LoRRA

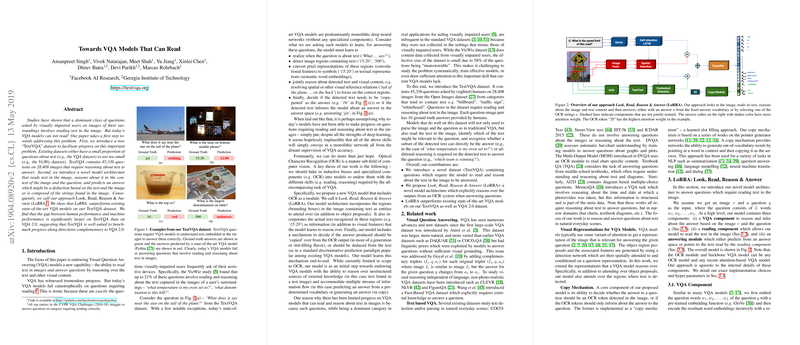

The authors address a shortcoming of existing VQA datasets: they either contain few questions that require reading text or are too small to be useful. To overcome this, they introduce TextVQA, a dataset of 45,336 questions on 28,408 images. The images are sourced from the Open Images dataset, specifically from categories that tend to contain text, e.g., "billboard" and "traffic sign". The curation is designed so that most questions require recognizing and reasoning about the text in the image.
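The category-based image selection described above can be sketched as a simple filter. This is a minimal illustration under assumptions: the `labels_by_image` mapping, the `select_text_images` function, and the category set (only the two examples named above) are hypothetical, and the sketch covers only the category-selection step, not the authors' full collection and annotation pipeline.

```python
from typing import Dict, Iterable, List, Set

# Hypothetical category set: only the two examples named in the text.
TEXT_CATEGORIES: Set[str] = {"billboard", "traffic sign"}


def select_text_images(
    labels_by_image: Dict[str, Iterable[str]],
    categories: Set[str] = TEXT_CATEGORIES,
) -> List[str]:
    """Return sorted image ids whose labels include at least one text-bearing category."""
    wanted = {c.lower() for c in categories}
    return sorted(
        image_id
        for image_id, labels in labels_by_image.items()
        # Case-insensitive match between image labels and the wanted categories.
        if wanted.intersection(label.lower() for label in labels)
    )
```

For example, `select_text_images({"img1": ["Billboard", "Sky"], "img2": ["Dog"], "img3": ["Traffic sign"]})` returns `["img1", "img3"]`.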

The LoRRA Model Architecture

Central to the paper is Look, Read, Reason & Answer (LoRRA), a model architecture that integrates Optical Character Recognition (OCR) as a module within the VQA framework. LoRRA runs an OCR module over the image to detect and recognize words, then uses these OCR tokens, together with visual features, to answer the question. The architecture allows the model to:

- Identify text-centric questions.

- Extract and parse textual content within images.

- Integrate visual and textual data to reason and derive answers.

- Decide whether the answer should be copied directly from the detected OCR tokens or selected from a fixed vocabulary of answers.

Rather than expecting a single monolithic network to learn every required skill from data, this design plugs in a specialist OCR component, so the model benefits directly from advances in OCR technology.
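The look/read/reason/answer flow above can be illustrated with a toy forward pass. This is a minimal sketch, not the paper's implementation: it assumes plain dot-product attention, an elementwise fusion chosen for brevity, and hand-made vectors in place of learned features, and every function name is hypothetical. The part it is meant to show is the joint answer space: the model scores the fixed vocabulary and the OCR tokens together, and an index past the vocabulary means "copy this OCR token".

```python
import math
from typing import List, Sequence

Vector = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Vector, items: Sequence[Vector]) -> Vector:
    """Question-conditioned soft attention: a weighted average of item vectors."""
    weights = softmax([dot(query, item) for item in items])
    dim = len(items[0])
    return [sum(w * item[d] for w, item in zip(weights, items)) for d in range(dim)]


def answer(
    question: Vector,
    image_regions: Sequence[Vector],
    ocr_tokens: Sequence[str],
    ocr_embeddings: Sequence[Vector],
    vocab: Sequence[str],
    vocab_embeddings: Sequence[Vector],
) -> str:
    visual_ctx = attend(question, image_regions)  # Look: attend over image regions.
    text_ctx = attend(question, ocr_embeddings)  # Read: attend over OCR tokens.
    # Reason: fuse the question with both contexts (toy elementwise fusion).
    fused = [q * (v + t) for q, v, t in zip(question, visual_ctx, text_ctx)]
    # Answer: score vocabulary words and OCR tokens in one joint answer space.
    scores = [dot(fused, e) for e in vocab_embeddings] + [dot(fused, e) for e in ocr_embeddings]
    best = max(range(len(scores)), key=scores.__getitem__)
    if best < len(vocab):
        return vocab[best]
    return ocr_tokens[best - len(vocab)]  # copy the detected OCR token
```

With `question=[1.0, 1.0]`, one image region `[0.5, 0.5]`, OCR tokens `["STOP", "ok"]` embedded as `[0.0, 2.0]` and `[0.1, 0.1]`, and vocabulary `["yes", "no"]` embedded as `[1.0, 0.0]` and `[0.0, 0.5]`, the copied token `"STOP"` scores highest. In the real model these vectors and the fusion are learned.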

Strong Numerical Results

LoRRA substantially outperforms existing VQA models on TextVQA. Pythia v0.3 augmented with LoRRA reaches a validation accuracy of 26.56%, compared with 13.04% for Pythia v0.3 without the reading module, roughly doubling accuracy on a dataset where text in natural images poses distinct challenges.
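TextVQA reports results with the standard VQA accuracy metric: each question has 10 human answers, and a prediction earns min(n/3, 1), where n is the number of annotators who gave that answer, averaged over the ten ways of leaving one annotator out. The sketch below is a minimal version of that metric; the function names are illustrative, and `normalize` is a deliberate simplification (assumption), since the official evaluation script also normalizes punctuation, articles, and number words.

```python
from itertools import combinations
from typing import Sequence


def normalize(answer: str) -> str:
    # Simplified normalization (assumption): lowercase and strip whitespace only.
    return answer.strip().lower()


def vqa_accuracy(prediction: str, human_answers: Sequence[str]) -> float:
    """VQA accuracy: min(matches / 3, 1), averaged over leave-one-out annotator subsets."""
    if not human_answers:
        raise ValueError("need at least one human answer")
    pred = normalize(prediction)
    answers = [normalize(a) for a in human_answers]
    subsets = list(combinations(range(len(answers)), len(answers) - 1))
    total = 0.0
    for subset in subsets:
        matches = sum(1 for i in subset if answers[i] == pred)
        total += min(matches / 3.0, 1.0)
    return total / len(subsets)
```

A prediction matching 3 of the 10 answers scores 0.9 (up to floating-point rounding), and one matching a single answer scores 0.3, so partial agreement among annotators earns partial credit.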

The paper also reports human accuracy on TextVQA of around 85%. The large gap between this figure and LoRRA's accuracy shows how difficult the task is and how much room remains for improving how VQA systems handle text.
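The headline comparison reduces to simple arithmetic on the validation accuracies above and the approximate 85% human figure:

```latex
\begin{aligned}
\Delta_{\text{LoRRA}} &= 26.56 - 13.04 = 13.52 \text{ points}, \qquad \frac{26.56}{13.04} \approx 2.04,\\
\Delta_{\text{human}} &\approx 85 - 26.56 = 58.44 \text{ points}.
\end{aligned}
```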

Implications and Future Directions

Integrating text-reading capabilities into VQA models has two main implications:

- Practical Applications: Better text-centric VQA models can directly improve assistive technologies for visually impaired users, for example by reading prescription labels or identifying bus numbers.

- Benchmarking and Dataset Creation: The TextVQA dataset serves as a new benchmark, fostering further advancements and standardizing evaluation protocols in the VQA research community.

Future research can explore:

- Improved OCR Integration: Because LoRRA can only copy text that its OCR module detects, stronger OCR systems should further raise accuracy. Current OCR modules still miss text, especially when it is occluded or rotated.

- Dynamic Answer Generation: Developing models that generate answers token by token, for example with sequence-to-sequence learning, so they can compose multi-token answers (LoRRA copies at most a single OCR token) and rely less on a fixed vocabulary.

- Cross-dataset Learning: Improving model generalizability across diverse datasets to handle varied text representations and contexts.
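The dynamic-generation direction can be illustrated with a greedy decoder that, at every step, chooses among fixed-vocabulary words, OCR tokens, and an end marker, so multi-token answers become possible. This is a hypothetical sketch, not a method from the paper: `greedy_decode`, `END`, and the `score_step` callback (which would be a trained model in practice) are assumptions, and `toy_scorer` is a hard-coded stand-in for that model.

```python
from typing import Callable, List, Sequence

END = "<end>"

# Hypothetical scorer: given the tokens decoded so far, return one score per
# candidate, ordered as vocabulary words, then OCR tokens, then END.
Scorer = Callable[[List[str]], Sequence[float]]


def greedy_decode(
    vocab: Sequence[str], ocr_tokens: Sequence[str], score_step: Scorer, max_len: int = 5
) -> str:
    candidates = list(vocab) + list(ocr_tokens) + [END]
    output: List[str] = []
    for _ in range(max_len):
        scores = score_step(output)
        best = max(range(len(candidates)), key=lambda i: scores[i])
        if candidates[best] == END:
            break  # the scorer chose to stop
        output.append(candidates[best])
    return " ".join(output)


def toy_scorer(prefix: List[str]) -> List[float]:
    """Toy stand-in for a model: picks candidate 2, then 3, then END (index 4)."""
    target = [2, 3, 4][min(len(prefix), 2)]
    return [1.0 if i == target else 0.0 for i in range(5)]


if __name__ == "__main__":
    print(greedy_decode(["yes", "no"], ["coca", "cola"], toy_scorer))  # coca cola
```

Here the decoder copies two OCR tokens in sequence to produce "coca cola", an answer a single-token copy mechanism could not output.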

Conclusion

"Towards VQA Models That Can Read" addresses a critical gap in the field of Visual Question Answering by proposing the TextVQA dataset and the LoRRA model. The research sets a precedent for combining visual and textual data within VQA systems and shows promising improvements over existing models. With substantial numerical results and a clear pathway for future research, this work makes a notable contribution to the field of AI and computer vision, specifically in enhancing the utility and accuracy of VQA systems in real-world applications.