Heterogeneous Graph Attention Network

The paper "Heterogeneous Graph Attention Network" by Wang et al. presents an attention-based approach to learning node representations on heterogeneous graphs. Graphs, which represent entities and their relationships, underlie many real-world datasets, such as social networks and citation networks. Many of these graphs are heterogeneous: they contain multiple types of nodes and edges, each carrying its own semantic information. This complexity poses challenges that Graph Neural Networks (GNNs) designed for homogeneous graphs do not fully address.

Introduction to Heterogeneous Graph Neural Networks

Traditional GNNs, including convolutional models like GCN and GraphSAGE, perform well on homogeneous graphs, where nodes and edges are of a single type. Applied directly to heterogeneous graphs, however, they fall short, because they treat every node and edge alike and so discard the type-specific semantics these graphs carry. Graph Attention Networks (GAT) add an attention mechanism that lets the model weight a node's neighbors by importance, but such attention mechanisms have not been widely adapted for heterogeneous settings.

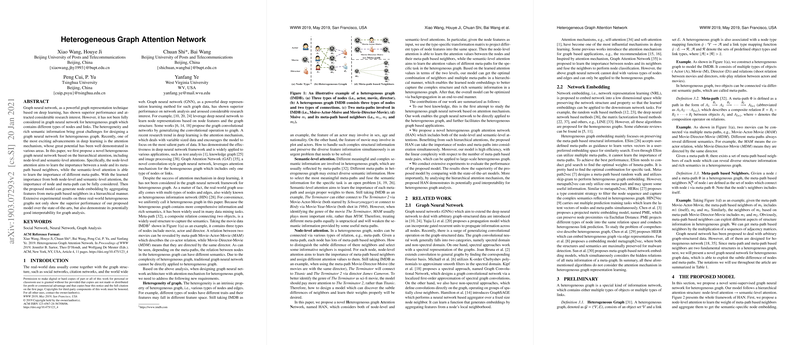

Overview of HAN

The authors introduce the Heterogeneous graph Attention Network (HAN), which applies hierarchical attention at two levels: node level and semantic level. Node-level attention weights a node's neighbors within a single meta-path, and semantic-level attention weights the meta-paths themselves. A meta-path is a sequence of relations connecting nodes, such as Movie-Actor-Movie in film datasets, which links two movies that share an actor.
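To make the meta-path idea concrete, the following minimal sketch collects Movie-Actor-Movie neighbors from a toy cast table. The function name `metapath_neighbors` and the toy data are illustrative assumptions, not taken from the paper; the only convention borrowed from the paper is that a node counts as its own meta-path based neighbor.

```python
from collections import defaultdict


def metapath_neighbors(movie_actors):
    """Return the Movie-Actor-Movie neighbors of every movie.

    movie_actors maps a movie id to the set of actor ids appearing in it
    (hypothetical toy input). Each movie is included in its own neighbor set.
    """
    # Invert the cast table: actor -> movies the actor appears in.
    actor_movies = defaultdict(set)
    for movie, actors in movie_actors.items():
        for actor in actors:
            actor_movies[actor].add(movie)

    # Walk Movie -> Actor -> Movie and collect every movie reached.
    neighbors = {}
    for movie, actors in movie_actors.items():
        reached = {movie}
        for actor in actors:
            reached |= actor_movies[actor]
        neighbors[movie] = reached
    return neighbors


toy_cast = {"m1": {"a1", "a2"}, "m2": {"a2"}, "m3": {"a3"}}
mam = metapath_neighbors(toy_cast)
```

Here `m1` and `m2` become neighbors because they share actor `a2`, while `m3` is connected only to itself.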

Node-Level Attention

The node-level attention mechanism in HAN considers the importance of a node's neighbors under specific meta-paths. The process starts by projecting node features into a unified space via type-specific transformation matrices. Then, using a self-attention mechanism, the importance of each meta-path-based neighbor is calculated, normalized, and aggregated, enabling the network to emphasize influential neighbors while downplaying less relevant ones.
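The steps above (project, score, normalize, aggregate) can be sketched in NumPy for a single attention head and a single meta-path. This is a minimal illustration under stated assumptions rather than the authors' implementation: the function and parameter names are hypothetical, the weights are random instead of learned, and LeakyReLU and ELU are assumed as the scoring and output activations.

```python
import numpy as np


def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)


def elu(x):
    return np.where(x > 0, x, np.expm1(np.minimum(x, 0.0)))


def node_level_attention(features, node_types, type_proj, neighbors, att_vec):
    """One attention head over one meta-path (illustrative sketch).

    features:   list of 1-D arrays, raw features per node
    node_types: list of type keys, one per node
    type_proj:  dict mapping a type key to its projection matrix
    neighbors:  dict mapping a node index to its meta-path based
                neighbor indices (the node itself included)
    att_vec:    attention vector of length 2 * projected dimension
    """
    # 1. Project every node into a shared space with its type's matrix.
    h = np.stack([type_proj[t] @ x for x, t in zip(features, node_types)])

    z = np.zeros_like(h)
    weights = {}
    for i, nbrs in neighbors.items():
        nbrs = list(nbrs)
        # 2. Score each (node, neighbor) pair from the concatenated features.
        center = np.repeat(h[i][None, :], len(nbrs), axis=0)
        pairs = np.concatenate([center, h[nbrs]], axis=1)
        scores = leaky_relu(pairs @ att_vec)
        # 3. Normalize the scores over the neighborhood with a softmax.
        scores = scores - scores.max()
        alpha = np.exp(scores) / np.exp(scores).sum()
        # 4. Aggregate the projected neighbor features with those weights.
        z[i] = elu(alpha @ h[nbrs])
        weights[i] = alpha
    return z, weights


rng = np.random.default_rng(0)
features = [rng.normal(size=4) for _ in range(3)]
node_types = ["movie"] * 3
type_proj = {"movie": rng.normal(size=(2, 4))}
neighbors = {0: [0, 1], 1: [0, 1], 2: [2]}
att_vec = rng.normal(size=4)
z, alpha = node_level_attention(features, node_types, type_proj, neighbors, att_vec)
```

Each entry of `alpha` sums to one over that node's neighborhood, so a node with no neighbor other than itself simply keeps its own projected feature.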

Semantic-Level Attention

HAN extends beyond node-level attention by incorporating semantic-level attention to weight the significance of different meta-paths. It transforms each semantic-specific embedding through a nonlinear function, scores the result against a shared semantic-level attention vector, averages the scores over all nodes, and normalizes them across meta-paths with a softmax. The final node embeddings are the weighted combination of the semantic-specific embeddings under these meta-path weights.
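A minimal NumPy sketch of this fusion step follows. It assumes tanh as the nonlinear function and averages each meta-path's score over all nodes before a softmax across meta-paths; the names `semantic_level_attention`, `W`, `b`, and `q` are illustrative, and the parameters are random rather than learned.

```python
import numpy as np


def semantic_level_attention(embeddings, W, b, q):
    """Fuse per-meta-path embeddings into one embedding per node.

    embeddings: list of (n_nodes, d) arrays, one per meta-path
    W, b:       weight matrix (d_att, d) and bias (d_att,) of the nonlinear map
    q:          semantic-level attention vector of length d_att
    """
    # Score each meta-path: transform, compare with q, average over nodes.
    scores = np.array([np.mean(np.tanh(Z @ W.T + b) @ q) for Z in embeddings])
    # Normalize the scores across meta-paths with a softmax.
    scores = scores - scores.max()
    beta = np.exp(scores) / np.exp(scores).sum()
    # The final embedding is the weighted sum of the per-meta-path embeddings.
    fused = sum(weight * Z for weight, Z in zip(beta, embeddings))
    return fused, beta


rng = np.random.default_rng(1)
Z_mam = rng.normal(size=(5, 3))  # e.g. embeddings under Movie-Actor-Movie
Z_other = rng.normal(size=(5, 3))  # embeddings under a second meta-path
W, b, q = rng.normal(size=(4, 3)), rng.normal(size=4), rng.normal(size=4)
fused, beta = semantic_level_attention([Z_mam, Z_other], W, b, q)
```

Because `beta` holds one weight per meta-path rather than one per node, every node shares the same meta-path weighting in this sketch.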

Experimental Results

The authors conducted experiments on real-world heterogeneous graphs drawn from the DBLP, ACM, and IMDB datasets. Performance was measured on node classification and node clustering tasks, comparing HAN against baselines including DeepWalk, metapath2vec, ESim, and GAT. HAN consistently outperformed the baselines:

- Classification: In terms of Macro-F1 and Micro-F1 scores, HAN showed superior performance across all datasets. Particularly, it achieved notable improvements on ACM and IMDB datasets, underscoring the model's strength in leveraging the hierarchical semantics.

- Clustering: The clustering tasks, evaluated using normalized mutual information (NMI) and the adjusted Rand index (ARI), further reinforced HAN's effectiveness. The model demonstrated high intra-class similarity and clear separation of distinct classes.
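For readers unfamiliar with the two classification metrics, the sketch below computes Macro-F1 and Micro-F1 from scratch on made-up labels. The helper name `f1_scores` and the label vectors are hypothetical and unrelated to the paper's reported numbers.

```python
def f1_scores(y_true, y_pred):
    """Return (macro_f1, micro_f1) for single-label multi-class predictions."""
    labels = sorted(set(y_true) | set(y_pred))
    tp = {c: 0 for c in labels}
    fp = {c: 0 for c in labels}
    fn = {c: 0 for c in labels}
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class gains a false positive
            fn[truth] += 1  # true class gains a false negative

    def f1(tp_count, fp_count, fn_count):
        denom = 2 * tp_count + fp_count + fn_count
        return 2 * tp_count / denom if denom else 0.0

    # Macro-F1: average the per-class F1, so every class counts equally.
    macro = sum(f1(tp[c], fp[c], fn[c]) for c in labels) / len(labels)
    # Micro-F1: pool the counts first, so every sample counts equally.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    return macro, micro


y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 1, 2, 2, 2]
macro_f1, micro_f1 = f1_scores(y_true, y_pred)
```

In the single-label setting Micro-F1 equals plain accuracy (5 of 6 correct here), while Macro-F1 is pulled down more by the class that is predicted poorly.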

Theoretical and Practical Implications

The hierarchical attention in HAN brings two practical benefits: interpretability and efficiency. The learned weights on neighbors and meta-paths indicate which nodes and which meta-paths the model relies on, offering insight into the relationships in heterogeneous graphs and supporting analysis in applications like recommendation systems and social network analytics. Efficiency-wise, HAN's complexity is linear in the number of nodes and meta-path based node pairs, making it applicable to large-scale heterogeneous graphs.

Future Directions

The paper paves the way for several future research directions:

- Scalability Enhancements: Further optimizing HAN to handle even larger graphs efficiently.

- Generalization and Adaptability: Extending HAN's applicability to other real-world datasets across various domains.

- Integration with Other Models: Combining HAN with other advanced GNNs for enriched representation and more nuanced understanding of heterogeneous data.

Conclusion

The Heterogeneous graph Attention Network proposed by Wang et al. advances graph neural networks by handling the multiple node and edge types of heterogeneous graphs directly. Its hierarchical design learns node-level and semantic-level attention and combines them into a single node embedding, leading to superior performance on classification and clustering tasks. The interpretability and efficiency of HAN highlight its potential for broad applications in analyzing semantically rich and structurally complex graphs.