Comparative Analysis of Faster R-CNN and YOLOv3 for Car Detection in UAV Imagery

The paper under examination compares two CNN-based object detectors, Faster R-CNN and YOLOv3, for detecting cars in aerial images captured by UAVs. It evaluates them on precision, sensitivity (recall), and processing time, the properties that matter most for real-time traffic monitoring and surveillance.

Theoretical Insights into Faster R-CNN and YOLOv3

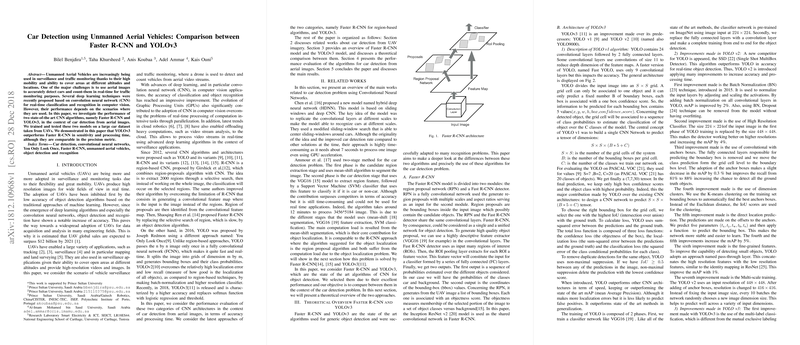

Faster R-CNN belongs to the R-CNN family and combines a Region Proposal Network (RPN) and a Fast R-CNN detector in a single unified network. Both components share the convolutional feature map produced by the backbone: the RPN slides over this map to propose candidate object regions, and the Fast R-CNN head pools features for each proposal, classifies it, and refines its bounding box. In the configuration evaluated in the paper, features are extracted with an Inception ResNet v2 backbone, a deep and comparatively heavy feature extractor.
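The RPN's candidate regions start from a fixed set of reference boxes ("anchors") tiled over the shared feature map. The sketch below generates them, assuming the defaults from the original Faster R-CNN paper (feature stride 16, scales of 128, 256 and 512 pixels, aspect ratios 1:2, 1:1 and 2:1); the configuration used in this study may differ, and the function name `generate_anchors` is hypothetical. It covers only anchor placement: scoring each anchor as object or background and regressing box offsets is done by the RPN's learned layers.

```python
from itertools import product


def generate_anchors(feat_h, feat_w, stride=16,
                     scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Tile anchors over a feat_h x feat_w feature map (hypothetical helper).

    Returns (x1, y1, x2, y2) tuples in input-image pixels: one anchor per
    (scale, ratio) pair at every cell, where ratio = height / width.
    Defaults assume the original Faster R-CNN settings, not this study's.
    """
    anchors = []
    for row, col in product(range(feat_h), range(feat_w)):
        # Centre of this feature-map cell, projected back to image pixels.
        cx = (col + 0.5) * stride
        cy = (row + 0.5) * stride
        for scale, ratio in product(scales, ratios):
            # Keep the area at scale**2 while making height / width == ratio.
            w = scale / ratio ** 0.5
            h = scale * ratio ** 0.5
            anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


anchors = generate_anchors(2, 3)
print(len(anchors))  # 2 * 3 cells * 9 anchors per cell = 54
```

Anchors near the image border extend past it (the second anchor here spans -56 to 72 pixels on both axes); the original Faster R-CNN paper ignores such cross-boundary anchors during training.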

YOLOv3, by contrast, is a single-stage detector: one fully convolutional network processes the entire image in a single forward pass, which is what enables real-time performance. Its Darknet-53 backbone feeds detection heads at three different scales, improving its ability to detect small objects, and class prediction uses independent logistic classifiers instead of a softmax, which allows multi-label classification.
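Decoding a single raw prediction illustrates both the box parameterisation and the logistic class scores. The equations follow the YOLOv3 paper: the box centre is a sigmoid-squashed offset inside the responsible grid cell, and the box size is an anchor prior scaled by an exponential. The function and argument names, the conversion to pixels by multiplying by the stride, and the example values are assumptions for illustration.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def decode_prediction(t, cell, prior, stride):
    """Decode one YOLOv3-style raw prediction (hypothetical helper).

    t      -- raw outputs (tx, ty, tw, th, t_obj, *class_logits)
    cell   -- (cx, cy) grid-cell offset, in cells
    prior  -- (pw, ph) anchor prior size, in pixels
    stride -- pixels per grid cell at this output scale (assumed)
    """
    tx, ty, tw, th, t_obj, *class_logits = t
    cx, cy = cell
    pw, ph = prior
    # Centre: the sigmoid keeps the offset inside the responsible cell.
    bx = (sigmoid(tx) + cx) * stride
    by = (sigmoid(ty) + cy) * stride
    # Size: exponential scaling of the anchor prior.
    bw = pw * math.exp(tw)
    bh = ph * math.exp(th)
    objectness = sigmoid(t_obj)
    # One independent sigmoid per class instead of a softmax, so scores
    # need not sum to 1 and several labels can be active at once.
    class_scores = [sigmoid(z) for z in class_logits]
    return (bx, by, bw, bh), objectness, class_scores


box, obj, scores = decode_prediction(
    (0.0, 0.0, 0.0, 0.0, 0.0, 2.0, 2.0), cell=(3, 5), prior=(30.0, 20.0), stride=32)
print(box, obj)  # (112.0, 176.0, 30.0, 20.0) 0.5
```

Because each class score passes through its own sigmoid, both classes above score about 0.88 at the same time, which a softmax over the same logits would not allow.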

Experimental Evaluation

The empirical evaluation used a UAV dataset of 270 images, partitioned into a training set and a test set. The Faster R-CNN model was trained with TensorFlow's Object Detection API using stochastic gradient descent, whereas YOLOv3 was trained from its default configuration and fine-tuned on this dataset.
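A reproducible train/test partition of an image list can be sketched as follows. The 80/20 ratio, the fixed seed, the file names and the helper `split_dataset` are all hypothetical; they are not the split used in the paper.

```python
import random


def split_dataset(image_ids, train_fraction=0.8, seed=0):
    """Shuffle with a fixed seed, then cut into train and test lists.

    train_fraction and seed are illustrative assumptions.
    """
    ids = list(image_ids)
    # A dedicated Random instance keeps the split independent of global state.
    random.Random(seed).shuffle(ids)
    n_train = round(len(ids) * train_fraction)
    return ids[:n_train], ids[n_train:]


# Hypothetical file names for a 270-image dataset.
images = [f"img_{i:03d}.jpg" for i in range(270)]
train, test = split_dataset(images)
print(len(train), len(test))  # 216 54
```

Fixing the seed means every run, and both detectors, see exactly the same test images, which keeps their metrics comparable.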

Performance was measured with precision, recall, F1 score, and processing time. YOLOv3 achieved a much higher recall (99.07% versus 79.40% for Faster R-CNN), meaning it missed far fewer of the cars present in the test images. Precision was comparably high for both algorithms. YOLOv3 was also much faster, with an average processing time of 0.057 s versus 1.39 s for Faster R-CNN, roughly a 24-fold difference. This gap makes YOLOv3 the better fit for real-time applications, where rapid processing is crucial.
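The three accuracy metrics follow from counts of true positives (TP), false positives (FP) and false negatives (FN): precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 is their harmonic mean. The sketch below derives these counts by greedily matching score-sorted detections to ground-truth boxes. The 0.5 IoU threshold and the greedy matching rule are PASCAL VOC-style assumptions, not necessarily the paper's protocol, and all function names are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_counts(detections, ground_truth, iou_threshold=0.5):
    """Return (tp, fp, fn) for one image.

    detections   -- list of (score, box); highest scores are matched first
    ground_truth -- list of boxes; each can be matched at most once
    """
    unmatched = list(ground_truth)
    tp = fp = 0
    for _, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best = max(unmatched, key=lambda g: iou(box, g), default=None)
        if best is not None and iou(box, best) >= iou_threshold:
            unmatched.remove(best)  # consume this ground-truth car
            tp += 1
        else:
            fp += 1  # no sufficiently overlapping car left
    return tp, fp, len(unmatched)  # leftover ground truth = missed cars


def precision_recall_f1(tp, fp, fn):
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


gt = [(0, 0, 10, 10), (20, 20, 30, 30), (40, 40, 50, 50)]
dets = [(0.9, (0, 0, 10, 10)), (0.8, (21, 21, 31, 31)), (0.3, (100, 100, 110, 110))]
counts = match_counts(dets, gt)
print(counts)  # (2, 1, 1)
print(precision_recall_f1(*counts))
```

Summing TP, FP and FN over all test images before calling `precision_recall_f1` gives dataset-level figures of the kind reported above; a detector that misses many cars, as Faster R-CNN did here, accumulates FN and so loses recall even when its precision stays high.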

Implications and Future Considerations

This analysis reflects the broader trend toward single-stage deep learning detectors such as YOLOv3 for real-time object detection in dynamic settings like UAV-based traffic surveillance. YOLOv3's higher recall and speed make it well suited to scenarios that require fast, comprehensive coverage, although further modifications could be explored to improve its precision and reduce false positives.

The paper also outlines future work: extending detection to multiple vehicle types, accounting for varied lighting conditions, and covering more diverse environments. Such extensions would help establish the usefulness of UAV-based monitoring systems across a broader range of operating conditions.

Overall, the findings offer practical guidance for researchers and practitioners optimizing object detectors for UAV platforms. By laying out the strengths and limitations of Faster R-CNN and YOLOv3, the paper points future work toward targeted adaptations and identifies components that could be refined to improve detection performance in practical traffic surveillance applications.