Mixed Precision Quantization of ConvNets via Differentiable Neural Architecture Search

The paper "Mixed Precision Quantization of ConvNets via Differentiable Neural Architecture Search" presents an approach to compressing convolutional neural networks (ConvNets) by assigning different precisions (bit-widths) to different layers. It treats the choice of per-layer precision as a neural architecture search problem and solves it with an efficient framework called Differentiable Neural Architecture Search (DNAS). The goal is to reduce computational cost and model size, which is especially important when deploying models on resource-constrained devices such as mobile phones and embedded systems.
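In this setting, a layer's "precision" is the number of bits used to represent its values. The minimal sketch below applies a simple symmetric uniform quantizer to three hypothetical layers at different bit-widths. The quantizer (`quantize_symmetric`), the layer names and the bit-width assignment are illustrative assumptions, not the paper's exact quantization scheme. The sketch only shows that fewer bits give coarser values and a larger quantization error, which is the trade-off a mixed precision assignment has to balance.

```python
import random


def quantize_symmetric(values, bits):
    """Round each value to the nearest of 2**bits - 1 evenly spaced levels in
    [-m, m], where m is the largest absolute value in `values`."""
    if bits < 2:
        raise ValueError("this sketch supports bit-widths of 2 or more")
    max_abs = max(abs(v) for v in values) or 1.0
    levels = 2 ** (bits - 1) - 1  # e.g. 4 bits -> integer codes in [-7, 7]
    scale = max_abs / levels
    return [round(v / scale) * scale for v in values]


def mean_squared_error(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


# Hypothetical layers and a hypothetical mixed precision assignment.
random.seed(0)
layers = {name: [random.gauss(0.0, 1.0) for _ in range(1000)] for name in ("conv1", "conv2", "fc")}
bit_widths = {"conv1": 8, "conv2": 4, "fc": 2}

for name, weights in layers.items():
    bits = bit_widths[name]
    error = mean_squared_error(weights, quantize_symmetric(weights, bits))
    print(f"{name}: {bits} bits, quantization MSE = {error:.5f}")
```

A uniform-precision scheme would use one entry in `bit_widths` for every layer. Mixed precision lets layers that tolerate quantization well use fewer bits.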

Key Contributions

The primary contribution of the paper is DNAS, a differentiable architecture search method that optimizes layer-wise precision assignments with gradient-based optimization instead of exhaustive search. The search space itself remains exponential in the number of layers. DNAS reduces the cost of exploring that space, which makes the search computationally feasible even on large datasets. The main innovations include:

- Mixed Precision Quantization Model: Traditional quantization methods use the same bit-width for every layer. This paper instead assigns different bit-widths to different layers according to their effect on accuracy and on model size or computational cost. This accounts for the fact that some layers are much more sensitive to quantization than others.

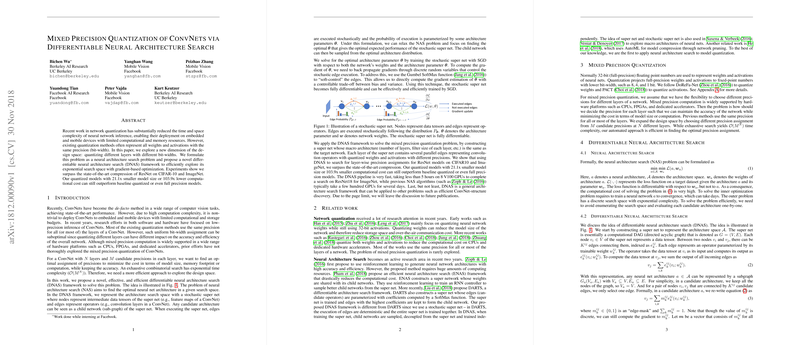

- Differentiable Neural Architecture Search (DNAS): DNAS represents every candidate architecture in a predefined search space with a single stochastic super network. Sampling a discrete choice among candidate operators is not differentiable. The framework therefore uses the Gumbel-Softmax relaxation to make the sampling step differentiable, so the architecture parameters can be optimized with gradient descent to find the best configuration.

- Fast Search Process: DNAS is computationally efficient. A full search for ResNet18 on ImageNet completes in less than five hours on 8 V100 GPUs, compared with the days required by reinforcement-learning-based NAS approaches.

- Extensive Experiments: Experiments with ResNet architectures on CIFAR-10 and ImageNet show that the quantized models are substantially smaller and cheaper to compute than full precision and other quantized baselines, while matching or exceeding their accuracy.
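The super-network idea in the DNAS bullet above can be sketched for a single edge. The same linear layer is evaluated at every candidate bit-width, and the outputs are mixed with Gumbel-Softmax weights computed from per-candidate architecture parameters `theta`. This is a forward-pass sketch only, under stated assumptions. The quantizer, the candidate bit-widths and the helper names (`supernet_edge`, `chosen_bits`) are hypothetical. Actually training `theta` requires backpropagating through the relaxed mixture with an autodiff framework, which is omitted here.

```python
import math
import random


def gumbel_softmax(logits, temperature, rng):
    """Relaxed one-hot sample: softmax((logits + Gumbel noise) / temperature)."""
    perturbed = []
    for logit in logits:
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)  # keep the logs finite
        gumbel = -math.log(-math.log(u))                # standard Gumbel(0, 1) noise
        perturbed.append((logit + gumbel) / temperature)
    peak = max(perturbed)                                # subtract max for stability
    exps = [math.exp(p - peak) for p in perturbed]
    total = sum(exps)
    return [e / total for e in exps]


def quantize(values, bits):
    """Simple symmetric uniform quantizer (an assumption, not the paper's exact scheme)."""
    if bits < 2:
        raise ValueError("this sketch supports bit-widths of 2 or more")
    max_abs = max(abs(v) for v in values) or 1.0
    scale = max_abs / (2 ** (bits - 1) - 1)
    return [round(v / scale) * scale for v in values]


def supernet_edge(x, weights, theta, candidate_bits, temperature, rng):
    """Hypothetical DNAS-style edge: run the same linear layer at every candidate
    bit-width and mix the outputs with Gumbel-Softmax weights derived from the
    architecture parameters `theta` (one per candidate)."""
    mix = gumbel_softmax(theta, temperature, rng)
    outputs = [
        sum(xi * wi for xi, wi in zip(x, quantize(weights, bits)))
        for bits in candidate_bits
    ]
    return sum(m * out for m, out in zip(mix, outputs)), mix, outputs


def chosen_bits(theta, candidate_bits):
    """After search, keep the candidate with the largest architecture parameter."""
    best = max(range(len(theta)), key=lambda i: theta[i])
    return candidate_bits[best]


rng = random.Random(0)
x = [0.5, -1.0, 2.0, 0.25]
w = [0.3, -0.7, 1.1, 0.05]
candidate_bits = [2, 4, 8]
theta = [0.0, 0.5, 0.2]  # hypothetical learned architecture parameters
y, mix, outputs = supernet_edge(x, w, theta, candidate_bits, temperature=1.0, rng=rng)
print("mixing weights:", [round(m, 3) for m in mix])
print("edge output:", round(y, 4))
print("selected bit-width:", chosen_bits(theta, candidate_bits))  # selected bit-width: 4
```

The temperature controls how close the mixture is to a one-hot choice. Lowering it makes the edge behave more like a single sampled candidate. Because every candidate is evaluated on each forward pass, the cost of the super network grows with the number of candidate bit-widths.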

Results and Implications

The paper reports strong quantitative results. Mixed precision quantized ResNet models achieve up to 21.1x smaller model size or up to 103.9x lower computational cost, while matching or exceeding the accuracy of full precision models. For instance, ResNet18 quantized with DNAS achieved higher accuracy than its full precision counterpart on ImageNet while reducing model size by a factor of 11.2.
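Compression factors like these are ratios against a 32-bit full precision baseline. The minimal sketch below shows that arithmetic under two assumptions. Model size is taken as the sum of parameters times weight bit-width. Compute cost is taken as the sum of multiply-accumulates times weight bits times activation bits, and the paper's exact cost definitions may differ. The `Layer` fields, layer sizes and bit-widths are made up for illustration and are not figures from the paper.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    params: int       # number of weights
    macs: int         # multiply-accumulate operations per inference
    weight_bits: int
    act_bits: int


def model_size_bits(layers):
    """Storage for all weights, in bits."""
    return sum(layer.params * layer.weight_bits for layer in layers)


def compute_cost(layers):
    """Assumed cost model: each MAC costs weight_bits * act_bits units."""
    return sum(layer.macs * layer.weight_bits * layer.act_bits for layer in layers)


def compression_ratios(layers):
    """Size and compute reduction relative to the same layers at 32 bits."""
    full = [Layer(layer.name, layer.params, layer.macs, 32, 32) for layer in layers]
    return (model_size_bits(full) / model_size_bits(layers),
            compute_cost(full) / compute_cost(layers))


# Hypothetical two-layer network with a mixed precision assignment.
net = [
    Layer("conv1", params=1_000, macs=100_000, weight_bits=8, act_bits=8),
    Layer("conv2", params=9_000, macs=50_000, weight_bits=2, act_bits=4),
]
size_ratio, cost_ratio = compression_ratios(net)
print(f"model size: {size_ratio:.1f}x smaller, compute: {cost_ratio:.1f}x cheaper")
# model size: 12.3x smaller, compute: 22.6x cheaper
```

Under this assumed cost model, compute cost depends on both weight and activation bit-widths, while model size depends only on weight bit-widths. The two ratios can therefore differ considerably for the same network.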

These results have both practical and methodological implications. Practically, substantially reducing computational cost and model size without sacrificing accuracy makes it possible to run state-of-the-art networks on devices with limited processing power and memory. Methodologically, the work shows that a design problem with an exponentially large discrete search space can be handled with gradient-based optimization, rather than the exhaustive search methods that had dominated.

Future Directions

The DNAS framework is not specific to mixed precision quantization and can be applied to other neural architecture search problems, such as discovering efficient ConvNet structures. Future research could apply it to other types of neural networks, such as recurrent or transformer models. It could also integrate hardware-specific optimizations so that the quantized models better match real-world deployment on diverse hardware.

Overall, this paper advances ConvNet quantization by combining neural architecture search with precision optimization through differentiable methods, setting a precedent for future research on efficient neural networks.