An Evaluation of the WikiHow Large-scale Text Summarization Dataset

The paper "WikiHow: A Large Scale Text Summarization Dataset" makes a significant contribution to NLP, and specifically to text summarization. Authored by Mahnaz Koupaee and William Yang Wang, it presents a new summarization dataset derived from the WikiHow online knowledge base. With over 230,000 article-summary pairs, WikiHow is designed to address three limitations of existing datasets: their reliance on news articles and the stylistic conventions of news writing, their limited size, and the low level of abstraction their summaries require.

Summary and Motivation

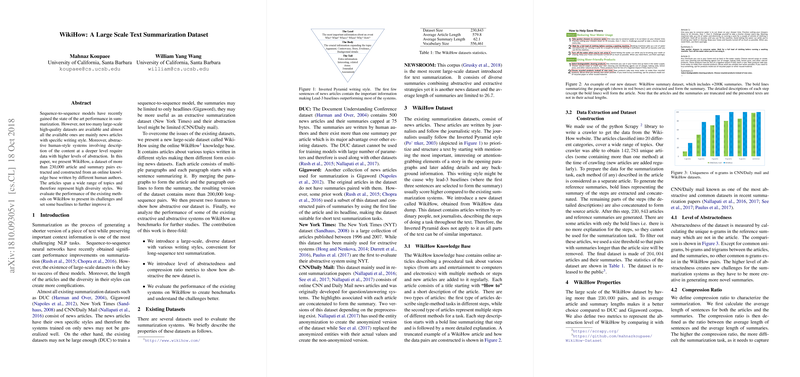

Text summarization is an essential NLP task, and sequence-to-sequence models have achieved state-of-the-art results on it. However, progress has been constrained by the availability of large and diverse datasets. Existing datasets such as DUC, Gigaword, New York Times, CNN/Daily Mail, and NEWSROOM consist primarily of news articles written in the "Inverted Pyramid" style, which places the most important information in the opening sentences. Models trained on such data can exploit this positional bias instead of learning to abstract, and they tend to generalize poorly to other genres. The WikiHow dataset broadens content diversity with procedural articles on a wide range of topics written by non-journalists. This avoids the structural biases of news-based datasets and makes WikiHow better suited to training models that must handle varied writing styles and levels of abstraction.

Key Contributions

- Dataset Construction: WikiHow is built from step-by-step explanatory articles on the WikiHow platform, covering diverse topics such as arts, entertainment, and electronics. The dataset was created with an automatic extraction process that pairs each article's content with a corresponding summary, in a format suitable for both extractive and abstractive summarization.

- Abstraction and Compression Metrics: The authors introduce two metrics to characterize how difficult a summary is to reconstruct from its article: the level of abstractedness, measured by the unique n-grams in the summary that do not appear in the article, and the compression ratio, which reflects how much the article is reduced to produce the summary. By these measures, WikiHow is quantitatively more challenging for abstractive systems than datasets such as CNN/Daily Mail.

- Benchmarks: The paper evaluates existing summarization techniques on WikiHow, including TextRank, sequence-to-sequence models with attention, and pointer-generator networks, to establish baseline performance and highlight the challenges the dataset poses. The results indicate that systems which often achieve high scores on traditional news datasets leave substantial room for improvement on WikiHow's more diverse and abstract content.
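The automatic extraction described under Dataset Construction can be illustrated with a minimal sketch. It assumes, as a simplification, that each how-to step consists of a short headline sentence followed by body text, and that a summary is formed by joining the headlines while the article is formed by joining the remaining text. The `Step` type and the `build_pair` function are hypothetical names introduced for illustration, and scraping the WikiHow pages themselves is out of scope.

```python
from typing import List, Tuple

# Hypothetical representation (an assumption, not the paper's data format):
# each step is a (headline, body) pair, where the headline is the short
# sentence that opens the step on the page.
Step = Tuple[str, str]


def build_pair(steps: List[Step]) -> Tuple[str, str]:
    """Turn one how-to article into an (article, summary) pair.

    Assumed scheme: the summary concatenates the step headlines and the
    article concatenates the remaining step text. Empty parts are skipped.
    """
    headlines = [h.strip() for h, _ in steps if h.strip()]
    bodies = [b.strip() for _, b in steps if b.strip()]
    summary = " ".join(headlines)
    article = " ".join(bodies)
    return article, summary


if __name__ == "__main__":
    steps = [
        ("Gather your supplies.", "You will need flour, water, and a bowl."),
        ("Mix the dough.", "Combine the ingredients and knead for ten minutes."),
    ]
    article, summary = build_pair(steps)
    print(summary)  # Gather your supplies. Mix the dough.
```

Keeping headline and body separate in the input makes the same pairs usable for both extractive training (the summary sentences are recoverable spans of the page) and abstractive training (the summary text is absent from the article text).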
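The two metrics in the Abstraction and Compression Metrics bullet can be sketched as follows. This is a minimal version under stated assumptions, not the authors' exact implementation: tokens are lowercased whitespace-separated words, abstractedness is the share of distinct summary n-grams absent from the article, and compression ratio is article length divided by summary length in tokens. The function names `novel_ngram_ratio` and `compression_ratio` are hypothetical.

```python
from typing import List, Tuple


def ngrams(tokens: List[str], n: int) -> List[Tuple[str, ...]]:
    """Return all contiguous n-grams of a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def novel_ngram_ratio(article: str, summary: str, n: int = 2) -> float:
    """Fraction of distinct summary n-grams that never occur in the article.

    Assumption: lowercased whitespace tokens. Higher values mean the
    summary relies less on copying article spans (more abstractive).
    """
    art = set(ngrams(article.lower().split(), n))
    summ = set(ngrams(summary.lower().split(), n))
    if not summ:
        return 0.0
    return len(summ - art) / len(summ)


def compression_ratio(article: str, summary: str) -> float:
    """Article length divided by summary length, in whitespace tokens."""
    summary_len = len(summary.split())
    if summary_len == 0:
        raise ValueError("summary must contain at least one token")
    return len(article.split()) / summary_len


if __name__ == "__main__":
    article = "the cat sat on the mat all day"
    summary = "the cat rested"
    print(novel_ngram_ratio(article, summary, n=2))  # 0.5
    print(round(compression_ratio(article, summary), 2))  # 2.67
```

In the example, the bigram "the cat" is copied from the article while "cat rested" is new, giving a ratio of 0.5. A higher ratio indicates that a summary cannot be assembled by copying article spans, which is the property behind the claim that WikiHow is harder for abstractive systems.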

Implications and Future Directions

The WikiHow dataset is significant because it offers a new large-scale resource with varied content and writing styles. Because it departs from the news-centric scope of earlier resources, it enables the study of models that must operate under varied abstraction requirements and summarize complex procedural texts. Researchers can use it to improve model generalization and to develop techniques that handle the greater complexity and abstraction of its data.

From a research perspective, WikiHow's abstraction properties make it a practical testbed for advancing abstractive summarization models and for investigating strategies such as content synthesis and creative text generation. Future work may use the dataset to develop improved modeling strategies, such as hierarchical attention mechanisms or graph-based methods that better capture and summarize procedural content.

In conclusion, the WikiHow dataset is a substantial addition to the available text summarization corpora. It encourages progress in NLP and supports the development of systems better able to handle a broader range of textual structures and abstraction levels. It is likely to inspire further research as the field moves toward language understanding systems that generalize across domains and genres.