The paper "Automatic Judgment Prediction via Legal Reading Comprehension" focuses on enhancing the accuracy of automated judgment prediction systems in civil law cases by introducing a framework known as Legal Reading Comprehension (LRC). This research moves beyond traditional text classification models by formalizing the task as a reading comprehension problem that leverages the complex semantic interactions between case materials and legal statutes.

Key Contributions:

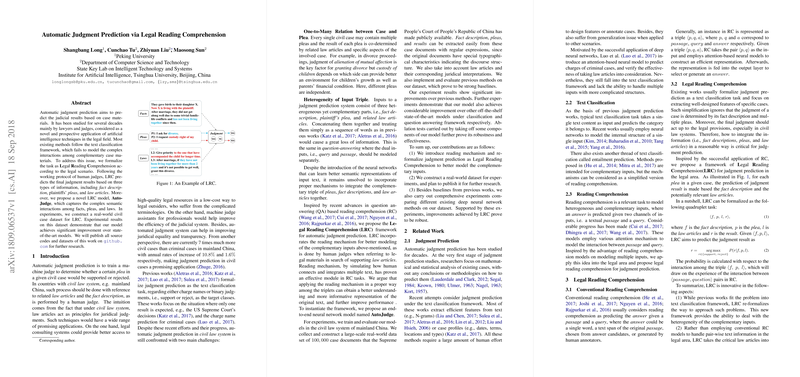

- Framing the Problem as Legal Reading Comprehension (LRC): The authors reformulate judgment prediction as a legal reading comprehension task, a paradigm shift from mere text classification. This approach models the judicial decision-making process by considering the interactions between three types of information: fact descriptions, plaintiffs' pleas, and relevant law articles.

- Introduction of AutoJudge Model: A novel model named AutoJudge is proposed to instantiate the LRC framework. This model utilizes pair-wise mutual attention mechanisms to capture and process the semantic interactions between the heterogeneous inputs of fact descriptions, pleas, and law articles. The reading comprehension design is inspired by question answering systems, adopting a similar attention-based approach to enhance interpretability and accuracy.

- Dataset Construction: The authors collect and preprocess a substantial dataset consisting of 100,000 real-world civil cases from the Supreme People's Court of China’s database. The dataset is specifically designed to reflect diverse pleas and judicial decisions, especially in divorce proceedings, which are characterized by multiple independent issues such as custody and the granting of divorce.
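
To make the three input types concrete, the following is a minimal sketch of how one LRC example might be represented. The class name `LRCInstance`, the helper `split_by_plea`, the binary label, and the one-instance-per-plea layout are all assumptions made for illustration; they are not the schema used by the authors.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LRCInstance:
    """One judgment-prediction example (hypothetical schema)."""
    fact: str                # fact description of the case
    plea: str                # a single plea raised by the plaintiff
    law_articles: List[str]  # text of the law articles considered relevant
    label: int               # assumed encoding: 1 = plea supported, 0 = rejected


def split_by_plea(fact: str, pleas: List[str], law_articles: List[str],
                  labels: List[int]) -> List[LRCInstance]:
    """Turn one case with several independent pleas into one instance per plea."""
    if len(pleas) != len(labels):
        raise ValueError("each plea needs exactly one label")
    return [LRCInstance(fact, plea, list(law_articles), label)
            for plea, label in zip(pleas, labels)]


# Placeholder texts, not real case data.
instances = split_by_plea(
    fact="The parties married in 2010 and have one child.",
    pleas=["grant the divorce", "award custody to the plaintiff"],
    law_articles=["(placeholder text of a relevant article)"],
    labels=[1, 0],
)
```

Splitting by plea reflects the observation above that a divorce case can contain several independent issues, each of which may be decided differently.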

Methodological Insights:

- Text Encoding and Pair-Wise Attentive Reader:

The model first encodes the fact descriptions, pleas, and law articles with bidirectional GRUs. A pair-wise attentive reader then applies mutual attention between the facts and each plea or law article, extracting the parts of the facts that are relevant to that plea or article and enriching the learned representations.

- Output Layer:

A convolutional neural network (CNN) layer processes the concatenated outputs of the attentive reader to capture local structure in the sequence before the final prediction. This layer condenses the attended sequence into the features used for the decision, loosely mirroring how a human reader summarizes what has been read before deciding.
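
The two stages above can be sketched in a few lines of plain Python. This is a simplified illustration, not the authors' formulation: it assumes the token vectors have already been produced by an encoder, uses generic dot-product attention in place of the paper's exact attention equations, and uses a single hand-set convolution filter. All function names and numbers are hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)                      # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def attend(queries, keys):
    """For each query vector, return the attention-weighted average of `keys`."""
    dim = len(keys[0])
    out = []
    for q in queries:
        weights = softmax([dot(q, k) for k in keys])
        out.append([sum(w * k[j] for w, k in zip(weights, keys))
                    for j in range(dim)])
    return out


def mutual_attention(facts, other):
    """Attend in both directions between facts and a plea or law article."""
    return attend(facts, other), attend(other, facts)


def conv1d_maxpool(seq, kernel):
    """Slide one filter (width x dim weights) over `seq` and max-pool the responses."""
    width = len(kernel)
    responses = [sum(dot(seq[i + j], kernel[j]) for j in range(width))
                 for i in range(len(seq) - width + 1)]
    return max(responses)


# Toy encoded sequences (assumed to come from an upstream encoder).
facts = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
plea = [[1.0, 0.0], [0.0, 1.0]]

fact_aware, plea_aware = mutual_attention(facts, plea)
# Concatenate each fact vector with its plea-aware summary.
reader_out = [f + a for f, a in zip(facts, fact_aware)]
kernel = [[0.5] * 4, [0.5] * 4]      # one filter of width 2, hand-set weights
score = conv1d_maxpool(reader_out, kernel)
prob = 1.0 / (1.0 + math.exp(-score))  # probability that the plea is supported
```

In a trained model the filter weights and encoder would be learned jointly; here they are fixed so that the data flow from attention to convolution to prediction is easy to follow.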

Experimental Results:

The proposed AutoJudge model outperforms the state-of-the-art baselines it is compared against, which include both neural text classification models and reading comprehension models applied to legal texts. The improvements appear in precision, recall, F1-score, and accuracy, highlighting the effectiveness of integrating law articles through an attention mechanism.
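
For readers less familiar with these four metrics, the sketch below computes them for a binary task. The labels are toy values chosen for illustration and are not results from the paper; treating "plea supported" as the positive class is an assumption, and the paper's averaging scheme may differ.

```python
def binary_metrics(y_true, y_pred):
    """Return precision, recall, F1, and accuracy for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    return {"precision": precision, "recall": recall,
            "f1": f1, "accuracy": accuracy}


# Toy labels: 3 true positives, 1 false positive, 1 false negative, 1 true negative.
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 0, 1, 1, 1]
metrics = binary_metrics(y_true, y_pred)
# precision = 3/4, recall = 3/4, f1 = 0.75, accuracy = 4/6
```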

Ablation Studies and Observations:

- Importance of Reading Mechanisms:

Removing the reading mechanism causes a substantial drop in performance, which underscores its importance. The attention mechanism contributes significantly by integrating law articles effectively.

- Role of Law Articles:

While simply including law articles is beneficial, how they are preprocessed and selected is crucial. Experiments show that using ground-truth articles, or filtering candidate articles with unsupervised methods, improves model performance.

- Data Preprocessing:

Strategies such as name replacement and law article filtration are shown to further enhance the model's capacity to generalize and predict judicial outcomes.
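
A rough sketch of what these two preprocessing steps could look like follows. The source does not specify the procedures, so everything here is an assumption: the placeholder tokens, the function names, and especially the use of Jaccard word overlap, which stands in for whatever unsupervised filter the authors used. The article texts are invented placeholders, not statute text.

```python
def replace_names(text, names_to_roles):
    """Replace each known party name with a role placeholder (longest names first)."""
    for name in sorted(names_to_roles, key=len, reverse=True):
        text = text.replace(name, names_to_roles[name])
    return text


def jaccard(a, b):
    """Word-level Jaccard overlap between two strings."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


def filter_articles(fact, articles, top_k=2):
    """Keep the `top_k` articles with the highest lexical overlap with the facts."""
    ranked = sorted(articles, key=lambda art: jaccard(fact, art), reverse=True)
    return ranked[:top_k]


fact = ("Alice Wang asks the court to grant a divorce from Bob Li "
        "and award custody of their child.")
clean = replace_names(fact, {"Alice Wang": "<PLAINTIFF>", "Bob Li": "<DEFENDANT>"})

articles = [
    "divorce shall be granted if mediation fails",
    "custody of a child after divorce is decided by the court",
    "ownership transfers upon delivery under sales contract",
]
kept = filter_articles(clean, articles, top_k=2)
```

Replacing names removes tokens that carry no legal meaning and would otherwise be memorized per case, while filtering keeps the reader focused on articles that plausibly bear on the facts.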

In conclusion, the paper not only proposes a novel methodological framework but also provides empirical evidence supporting the effectiveness of Legal Reading Comprehension models over traditional approaches, paving the way for more nuanced applications in the automation of legal judgments. Future work may expand toward handling more complex judgment forms and exploring additional civil case scenarios.