CoQA: A Conversational Question Answering Challenge

The paper "CoQA: A Conversational Question Answering Challenge," by Siva Reddy, Danqi Chen, and Christopher D. Manning, introduces a dataset for building and evaluating conversational question answering systems. Unlike single-turn reading comprehension datasets, CoQA consists of multi-turn conversations in which each question builds on the preceding questions and answers about a text passage. The dataset is designed to support the development and benchmarking of systems that can hold such conversations about passages from diverse domains.

Key Characteristics of CoQA

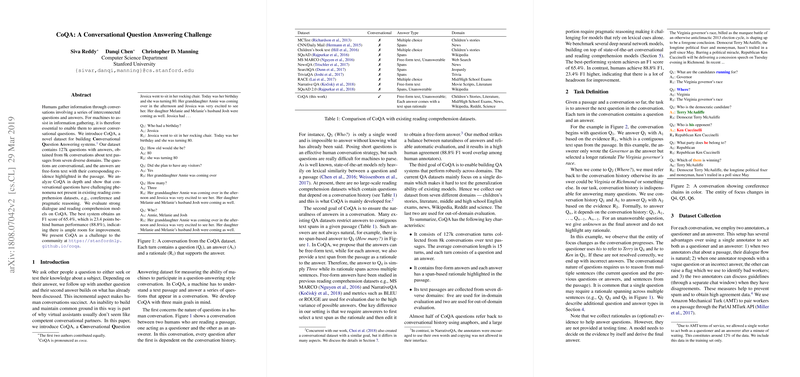

CoQA comprises 127,000 questions with answers, collected from about 8,000 conversations about passages from seven diverse domains. The dataset includes:

- Passages from seven domains: children's stories, literature, middle and high school exams, news, Wikipedia, Reddit, and science.

- Each turn pairs a free-form answer with a rationale, a span of the passage that supports the answer.

- The conversations exhibit phenomena such as coreference and pragmatic reasoning.

- The dataset is split into training, development, and test sets; the Reddit and Science domains appear only in the test set, where they are used for out-of-domain evaluation.
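To make the structure above concrete, here is a minimal sketch of how one CoQA-style conversation could be held in memory, assuming Python 3.9+. The class names, field names, and domain labels are hypothetical illustrations, not the released JSON schema; the point is that each turn stores both a free-form answer and the character offsets of its rationale, and that out-of-domain passages are restricted to the test split.

```python
from dataclasses import dataclass, field

# Hypothetical domain labels; the released data uses its own source names.
IN_DOMAIN = {"children_stories", "literature", "school_exams", "news", "wikipedia"}
OUT_OF_DOMAIN = {"reddit", "science"}  # held out: test set only


@dataclass
class Turn:
    question: str
    answer: str           # free-form answer written by the annotator
    rationale_start: int  # character offset where the supporting span begins
    rationale_end: int    # exclusive end offset of the supporting span


@dataclass
class Conversation:
    domain: str
    passage: str
    turns: list[Turn] = field(default_factory=list)

    def rationale(self, i: int) -> str:
        """Return the passage text that supports the answer of turn i."""
        t = self.turns[i]
        return self.passage[t.rationale_start:t.rationale_end]


def allowed_splits(conv: Conversation) -> set[str]:
    """Out-of-domain conversations may appear only in the test set."""
    if conv.domain in OUT_OF_DOMAIN:
        return {"test"}
    if conv.domain in IN_DOMAIN:
        return {"train", "dev", "test"}
    raise ValueError(f"unknown domain: {conv.domain}")


conv = Conversation(
    domain="wikipedia",
    passage="The library opened in 1901.",
    turns=[Turn("When did the library open?", "in 1901", 12, 26)],
)
print(conv.rationale(0))               # opened in 1901
print(sorted(allowed_splits(conv)))    # ['dev', 'test', 'train']
```

Note that the rationale ("opened in 1901") and the free-form answer ("in 1901") differ: the rationale is evidence, while the answer is what the annotator actually wrote.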

Dataset Analysis

CoQA differs from earlier reading comprehension datasets such as SQuAD in its conversational nature: many of its questions can only be understood in light of the conversation history, because they involve anaphora (for example, a pronoun referring to an entity from an earlier turn) or pragmatic reasoning. The paper's analysis shows:

- 33.2% of answers do not appear verbatim as a span of the passage, so systems must be able to produce free-form answers rather than only extract spans.

- Because its passages span multiple domains, including two held out for out-of-domain testing, the dataset tests how well systems generalize.

- Because each free-form answer comes with its rationale, the dataset supports both natural answer generation and span-based modeling, while evaluation compares predictions against the free-form answers.
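The 33.2% figure is the paper's own result. The sketch below shows one simple way to estimate a comparable statistic on a sample of (answer, passage) pairs; it assumes lowercased ASCII alphanumeric tokenization and exact contiguous token matching, which is our illustrative choice and not necessarily the procedure the authors used.

```python
import re


def _tokens(text: str) -> list[str]:
    # Lowercase and keep only ASCII letter/digit runs, so punctuation and
    # spacing differences are ignored (non-ASCII letters are dropped).
    return re.findall(r"[a-z0-9]+", text.lower())


def appears_verbatim(answer: str, passage: str) -> bool:
    """True if the answer's tokens occur as a contiguous run in the passage."""
    a, p = _tokens(answer), _tokens(passage)
    if not a:
        return False
    n = len(a)
    return any(p[i:i + n] == a for i in range(len(p) - n + 1))


def non_span_rate(pairs: list[tuple[str, str]]) -> float:
    """Fraction of (answer, passage) pairs whose answer is not a passage span."""
    if not pairs:
        return 0.0
    misses = sum(not appears_verbatim(a, p) for a, p in pairs)
    return misses / len(pairs)


passage = "The library opened in 1901. A local merchant paid for it."
pairs = [("in 1901", passage), ("yes", passage), ("A local merchant", passage)]
print(non_span_rate(pairs))  # 0.3333333333333333
```

Here "yes" counts as a non-span answer: a yes/no reply is a natural answer that an extractive model cannot copy from the passage.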

Experimental Evaluations

The paper benchmarks several models to evaluate their performance on CoQA:

- Seq2Seq model: performed worst, as it tended to generate frequently occurring answers that were irrelevant to the passage and question.

- Pointer-Generator Network (PGNet): improved on Seq2Seq by adding a copy mechanism that lets it copy words from the passage.

- Document Reader (DrQA): performed better still by extracting answers as spans of the passage, but cannot produce answers that do not appear verbatim in it.

- DrQA with yes/no augmentation: extended to output yes and no answers, which a pure span extractor cannot produce, and significantly outperformed vanilla DrQA.

- Combined model (DrQA+PGNet): DrQA first predicts the rationale span, and PGNet then generates the free-form answer from it. This pipeline achieved competitive performance, showing the value of combining span prediction with answer generation.
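Every model above must condition on the conversation history as well as the passage, since questions like "Where is it?" are uninterpretable on their own. A minimal sketch of one common approach, prepending the last k question-answer turns to the current question, follows. The function name, the `<Q>`/`<A>` marker strings, and the default k are illustrative assumptions, not the paper's exact configuration.

```python
def build_question_with_history(
    history: list[tuple[str, str]],
    question: str,
    k: int = 2,
    q_tag: str = "<Q>",
    a_tag: str = "<A>",
) -> str:
    """Prepend the last k (question, answer) turns to the current question.

    The marker strings and default k are illustrative choices.
    """
    recent = history[-k:] if k > 0 else []  # history[-0:] would return everything
    parts: list[str] = []
    for q, a in recent:
        parts.extend([q_tag, q, a_tag, a])
    parts.extend([q_tag, question])
    return " ".join(parts)


history = [("Who paid for the library?", "a local merchant"), ("When did it open?", "in 1901")]
print(build_question_with_history(history, "Where is it?", k=1))
# <Q> When did it open? <A> in 1901 <Q> Where is it?
```

A larger k gives the model more context for resolving references such as "it", at the cost of longer inputs.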

The best-performing model achieved an F1 score of 65.4, while human annotators scored 88.8, a gap of 23.4 points that leaves substantial room for improvement. Models also performed worse on domains with more complex language or fewer training examples than on well-represented domains such as Wikipedia.
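The F1 scores above measure word overlap between a predicted answer and the human answers. The sketch below uses SQuAD-style normalization (lowercasing, stripping punctuation and the articles a/an/the). Following our understanding of the official CoQA evaluation script, `turn_f1` also averages over leave-one-out subsets of the human answers, so that a model is scored against the same number of references as a held-out human; treat that averaging detail as an assumption and use the official script for any reported numbers.

```python
import re
import string
from collections import Counter

ARTICLES = re.compile(r"\b(a|an|the)\b")


def normalize(text: str) -> list[str]:
    """Lowercase, drop punctuation and articles, then split on whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = ARTICLES.sub(" ", text)
    return text.split()


def f1(gold: str, pred: str) -> float:
    """Word-level F1 between one reference answer and one prediction."""
    g, p = normalize(gold), normalize(pred)
    if not g or not p:
        # Empty answers only score when both sides are empty.
        return float(g == p)
    common = Counter(g) & Counter(p)  # multiset intersection of tokens
    overlap = sum(common.values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(p)
    recall = overlap / len(g)
    return 2 * precision * recall / (precision + recall)


def turn_f1(golds: list[str], pred: str) -> float:
    """Average, over each held-out reference, of the best F1 against the rest."""
    if len(golds) == 1:
        return f1(golds[0], pred)
    total = 0.0
    for i in range(len(golds)):
        others = golds[:i] + golds[i + 1:]
        total += max(f1(g, pred) for g in others)
    return total / len(golds)


print(round(f1("the cat sat", "a cat sat down"), 3))                      # 0.8
print(round(turn_f1(["in 1901", "1901", "in the year 1901"], "1901"), 3))  # 0.889
```

Because this metric rewards shared words, a correct paraphrase with little lexical overlap can still score poorly.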

Implications and Future Directions

CoQA is an important step toward QA systems that can handle multi-turn conversations. Its diverse domains and its emphasis on conversational dependencies push the limits of existing models and encourage approaches that integrate coreference resolution, contextual understanding, and natural language generation.

Future research stimulated by CoQA could involve:

- Advancements in multi-turn reasoning and memory models to capture long-term dependencies in dialogues.

- Improved evaluation metrics that account for answer fluency and semantic correctness rather than mere word overlap.

- Exploration of transfer learning techniques to better handle out-of-domain questions, for example by leveraging pretrained language models such as BERT.

In summary, CoQA provides a challenging benchmark that exposes key areas for progress in conversational AI and moves machine comprehension toward human-like dialogue. Its baseline results, together with the weaknesses they reveal, point to research directions for closing the gap between current systems and human annotators.